Archive

A Pointed note on Martin Luther King, Jr Day

Three years ago I was out for a walk in Haiti when I met this boy who asked me to photograph him pointing to God. Aggrieved by the president’s recent disrespectful comments about Haiti, I am posting this as a reminder of what even the youngest of Haitian children know: There is a power higher than that of any president.

The Chef Shortage, Explained

This is a guest post by Sam Kanson-Benanav, a chef who has managed restaurants in Minnesota, Wisconsin, and New York City. He spent two years studying global resource marketplaces in the Amazon rainforest, and his favorite food is a French omelet.

Despite my desperate attempts at a career change, I’ve become fairly inured to the fact I work in one of the most job secure industries in America. And I’m not a tenured professor.

I am a professional restaurant person – cook, manager, server, and bartender (on nights when a bartender doesn’t show up). As a recent Washington Post article highlights, it has become increasingly difficult for kitchens to staff their teams with proper talent. We could ponder a litany of reasons why talented cooks are not flocking to the kitchens, but if you prefer to stop reading now, just reference Mathbabe’s entirely accurate post on labor shortages.

Or, we could just pay cooks more. As it turns out, money is a very effective motivator, but restaurants employ two cannibalizing labor models based on fundamentally contrasting motivators: tipping and wages. I’ll take these on separately.

Tipping servers suppresses wages for the kitchen

We already know tipping is a bad system, which bears less correlation to the actual quality of service you receive than to the color or gender of your server. It’s an external rewards based system akin to paying your employees a negligible wage with a constant cash bonus, a historically awful way to run a business.

In other words, restaurant owners are able to pass off the cost of labor for employing servers onto their consumers. That means they factor into their menu prices only the cost of labor for the kitchen, which remains considerable in the labor-intensive low margin restaurant world. Thankfully, we are all alcoholics and willing to pay 400% markups on our beer and only a 30% markup on our burgers. Nevertheless, the math here rarely works in a cook’s favor.

For a restaurant to remain a viable business, a cook’s (and dishwasher’s) hourly wage must be low, even as bartenders and servers walk away with considerably more cash.

In the event that a restaurant, under this conventional model, would like to raise its prices and better compensate its cooks, it cannot do so without also raising wages for its servers. Every dollar increase in the price of a line item on your receipt increases a consumer’s cost by $1.20, with the server happily pocketing the difference.
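To make that arithmetic concrete, here is a minimal sketch. It assumes a customary 20% tip (the rate implied by the $1.20 figure) and ignores sales tax; the function name `customer_cost` is hypothetical.

```python
def customer_cost(price_increase, tip_rate=0.20):
    """Return (total extra cost to the diner, extra tip to the server).

    tip_rate=0.20 is an assumption: the tip percentage implied by the
    $1.20 figure in the post. Sales tax is ignored here.
    """
    extra_tip = price_increase * tip_rate
    return price_increase + extra_tip, extra_tip


total, tip = customer_cost(1.00)
print(f"diner pays ${total:.2f} more; server keeps ${tip:.2f} of it")
```

Under that assumption, a dollar meant for the kitchen costs the diner more than a dollar, and the increment goes to the front of house.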

Unfair? Yes. Inefficient? Certainly. Is change possible? Probably not.

Let’s assume change is possible

Some restaurants are doing away with this trend, in a worthy campaign to better price the cost of your meal, and compensate cooks more for their work. These restaurants charge a 20% administration fee, which becomes part of their combined revenue—the total pool of cash from which they can pay all their employees at set hourly rates.

That’s different than an automatic service fee you might find at the end of your bill at a higher-end restaurant or when dining with a large group. It’s a pre-tax charge that repackages the cost of a meal by charging the consumer a combined 30% (8% sales tax on top of a 20% service charge), allowing business owners to allocate funds for labor at their discretion rather than obligating them to give it all to service staff.
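The "combined 30%" can be checked with a short sketch. It assumes the 8% sales tax is applied to the fee-inclusive subtotal, which is one reading of the description above; the function name and the $100 menu price are hypothetical.

```python
def total_multiplier(admin_fee=0.20, sales_tax=0.08):
    """Multiplier from menu price to final bill.

    Assumption: sales tax is charged on the subtotal *after* the
    administration fee has been added, so the two rates compound.
    """
    return (1 + admin_fee) * (1 + sales_tax)


menu_price = 100.00  # hypothetical bill before fee and tax
bill = menu_price * total_multiplier()
surcharge = total_multiplier() - 1
print(f"${menu_price:.2f} of food costs ${bill:.2f}, a {surcharge:.1%} surcharge")
```

If the tax were instead applied only to the menu price, the two rates would simply add to 28%; either way the total lands close to the 30% quoted.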

Under this model cooks now may make a stunning $15-$18 an hour, up from $12-$13, and servers $20-$30, which is, yes, down from their previous wages. That’s wealth redistribution in the restaurant world! For unscrupulous business owners, it could also incentivize further wage suppression by minimizing the share of the 20% administration fee that is used for labor, as busier nights no longer translate into higher tips for the service staff.

I am a progressive minded individual who recognizes the virtue of (sorry server, but let’s face it) fairer wages. Nevertheless, I’m concerned the precedents we’ve set for ourselves will make unilateral redistribution a lofty task.

There is not much incentive for an experienced server to take a considerable pay cut. The likelier outcome is a blurring of the lines between who is a server and who is a cook, or a dilution in the level of service generally.

Wage Growth

Indeed, wages are rising in the food industry, but at a paltry average of $12.48 an hour, there’s considerable room for growth before cooking becomes a viable career choice for the creative-minded and educated talent the industry thirsts for. Celebrity chefs may glamorize the industry, but their presence in the marketplace is more akin to celebrity than chef, and their salaries have little bearing on the real wage growth of the labor force.

Unlike in most other industries, a cook’s best chance at long-term financial security is to work their way into ownership. Cooking is not an ideal position to age into: the physicality of the work and the hours only become more grueling, and your wages will not increase substantially with time. This is all to say – if the restaurant industry wants more cooks, it needs to be willing to pay a higher price upfront for them. This is not just a New York problem complicated by sky-high rents. It’s as real in Wisconsin as it is in Manhattan.

Ultimately paying cooks more is a question of reconciling two contrasting payment models. That’s a question of redistribution.

But “whoa Sam – you are not an economist, this is purely speculative!” you say?

Possibly, and so far at least a couple of restaurants have been able to maintain normal operations under these alternative models, but their actions alone are unlikely to fill the labor shortage we see. Whether we are ultimately willing to pay servers less or pay considerably more for our meals remains to be seen, but, for what it’s worth, I’m currently looking for a serving job and I can tell you a few places I’m not applying to.

Guest post: Open-Source Loan-Level Analysis of Fannie and Freddie

This is a guest post by Todd Schneider. You can read the full post with additional analysis on Todd’s personal site.

[M]ortgages were acknowledged to be the most mathematically complex securities in the marketplace. The complexity arose entirely out of the option the homeowner has to prepay his loan; it was poetic that the single financial complexity contributed to the marketplace by the common man was the Gordian knot giving the best brains on Wall Street a run for their money. Ranieri’s instincts that had led him to build an enormous research department had been right: Mortgages were about math.

The money was made, therefore, with ever more refined tools of analysis.

—Michael Lewis, Liar’s Poker (1989)

Fannie Mae and Freddie Mac began reporting loan-level credit performance data in 2013 at the direction of their regulator, the Federal Housing Finance Agency. The stated purpose of releasing the data was to “increase transparency, which helps investors build more accurate credit performance models in support of potential risk-sharing initiatives.”

The GSEs went through a nearly $200 billion government bailout during the financial crisis, motivated in large part by losses on loans that they guaranteed, so I figured there must be something interesting in the loan-level data. I decided to dig in with some geographic analysis, an attempt to identify the loan-level characteristics most predictive of default rates, and more. The code for processing and analyzing the data is all available on GitHub.

The “medium data” revolution

In the not-so-distant past, an analysis of loan-level mortgage data would have cost a lot of money. Between licensing data and paying for expensive computers to analyze it, you could have easily incurred costs north of a million dollars per year. Today, in addition to Fannie and Freddie making their data freely available, we’re in the midst of what I might call the “medium data” revolution: personal computers are so powerful that my MacBook Air is capable of analyzing the entire 215 GB of data, representing some 38 million loans, 1.6 billion observations, and over $7.1 trillion of origination volume. Furthermore, I did everything with free, open-source software.

What can we learn from the loan-level data?

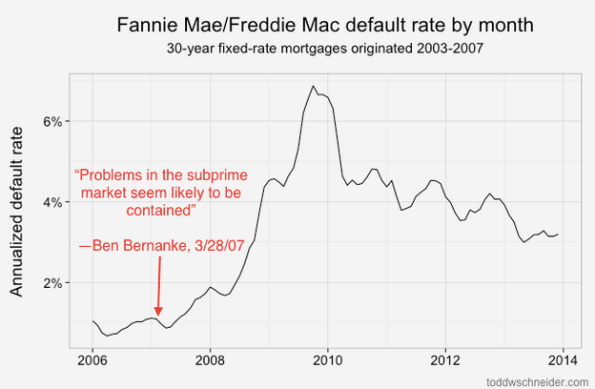

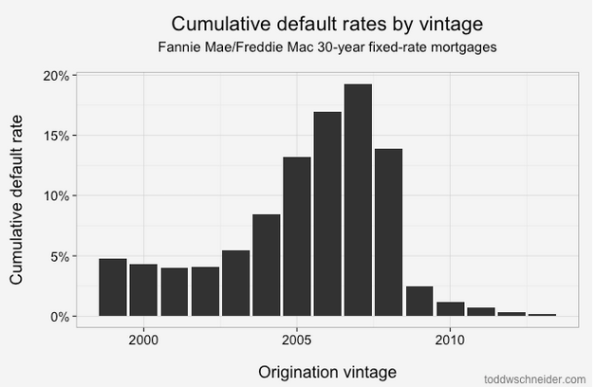

Loans originated from 2005-2008 performed dramatically worse than loans that came before them! That should be an extraordinarily unsurprising statement to anyone who was even slightly aware of the U.S. mortgage crisis that began in 2007:

About 4% of loans originated from 1999 to 2003 became seriously delinquent at some point in their lives. The 2004 vintage showed some performance deterioration, and then the vintages from 2005 through 2008 show significantly worse performance: more than 15% of all loans originated in those years became distressed.

From 2009 through present, the performance has been much better, with fewer than 2% of loans defaulting. Of course part of that is that it takes time for a loan to default, so the most recent vintages will tend to have lower cumulative default rates while their loans are still young. But there has also been a dramatic shift in lending standards so that the loans made since 2009 have been much higher credit quality: the average FICO score used to be 720, but since 2009 it has been more like 765. Furthermore, if we look 2 standard deviations from the mean, we see that the low end of the FICO spectrum used to reach down to about 600, but since 2009 there have been very few loans with FICO less than 680:

Tighter agency standards, coupled with a complete shutdown in the non-agency mortgage market, including both subprime and Alt-A lending, mean that there is very little credit available to borrowers with low credit scores (a far more difficult question is whether this is a good or bad thing!).

Geographic performance

Default rates increased everywhere during the bubble years, but some states fared far worse than others. I took every loan originated between 2005 and 2007, broadly considered to be the height of reckless mortgage lending, bucketed loans by state, and calculated the cumulative default rate of loans in each state:

4 states in particular jump out as the worst performers: California, Florida, Arizona, and Nevada. Just about every state experienced significantly higher than normal default rates during the mortgage crisis, but these 4 states, often labeled the “sand states”, experienced the worst of it.
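The bucketing described above can be sketched in a few lines. This is not the code from the GitHub project: the records are made-up toy data, the function name is hypothetical, and "defaulted" stands in for whatever delinquency definition the full analysis uses.

```python
from collections import defaultdict

# Hypothetical toy records: (state, origination_year, ever_defaulted)
loans = [
    ("CA", 2006, True), ("CA", 2006, False), ("CA", 2007, True),
    ("NV", 2005, True), ("NV", 2006, True), ("NV", 2007, False),
    ("TX", 2005, False), ("TX", 2006, False), ("TX", 2007, True),
    ("TX", 2003, False),  # outside the 2005-2007 window, so excluded
]


def cumulative_default_rate(records, key, years=range(2005, 2008)):
    """Share of loans in each group that ever defaulted.

    `key` maps (state, year) to the bucket label, so the same function
    can group by state or by origination year (vintage).
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [defaults, total]
    for state, year, defaulted in records:
        if year not in years:
            continue
        group = key(state, year)
        counts[group][0] += int(defaulted)
        counts[group][1] += 1
    return {group: d / n for group, (d, n) in counts.items()}


by_state = cumulative_default_rate(loans, key=lambda state, year: state)
for state, rate in sorted(by_state.items(), key=lambda kv: -kv[1]):
    print(f"{state}: {rate:.0%}")
```

Passing `key=lambda state, year: year` instead gives the per-vintage view used earlier in the post.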

Read more

If you’re interested in more technical discussion, including an attempt to identify which loan-level variables are most correlated with default rates (the number one being the home price adjusted loan to value ratio), read the full post on toddwschneider.com, and be sure to check out the project on GitHub if you’d like to do your own data analysis.

Gender And The Harvard Math Department

This is a guest post by Meena Boppana, a junior at Harvard and former president of the Harvard Undergraduate Math Association (HUMA). Meena is passionate about addressing the gender gap in math and has co-led initiatives including the Harvard math survey and the founding of the Harvard student group Gender Inclusivity in Math (GIIM).

I arrived at Harvard in 2012 head-over-heels in love with math. Encouraged to think mathematically since I was four years old by my feminist mathematician dad, I had even given a TEDx talk in high school declaring my love for the subject. I was certainly qualified and excited enough to be a math major.

Which is why, three years later, I think about how it is that virtually all my female friends with insanely strong math backgrounds (e.g. math competition stars) decided not to major in math (I chose computer science). This year, there were no female students in Math 55a, the most intense freshman math class, and only two female students graduating with a primary concentration in math. There are also a total of zero tenured women faculty in Harvard math.

So, I decided to do some statistical sleuthing and co-directed a survey of Harvard undergraduates in math. I was inspired by the work of Nancy Hopkins and other pioneering female scientists at MIT, who quantified gender inequities at the Institute – even measuring the square footage of their offices – and sparked real change. We got a 1/3 response rate among all math concentrators at Harvard, with 150 people in total (including related STEM concentrations) filling it out.

The main finding of our survey analysis is that the dearth of women in Harvard math is far more than a “pipeline issue” stemming from high school. So, the tale that women are coming in to Harvard knowing less math and consequently not majoring in math is missing much of the picture. Women are dropping out of math during their years at Harvard, with female math majors writing theses and continuing on to graduate school at far lower rates than their male math major counterparts.

And it’s a cultural issue. Our survey indicated that many women would like to be involved in the math department and aren’t, most women feel uncomfortable as a result of the gender gap, and women feel uncomfortable in math department common spaces.

Source: Harvard Math Survey

The simple act of talking about the gender gap has opened the floodgates to great conversations. I had always assumed that because no one was talking about the gender gap, no one cared. But after organizing a panel on gender in the math department, which drew 150 people, students and faculty alike, with a roughly equal gender split, I realized that my classmates of all genders feel more disempowered than apathetic.

The situation is bad, but certainly not hopeless. Together with a male freshman math major, I am founding a Harvard student group called Gender Inclusivity in Math (GIIM). The club has the two-fold goal of increasing community among women in math, including dinners, retreats, and a women speaker series, and also addressing the gender gap in the math department, continuing the trend of surveys and gender in math discussions. The inclusion of male allies is central to our club mission, and the support from male allies at the student and faculty level that we have received makes me optimistic about the will for change.

Ultimately, it is my continued love for math which has driven me to take action. Mathematics is too beautiful and important to lose 50 percent (or much more when considering racial and class-based inequities) of the potential population of math lovers.

Nick Kristof is not Smarter than an 8th Grader

This is a post by Eugene Stern, originally posted on his blog sensemadehere.wordpress.com.

About a week ago, Nick Kristof published this op-ed in the New York Times. Entitled Are You Smarter than an 8th Grader, the piece discusses American kids’ underperformance in math compared with students from other countries, as measured by standardized test results. Kristof goes over several questions from the 2011 TIMSS (Trends in International Mathematics and Science Study) test administered to 8th graders, and highlights how American students did worse than students from Iran, Indonesia, Ghana, Palestine, Turkey, and Armenia, as well as traditional high performers like Singapore. “We all know Johnny can’t read,” says Kristof, in that finger-wagging way perfected by the current cohort of New York Times op-ed columnists; “it appears that Johnny is even worse at counting.”

The trouble with this narrative is that it’s utterly, demonstrably false.

My friend Jordan Ellenberg pointed me to this blog post, which highlights the problem. In spite of Kristof’s alarmism, it turns out that American eighth graders actually did quite well on the 2011 TIMSS. You can see the complete results here. Out of 42 countries tested, the US placed 9th. If you look at the scores by country, you’ll see a large gap between the top 5 (Korea, Singapore, Taiwan, Hong Kong, and Japan) and everyone else. After that gap comes Russia, in 6th place, then another gap, then a group of 9 closely bunched countries: Israel, Finland, the US, England, Hungary, Australia, Slovenia, Lithuania, and Italy. Those made up, more or less, the top third of all the countries that took the test. Our performance isn’t mind-blowing, but it’s not terrible either. So what the hell is Kristof talking about?

You’ll find the answer here, in a list of 88 publicly released questions from the test (not all questions were published, but this appears to be a representative sample). For each question, a performance breakdown by country is given. When I went through the questions, I found that the US placed in the top third (top 14 out of 42 countries) on 45 of them, the middle third on 39, and the bottom third on 4. This seems typical of the kind of variance usually seen on standardized tests. US kids did particularly well on statistics, data interpretation, and estimation, which have all gotten more emphasis in the math curriculum lately. For example, 80% of US eighth graders answered this question correctly:

Which of these is the best estimate of (7.21 × 3.86) / 10.09?

(A) (7 × 3) / 10 (B) (7 × 4) / 10 (C) (7 × 3) / 11 (D) (7 × 4) / 11

More American kids knew that the correct answer was (B) than Russians, Finns, Japanese, English, or Israelis. Nice job, kids! And let’s give your teachers some credit too!
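For readers who want to check the answer, here is a small sketch that computes the exact value and picks the closest of the four estimates (the variable names are just for illustration).

```python
# Exact value of the expression in the test question
exact = (7.21 * 3.86) / 10.09

# The four rounded estimates offered as answer choices
options = {
    "A": (7 * 3) / 10,
    "B": (7 * 4) / 10,
    "C": (7 * 3) / 11,
    "D": (7 * 4) / 11,
}

# The best estimate is the one with the smallest absolute error
best = min(options, key=lambda k: abs(options[k] - exact))
print(f"exact value is about {exact:.3f}; closest estimate is ({best}) = {options[best]:.2f}")
```

Rounding each number to its nearest integer (7, 4, and 10) is what produces choice (B).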

But Kristof isn’t willing to do either. He has a narrative of American underperformance in mind, and if the overall test results don’t fit his story, he’ll just go and find some results that do! Thus, the examples in his column. Kristof literally went and picked the two questions out of 88 on which the US did the worst, and highlighted those in the column. (He gives a third example too, a question in which the US was in the middle of the pack, but the pack did poorly, so the US’s absolute score looks bad.) And, presto! — instead of a story about kids learning stuff and doing decently on a test, we have yet another hysterical screed about Americans “struggling to compete with citizens of other countries.”
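The tally behind that complaint is easy to reproduce. The sketch below uses only the counts reported above (45, 39, and 4 questions out of 88, across 42 countries); the `bucket` helper is a hypothetical name for splitting ranks into thirds.

```python
COUNTRIES = 42
THIRD = COUNTRIES // 3  # 14 countries per third


def bucket(rank):
    """Classify a 1-based country rank into top, middle, or bottom third."""
    if rank <= THIRD:
        return "top"
    if rank <= 2 * THIRD:
        return "middle"
    return "bottom"


# Number of released questions on which the US landed in each third
tally = {"top": 45, "middle": 39, "bottom": 4}
total = sum(tally.values())

for name, count in tally.items():
    print(f"{name:>6} third: {count:2d} of {total} questions ({count / total:.0%})")

# The two questions highlighted in the column, as a share of all released questions
print(f"cherry-picked share: {2 / total:.1%}")
print(f"overall US rank of 9 falls in the {bucket(9)} third")
```

Seen this way, the column's examples are drawn from a small tail of the released questions rather than from the typical result.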

Kristof gives no suggestions for what we can actually do better, by the way. But he does offer this helpful advice:

Numeracy isn’t a sign of geekiness, but a basic requirement for intelligent discussions of public policy. Without it, politicians routinely get away with using statistics, as Mark Twain supposedly observed, the way a drunk uses a lamppost: for support rather than illumination.

So do op-ed columnists, apparently.

Guest Post: A Discussion Of PARCC Testing

This is a guest post by Eugene Stern, who writes a blog at Sense Made Here, and Kristin Wald, who writes a blog at This Unique* Weblog. Crossposted on their blogs as well.

Today’s post is a discussion of education reform, standardized testing, and PARCC with my friend Kristin Wald, who has been extremely kind to this blog. Kristin taught high school English in the NYC public schools for many years. Today her kids and mine go to school together in Montclair. She has her own blog that gets orders of magnitude more readers than I do.

ES: PARCC testing is beginning in New Jersey this month. There’s been lots of anxiety and confusion in Montclair and elsewhere as parents debate whether to have their kids take the test or opt out. How do you think about it, both as a teacher and as a parent?

KW: My simple answer is that my kids will sit for PARCC. However, and this is where it gets grainy, that doesn’t mean I consider myself a cheerleader for the exam or for the Common Core curriculum in general.

In fact, my initial reaction, a few years ago, was to distance my children from both the Common Core and PARCC. So much so that I wrote to my child’s principal and teacher requesting that no practice tests be administered to him. At that point I had only peripherally heard about the issues and was extending my distaste for No Child Left Behind and, later, Race to the Top. However, despite reading about and discussing the myriad issues, I still believe in change from within and trying the system out to see kinks and wrinkles up-close rather than condemning it full force.

Standards

ES: Why did you dislike NCLB and Race to the Top? What was your experience with them as a teacher?

KW: Back when I taught in NYC, there was wiggle room if students and schools didn’t meet standards. Part of my survival as a teacher was to shut my door and do what I wanted. By the time I left the classroom in 2007 we were being asked to post the standards codes for the New York State Regents Exams around our rooms, similar to posting Common Core standards all around. That made no sense to me. Who was this supposed to be for? Not the students – if they’re gazing around the room they’re not looking at CC RL.9-10 next to an essay hanging on a bulletin board. I also found NCLB naïve in its “every child can learn it all” attitude. I mean, yes, sure, any child can learn. But kids aren’t starting out at the same place or with the same support. And anyone who has experience with children who have not had the proper support up through 11th grade knows they’re not going to do well, or even half-way to well, just because they have a kickass teacher that year.

Regarding my initial aversion to Common Core, especially as a high school English Language Arts teacher, the minimal appearance of fiction and poetry was disheartening. We’d already seen the slant in the NYS Regents Exam since the late 90’s.

However, a couple of years ago, a friend asked me to explain the reason The Bluest Eye, with its abuse and rape scenes, was included in Common Core selections, so I took a closer look. Basically, a right-wing blogger had excerpted lines and scenes from the novel to paint it as “smut” and child pornography, thus condemning the entire Common Core curriculum. My response to my friend ended up as “In Defense of The Bluest Eye.”

That’s when I started looking more closely at the Common Core curriculum. Learning about some of the challenges facing public schools around the country, I had to admit that having a required curriculum didn’t seem like a terrible idea. In fact, in a few cases, the Common Core felt less confining than what they’d had before. And you know, even in NYC, there were English departments that rarely taught women or minority writers. Without a strong leader in a department, there’s such a thing as too much autonomy. Just like a unit in a class, a school and a department should have a focus, a balance.

But your expertise is Mathematics, Eugene. What are your thoughts on the Common Core from that perspective?

ES: They’re a mix. There are aspects of the reforms that I agree with, aspects that I strongly disagree with, and then a bunch of stuff in between.

The main thing I agree with is that learning math should be centered on learning concepts rather than procedures. You should still learn procedures, but with a conceptual underpinning, so you understand what you’re doing. That’s not a new idea: it’s been in the air, and frustrating some parents, for 50 years or more. In the 1960’s, they called it New Math.

Back then, the reforms didn’t go so well because the concepts they were trying to teach were too abstract – too much set theory, in a nutshell, at least in the younger grades. So then there was a retrenchment, back to learning procedures. But these things seem to go in cycles, and now we’re trying to teach concepts better again. This time more flexibly, less abstractly, with more examples. At least that’s the hope, and I share that hope.

I also agree with your point about needing some common standards defining what gets taught at each grade level. You don’t want to be super-prescriptive, but you need to ensure some kind of consistency between schools. Otherwise, what happens when a kid switches schools? Math, especially, is such a cumulative subject that you really need to have some big picture consistency in how you teach it.

Assessment

ES: What I disagree with is the increased emphasis on standardized testing, especially the raised stakes of those tests. I want to see better, more consistent standards and curriculum, but I think that can and should happen without putting this very heavy and punitive assessment mechanism on top of it.

KW: Yes, claiming to want to assess ability (which is a good thing), but then connecting the results to a teacher’s effectiveness in that moment is insincere evaluation. And using a standardized test not created by the teacher with material not covered in class as a hard percentage of a teacher’s evaluation makes little sense. I understand that much of the exam is testing critical thinking, ability to reason and use logic, and so on. It’s not about specific content, and that’s fine. (I really do think that’s fine!) Linking teacher evaluations to it is not.

Students cannot be taught to think critically in six months. As you mentioned about the spiraling back to concepts, those skills need to be revisited again and again in different contexts. And I agree, tests needn’t be the main driver for raising standards and developing curriculum. But they can give a good read on overall strengths and weaknesses. And if PARCC is supposed to be about assessing student strengths and weaknesses, it should be informing adjustments in curriculum.

On a smaller scale, strong teachers and staffs are supposed to work as a team to influence the entire school and district with adjusted curriculum as well. With a wide reach like the Common Core, a worrying issue is that different parts of the USA will have varying needs to meet. Making adjustments for all based on such a wide collection of assessments is counterintuitive. Local districts (and the principals and teachers in them) need to have leeway with applying them to best suit their own students.

Even so, I do like some things about data driven curricula. Teachers and school administrators are some of the most empathetic and caring people there are, but they are still human, and biases exist. Teachers, guidance counselors, administrators can’t help but be affected by personal sympathies and peeves. Having a consistent assessment of skills can be very helpful for those students who sometimes fall through the cracks. Basically, standards: yes. Linking scores to teacher evaluation: no.

ES: Yes, I just don’t get the conventional wisdom that we can only tell that the reforms are working, at both the individual and group level, through standardized test results. It gives us some information, but it’s still just a proxy. A highly imperfect proxy at that, and we need to have lots of others.

I also really like your point that, as you’re rolling out national standards, you need some local assessment to help you see how those national standards are meeting local needs. It’s a safeguard against getting too cookie-cutter.

I think it’s incredibly important that, as you and I talk, we can separate changes we like from changes we don’t. One reason there’s so much noise and confusion now is that everything – standards, curriculum, testing – gets lumped together under “Common Core.” It becomes this giant kitchen sink that’s very hard to talk about in a rational way. Testing especially should be separated out because it’s fundamentally an issue of process, whereas standards and curriculum are really about content.

You take a guy like Cuomo in New York. He’s trying to increase the reliance on standardized tests in teacher evaluations, so that value added models based on test scores count for half of a teacher’s total evaluation. And he says stuff like this: “Everyone will tell you, nationwide, the key to education reform is a teacher evaluation system.” That’s from his State of the State address in January. He doesn’t care about making the content better at all. “Everyone” will tell you! I know for a fact that the people spending all their time figuring out at what grade level kids should start to learn about fractions aren’t going to tell you that!

I couldn’t disagree with that guy more, but I’m not going to argue with him based on whether or not I like the problems my kids are getting in math class. I’m going to point out examples, which he should be well aware of by now, of how badly the models work. That’s a totally different discussion, about what we can model accurately and fairly and what we can’t.

So let’s have that discussion. Starting point: if you want to use test scores to evaluate teachers, you need a model because – I think everyone agrees on this – how kids do on a test depends on much more than how good their teacher was. There’s the talent of the kid, what preparation they got outside their teacher’s classroom, whether they got a good night’s sleep the night before, and a good breakfast, and lots of other things. As well as natural randomness: maybe the reading comprehension section was about DNA, and the kid just read a book about DNA last month. So you need a model to break out the impact of the teacher. And the models we have today, even the most state-of-the-art ones, can give you useful aggregate information, but they just don’t work at that level of detail. I’m saying this as a math person, and the American Statistical Association agrees. I’ve written about this here and here and here and here.

Having student test results impact teacher evaluations is my biggest objection to PARCC, by far.

KW: Yep. Can I just cut and paste what you’ve said? However, for me, another distasteful aspect is how technology is tangled up in the PARCC exam.

Technology

ES: Let me tell you the saddest thing I’ve heard all week. There’s a guy named Dan Meyer, who writes very interesting things about math education, both in his blog and on Twitter. He put out a tweet about a bunch of kids coming into a classroom and collectively groaning when they saw laptops on every desk. And the reason was that they just instinctively assumed they were either about to take a test or do test prep.

That feels like such a collective failure to me. Look, I work in technology, and I’m still optimistic that it’s going to have a positive impact on math education. You can use computers to do experiments, visualize relationships, reinforce concepts by having kids code them up, you name it. The new standards emphasize data analysis and statistics much more than any earlier standards did, and I think that’s a great thing. But using computers primarily as a testing tool is an enormous missed opportunity. It’s like, here’s the most amazing tool human beings have ever invented, and we’re going to use it primarily as a paperweight. And we’re going to waste class time teaching kids exactly how to use it as a paperweight. That’s just so dispiriting.

KW: That’s something that hardly occurred to me. My main objection to hosting the PARCC exam on computers – and giving preparation homework and assignments that MUST be done on a computer – is the unfairness inherent in accessibility. It’s one more way to widen the achievement gap that we are supposed to be minimizing. I wrote about it from one perspective here.

I’m sure there are some students who test better on a computer, but the playing field has to be evenly designed and aggressively offered. Otherwise, a major part of what the PARCC is testing is how accurately and quickly children use a keyboard. And in the aggregate, the group that will have scores negatively impacted will be children with less access to the technology used on the PARCC. That’s not an assessment we need to test to know. When I took the practice tests, I found some questions quite clear, but others were difficult not for content but in maneuvering to create a fraction or other concept. Part of that can be solved through practice and comfort with the technology, but then we return to what we’re actually testing.

ES: Those are both great points. The last thing you want to do is force kids to write math on a computer, because it’s really hard! Math has lots of specialized notation that’s much easier to write with pencil and paper, and learning how to write math and use that notation is a big part of learning the subject. It’s not easy, and you don’t want to put artificial obstacles in kids’ way. I want kids thinking about fractions and exponents and what they mean, and how to write them in a mathematical expression, but not worrying about how to put a numerator above a denominator or do a superscript or make a font smaller on a computer. Plus, why in the world would you limit what kids can express on a test to what they can input on a keyboard? A test is a proxy already, and this limits what it can capture even more.

I believe in using technology in education, but we’ve got the order totally backwards. Don’t introduce the computer as a device to administer tests, introduce it as a tool to help in the classroom. Use it for demos and experiments and illustrating concepts.

As far as access and fairness go, I think that’s another argument for using the computer as a teaching tool rather than a testing tool. If a school is using computers in class, then at least everyone has access in the classroom setting, which is a start. Now you might branch out from there to assignments that require a computer. But if that’s done right, and those assignments grow in an organic way out of what’s happening in the classroom, and they have clear learning value, then the school and the community are also morally obligated to make sure that everyone has access. If you don’t have a computer at home, and you need to do computer-based homework, then we have to get you computer access, after school hours, or at the library, or what have you. And that might actually level the playing field a bit. Whereas now, many computer exercises feel like they’re primarily there to get kids used to the testing medium. There isn’t the same moral imperative to give everybody access to that.

I really want to hear more about your experience with the PARCC practice tests, though. I’ve seen many social media threads about unclear questions, both in a testing context and more generally with the Common Core. It sounds like you didn’t think it was so bad?

KW: Well, “not so bad” in that I am a 45 year old who was really trying to take the practice exam honestly, but didn’t feel stressed about the results. However, I found the questions with fractions confusing in execution on the computer (I almost gave up), and some of the questions really had to be read more than once. Now, granted, I haven’t been exposed to the language and technique of the exam. That matters a lot. In the SAT, for example, if you don’t know the testing language and format it will adversely affect your performance. This is similar to any format of an exam or task, even putting together an IKEA nightstand.

There are mainly two approaches to preparation. Out of fear of failing, some school districts are doing hardcore test preparation – much like SAT preparation classes – to the detriment of content and skill-based learning. Others are not altering their classroom approaches radically; in fact, some teachers and parents have told me they hardly notice a difference. My unscientific observations point to a separation between the two that falls along lines of socioeconomic status. If districts feel like they are on the edge or have a lot to lose (autonomy, funding, jobs), it makes sense that they would be reactionary in dealing with the PARCC exam. Ironically, schools that treat the PARCC like a high-stakes test are the ones losing the most.

Opting Out

KW: Despite my misgivings, I’m not in favor of “opting out” of the test. I understand the frustration that has prompted the push some districts are experiencing, but there have been some compromises in New Jersey. I was glad to see that the NJ Assembly voted to put off using the PARCC results for student placement and teacher evaluations for three years. And I was relieved, though not thrilled, that the percentage of PARCC results to be used in teacher evaluations was lowered to 10% (and now put off). I still think it should not be a part of teacher evaluations, but 10% is an improvement.

Rather than refusing the exam, I’d prefer to see the PARCC in action and compare honest data to school and teacher-generated assessments in order to improve the assessment overall. I believe an objective state or national model is worth having; relying only on teacher-based assessment raises problems of consistency and subjectivity in many areas. And that goes double for areas with deeply disadvantaged students.

ES: Yes, NJ seems to be stepping back from the brink as far as model-driven teacher evaluation goes. I think I feel the same way you do, but if I lived in NY, where Cuomo is trying to bump up the weight of value added models in evaluations to 50%, I might very well be opting out.

Let me illustrate the contrast – NY vs. NJ, more test prep vs. less — with an example. My family is good friends with a family that lived in NYC for many years, and just moved to Montclair a couple months ago. Their older kid is in third grade, which is the grade level where all this testing starts. In their NYC gifted and talented public school, the test was this big, stressful thing, and it was giving the kid all kinds of test anxiety. So the mom was planning to opt out. But when they got to Montclair, the kid’s teacher was much more low key, and telling the kids not to worry. And once it became lower stakes, the kid wanted to take the test! The mom was still ambivalent, but she decided that here was an opportunity for her kid to get used to tests without anxiety, and that was the most important factor for her.

I’m trying to make two points here. One: whether or not you opt out depends on lots of factors, and people’s situations and priorities can be very different. We need to respect that, regardless of which way people end up going. Two: shame on us, as grown ups, for polluting our kids’ education with our anxieties! We need to stop that, and that extends both to the education policies we put in place and how we collectively debate those policies. I guess what I’m saying is: less noise, folks, please.

KW: Does this very long blog post count as noise, Eugene? I wonder how this will be assessed? There are so many other issues – private profits from public education, teacher autonomy in high performing schools, a lack of educational supplies and family support, and so on. But we have to start somewhere with civil and productive discourse, right? So, thank you for having the conversation.

ES: Kristin, I won’t try to predict anyone else’s assessment, but I will keep mine low stakes and say this has been a pleasure!

Guest post: Be more careful with the vagina stats in teaching

This is a guest post by Courtney Gibbons, an assistant professor of mathematics at Hamilton College. You can see her teaching evaluations on ratemyprofessor.com. She would like you to note that she’s been tagged as “hilarious.” Twice.

Lately, my social media has been blowing up with stories about gender bias in higher ed, especially course evaluations. As a 30-something, female math professor, I’m personally invested in this kind of issue. So I’m gratified when I read about well-designed studies that highlight the “vagina tax” in teaching (I didn’t coin this phrase, but I wish I had).

These kinds of studies bring the conversation about bias to the table in a way that academics can understand. We can geek out on experimental design, the fact that the research is peer-reviewed and therefore passes some basic legitimacy tests.

Indeed, the conversation finally moves out of the realm of folklore, where we have “known” for some time that students expect women to be nurturing in addition to managing the class, while men just need to keep class on track.

Let me reiterate: as a young woman in academia, I want deans and chairs and presidents to take these observed phenomena seriously when evaluating their professors. I want to talk to my colleagues and my students about these issues. Eventually, I’d like to “fix” them, or at least game them to my advantage. (Just kidding. I’d rather fix them.)

However, let me speak as a mathematician for a minute here: bad interpretations of data don’t advance the cause. There’s beautiful link-bait out there that justifies its conclusions on the flimsy “hey, look at this chart” understanding of big data. Benjamin M. Schmidt created a really beautiful tool to visualize data he scraped from the website ratemyprofessor.com through a process that he sketches on his blog. The best criticisms and caveats come from Schmidt himself.

What I want to examine is the response to the tool, both in the media and among my colleagues. USAToday, HuffPo, and other sites have linked to it, citing it as yet more evidence to support the folklore: students see men as “geniuses” and women as “bossy.” It looks like they found some screenshots (or took a few) and decided to interpret them as provocatively as possible. After playing with the tool for a few minutes, which wasn’t even hard enough to qualify as sleuthing, I came to a very different conclusion.

If you look at the ratings for “genius” and then break them down further to look at positive and negative reviews separately, the word occurs predominantly in negative reviews. I found a few specific reviews, and they read “you have to be a genius to pass,” or something along those lines.

[Don’t take my word for it — search google for:

rate my professors “you have to be a genius”

and you’ll see how students use the word “genius” in reviews of professors. The first page of hits is pretty much all men.]

Here’s the breakdown for “genius”:

So yes, the data shows that students are using the word “genius” in more evaluations of men than women. But there’s not a lot to conclude from this; we can’t tell from the data if the student is praising the professor or damning him. All we can see is that it’s a word that occurs in negative reviews more often than positive ones. From the data, we don’t even know if it refers to the professor or not.

Similar results occur with “brilliant”:

Now check out “bossy” and negative reviews:

Okay, wow, look at how far to the right those orange dots are… and now look at the x-axis. We’re talking about fewer than 5 uses per million words of text. Not exactly significant compared to some of the other searches you can do.
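To get a feel for what “uses per million words” means, here is a minimal sketch of how such a rate could be computed. It assumes the reviews are available as (group, text) pairs; the helper `uses_per_million` and the toy reviews are made up for illustration and are not Schmidt’s code or data.

```python
from collections import defaultdict

def uses_per_million(reviews, phrase):
    """Return {group: occurrences of `phrase` per million words of review text}."""
    hits = defaultdict(int)
    words = defaultdict(int)
    phrase = phrase.lower()
    for group, text in reviews:
        lowered = text.lower()
        hits[group] += lowered.count(phrase)   # simple substring count
        words[group] += len(lowered.split())   # whitespace-separated word count
    return {g: 1_000_000 * hits[g] / words[g] for g in words if words[g]}

# Made-up toy reviews, purely for illustration.
toy_reviews = [
    ("female", "She is bossy but fair"),
    ("female", "Clear lectures and fair exams"),
    ("male", "You have to be a genius to pass"),
]
rates = uses_per_million(toy_reviews, "bossy")
```

With only a handful of words, a single hit produces an enormous rate, which is exactly why the denominator matters: on a real corpus, a rate under 5 per million means the word is vanishingly rare.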

I thought that the phrase “terrible teacher” was more illuminating, because it’s more likely in reference to the subject of the review, and we’ve got some meaningful occurrences:

And yes, there is a gender imbalance, but it’s not as great as I had feared. I’m more worried about the disciplinary breakdown, actually. Check out math: we have the worst teachers, but we spread it out across genders, with men ranking 187 uses of “terrible teacher” per million words; women score 192. Compare to psychology, where profs receive a score of 110. Ouch.

Who’s doing this reporting, and why aren’t we reading these reports more critically? Journalists, get your shit together and report data responsibly. Academics, be a little more skeptical of stories that simply post screenshots of a chart coupled with inciting prose from conclusions drawn, badly, from hastily scanned data.

Is this tool useless? No. Is it fun to futz around with? Yes.

Is it being reported and understood well? Resounding no!

I think even our students would agree with me: that’s just f*cked up.

Nerd catcalling

This is a guest post by Becky Jaffe.

It has come to my attention that I am a nerd. I take this on good authority from my students, my friends, and, as of this morning, strangers in a coffee shop. I was called a nerd three times today before 10:30 am, while I was standing in line for coffee – which is to say, before I was caffeinated, and therefore utterly defenseless. I asked my accusers for suggestions on how to be less nerdy. Here was their helpful advice:

Guy in coffee shop: “Wear makeup and high heels.”

Another helpful interlocutor: “Use smaller words.”

My student, later in the day: “Care less about ideas.”

A friend: “Think less like NPR and more like C-SPAN.”

What I wish someone had said: “Is that a dictionary in your pocket or are you happy to see me?”

What I learned today is that if I want to avoid being called a nerd, I should be more like Barbie. And I don’t mean the Professor Barbie version, which – get this – does not exist. When I googled “Professor Barbie,” I got “Fashion Professor Barbie.”

So many lessons in gender conformity for one day! This nerd is taking notes.

Guest post: Clustering and predicting NYC taxi activity

This is a guest post by Deepak Subburam, a data scientist who works at Tessellate.

from NYCTaxi.info

Greetings fellow Mathbabers! At Cathy’s invitation, I am writing here about NYCTaxi.info, a public service web app my co-founder and I have developed. It overlays estimated taxi activity on a Google map of your surroundings, shown as the expected number of passenger pickups and dropoffs in the current hour. We modeled these estimates from the recently released 2013 NYC taxi trips dataset comprising 173 million trips, the same dataset that Cathy’s post last week on deanonymization referenced. Our work will not help you stalk your favorite NYC celebrity, but it may guide your search for a taxi and save some commute time. My writeup below will take you through the four broad stages of our work: data extraction and cleaning, clustering, modeling, and visualization.

We extract three columns from the data: the longitude and latitude GPS coordinates of the passenger pickup or dropoff location, and the timestamp. We make no distinction between pickups and dropoffs, since both of these events imply an available taxicab at that location. The data was generally clean, with a very small fraction of a percent of coordinates looking bad, e.g. in the middle of the Hudson River. These coordinate errors get screened out by the clustering step that follows.

We cluster the pickup and dropoff locations into areas of high density, i.e. where many pickups and dropoffs happen, to determine where on the map it is worth making and displaying estimates of taxi activity. We rolled our own algorithm, a variation on heatmap generation, after finding existing clustering algorithms such as K-means unsuitable—we are seeking centroids of areas of high density rather than cluster membership per se. See figure below which shows the cluster centers as identified by our algorithm on a square-mile patch of Manhattan. The axes represent the longitude and latitude of the area; the small blue crosses a random sample of pickups and dropoffs; and the red numbers the identified cluster centers, in descending order of activity.
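For readers who want something concrete, here is a rough sketch of one way to find centers of high density with a heatmap-style grid. This is not our production algorithm; the function name `density_centers`, the grid cell size, and the `min_count` threshold are all assumptions chosen for illustration.

```python
from collections import defaultdict

def density_centers(points, cell=0.001, min_count=3):
    """Bin (lon, lat) points into a grid and return centers of locally densest cells.

    Returns a list of (count, lon, lat) tuples, busiest first.
    """
    cells = defaultdict(list)
    for lon, lat in points:
        key = (int(lon // cell), int(lat // cell))
        cells[key].append((lon, lat))

    centers = []
    for (i, j), members in cells.items():
        n = len(members)
        if n < min_count:
            continue  # sparse cells, including stray GPS errors, are dropped
        # Counts in the eight surrounding cells (0 where a cell is empty).
        neighbors = [
            len(cells.get((i + di, j + dj), ()))
            for di in (-1, 0, 1) for dj in (-1, 0, 1)
            if (di, dj) != (0, 0)
        ]
        if all(n >= m for m in neighbors):  # local maximum; ties are kept
            lon = sum(p[0] for p in members) / n
            lat = sum(p[1] for p in members) / n
            centers.append((n, lon, lat))
    return sorted(centers, reverse=True)
```

Note that the output is a ranked list of centers, not an assignment of every point to a cluster, which is the distinction from K-means drawn above.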

Taxi activity clusters

We then model taxi activity at each cluster. We discretize time into hourly intervals: for each cluster, we sum all pickups and dropoffs that occur each hour in 2013. So our datapoints now are triples of the form [<cluster>, <hour>, <activity>], with <hour> being some hour in 2013 and <activity> being the number of pickups and dropoffs that occurred in hour <hour> in cluster <cluster>. We then regress each <activity> against neighboring clusters’ and neighboring times’ <activity> values. This regression serves to smooth estimates across time and space, smoothing out effects of special events or weather in the prior year that don’t repeat this year. It required some tricky choices on arranging and aligning the various data elements; not technically difficult or maybe even interesting, but nevertheless likely the better part of an hour at a whiteboard to explain. In other words, typical data science. We then extrapolate these predictions to 2014, by mapping each hour in 2014 to the most similar hour in 2013. So we now have, at each cluster location and for each hour in 2014, a prediction of the number of passenger pickups and dropoffs.
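The bookkeeping part of this step can be sketched in a few lines. The code below is illustrative only: `hourly_activity` and `similar_hour_2013` are hypothetical names, and “most similar hour” is assumed here to mean the same weekday and hour of day about a year earlier, ignoring holidays. The regression step is left out.

```python
from collections import Counter
from datetime import datetime, timedelta

def hourly_activity(events):
    """Count events per (cluster, hour). `events` holds (cluster_id, datetime) pairs."""
    counts = Counter()
    for cluster, ts in events:
        hour = ts.replace(minute=0, second=0, microsecond=0)  # truncate to the hour
        counts[(cluster, hour)] += 1
    return counts

def similar_hour_2013(hour_2014):
    """Assumed notion of 'most similar': same weekday and hour, roughly a year back."""
    candidate = hour_2014 - timedelta(weeks=52)  # 364 days keeps the weekday aligned
    if candidate.year != 2013:
        # Only 31 Dec 2014 lands back in 2014; step one more week into 2013.
        candidate -= timedelta(weeks=1)
    return candidate

events = [
    ("A", datetime(2013, 1, 2, 8, 15)),
    ("A", datetime(2013, 1, 2, 8, 50)),
    ("B", datetime(2013, 1, 2, 9, 5)),
]
counts = hourly_activity(events)
```

Shifting by whole weeks rather than by a calendar year is a deliberate choice in this sketch: a Wednesday at 8am looks more like another Wednesday at 8am than like the same calendar date on a different weekday.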

We display these predictions by overlaying them on a Google map at the corresponding cluster locations. We round <activity> to values like 20, 30 to avoid giving users number dyslexia. We color the labels based on these values, using the black body radiation color temperatures for the color scale, as that is one of two color scales where the ordering of change is perceptually intuitive.

If you live in New York, we hope you find NYCTaxi.info useful. Regardless, we look forward to receiving any comments.


Guest post: The dangers of evidence-based sentencing

This is a guest post by Luis Daniel, a research fellow at The GovLab at NYU where he works on issues dealing with tech and policy. He tweets @luisdaniel12. Crossposted at the GovLab.

What is Evidence-based Sentencing?

For several decades, parole and probation departments have been using research-backed assessments to determine the best supervision and treatment strategies for offenders to try and reduce the risk of recidivism. In recent years, state and county justice systems have started to apply these risk and needs assessment tools (RNA’s) to other parts of the criminal process.

Of particular concern is the use of automated tools to determine imprisonment terms. This relatively new practice of applying RNA information into the sentencing process is known as evidence-based sentencing (EBS).

What the Models Do

The different parameters used to determine risk vary by state, and most EBS tools use information that has been central to sentencing schemes for many years, such as an offender’s criminal history. However, an increasing number of states have been utilizing static factors such as gender, age, marital status, education level, employment history, and other demographic information to determine risk and inform sentencing. Especially alarming is the fact that the majority of these risk assessment tools do not take an offender’s particular case into account.

This practice has drawn sharp criticism from Attorney General Eric Holder who says “using static factors from a criminal’s background could perpetuate racial bias in a system that already delivers 20% longer sentences for young black men than for other offenders.” In the annual letter to the US Sentencing Commission, the Attorney General’s Office states that “utilizing such tools for determining prison sentences to be served will have a disparate and adverse impact on offenders from poor communities already struggling with social ills.” Other concerns cite the probable unconstitutionality of using group-based characteristics in risk assessments.

Where the Models Are Used

It is difficult to precisely quantify how many states and counties currently implement these instruments, although at least 20 states have implemented some form of EBS. Some of the states or states with counties that have implemented some sort of EBS (any type of sentencing: parole, imprisonment, etc) are: Pennsylvania, Tennessee, Vermont, Kentucky, Virginia, Arizona, Colorado, California, Idaho, Indiana, Missouri, Nebraska, Ohio, Oregon, Texas, and Wisconsin.

The Role of Race, Education, and Friendship

Overwhelmingly states do not include race in the risk assessments since there seems to be a general consensus that doing so would be unconstitutional. However, even though these tools do not take race into consideration directly, many of the variables used such as economic status, education level, and employment correlate with race. African-Americans and Hispanics are already disproportionately incarcerated and determining sentences based on these variables might cause further racial disparities.

The very socioeconomic characteristics such as income and education level used in risk assessments are the characteristics that are already strong predictors of whether someone will go to prison. For example, high school dropouts are 47 times more likely to be incarcerated than people in their similar age group who received a four-year college degree. It is reasonable to suspect that courts that include education level as a risk predictor will further exacerbate these disparities.

Some states, such as Texas, take into account peer relations and consider associating with other offenders a “salient problem”. Considering that Texas is in 4th place in the rate of people under some sort of correctional control (parole, probation, etc) and that the rate is 1 in 11 for black males in the United States, it is likely that this metric would disproportionately affect African-Americans.

Sonja Starr’s paper

Even so, in some cases, socioeconomic and demographic variables receive significant weight. In her forthcoming paper in the Stanford Law Review, Sonja Starr provides a telling example of how these factors are used in presentence reports. From her paper:

For instance, in Missouri, pre-sentence reports include a score for each defendant on a scale from -8 to 7, where “4-7 is rated ‘good,’ 2-3 is ‘above average,’ 0-1 is ‘average’, -1 to -2 is ‘below average,’ and -3 to -8 is ‘poor.’ Unlike most instruments in use, Missouri’s does not include gender. However, an unemployed high school dropout will score three points worse than an employed high school graduate—potentially making the difference between “good” and “average,” or between “average” and “poor.” Likewise, a defendant under age 22 will score three points worse than a defendant over 45. By comparison, having previously served time in prison is worth one point; having four or more prior misdemeanor convictions that resulted in jail time adds one point (three or fewer adds none); having previously had parole or probation revoked is worth one point; and a prison escape is worth one point. Meanwhile, current crime type and severity receive no weight.
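To see how a three-point swing moves a defendant across categories, the bands quoted above can be written as a small lookup. This is only a sketch of the published score bands; `missouri_rating` is a hypothetical name, and the code says nothing about how Missouri actually computes the underlying score.

```python
def missouri_rating(score):
    """Map a pre-sentence score (-8 to 7) to the label quoted from Starr's paper."""
    if not -8 <= score <= 7:
        raise ValueError("score must be between -8 and 7")
    if score >= 4:
        return "good"
    if score >= 2:
        return "above average"
    if score >= 0:
        return "average"
    if score >= -2:
        return "below average"
    return "poor"

# Two defendants who differ only by the three points at stake above.
employed_graduate = missouri_rating(4)
unemployed_dropout = missouri_rating(4 - 3)
```

Here the first defendant is rated “good” and the second “average”; starting from 0 instead of 4, the same three points move the label from “average” to “poor”.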

Starr argues that such simple point systems may “linearize” a variable’s effect. In the underlying regression models used to calculate risk, some of the variable’s effects do not translate linearly into changes in probability of recidivism, but they are treated as such by the model.
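A small numerical illustration of the linearization concern follows. It assumes the underlying risk model is a logistic regression, which is an assumption made here for the example and is not stated in the sources above. Under that assumption, the same one-unit change in a variable moves the predicted probability by very different amounts depending on where the person starts.

```python
import math

def probability(log_odds):
    """Logistic link: convert log-odds into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-log_odds))

step = 1.0  # the same change in the underlying score in both cases

# Someone who starts at low predicted risk (log-odds of -3, about 5%).
low_risk_jump = probability(-3.0 + step) - probability(-3.0)

# Someone who starts at even odds (log-odds of 0, exactly 50%).
mid_risk_jump = probability(0.0 + step) - probability(0.0)
```

In this toy setup the jump for the second person is roughly three times the jump for the first, yet a point system would award both the same fixed number of points.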

Another criticism Starr makes is that they often make predictions on an individual based on averages of a group. Starr says these predictions can predict with reasonable precision the average recidivism rate for all offenders who share the same characteristics as the defendant, but that does not make it necessarily useful for individual predictions.

The Future of EBS Tools

The Model Penal Code is currently in the process of being revised and is set to include these risk assessment tools in the sentencing process. According to Starr, this is a serious development because it reflects the increased support of these practices and because of the Model Penal Code’s great influence in guiding penal codes in other states. Attorney General Eric Holder has already spoken against the practice, but it will be interesting to see whether his successor will continue this campaign.

Even if EBS can accurately measure risk of recidivism (which is uncertain according to Starr), does that mean that a greater prison sentence will result in fewer future offenses after the offender is released? EBS does not seek to answer this question. Further, if knowing there is a harsh penalty for a particular crime is a deterrent to committing said crime, wouldn’t adding more uncertainty to sentencing (EBS tools are not always transparent and sometimes proprietary) effectively remove this deterrent?

Even though many questions remain unanswered and while several people have been critical of the practice, it seems like there is great support for the use of these instruments. They are especially easy to support when they are overwhelmingly regarded as progressive and scientific, something Starr refutes. While there is certainly a place for data analytics and actuarial methods in the criminal justice system, it is important that such research be applied with the appropriate caution. Or perhaps not at all. Even if the tools had full statistical support, the risk of further exacerbating an already disparate criminal justice system should be enough to halt this practice.

Both Starr and Holder believe there is a strong case to be made that the risk prediction instruments now in use are unconstitutional. But EBS has strong advocates, so it’s a difficult subject. Ultimately, evidence-based sentencing is used to determine a person’s sentencing not based on what the person has done, but who that person is.

Guest post: New Federal Banking Regulations Undermine Obama Infrastructure Stance

This is a guest post by Marc Joffe, a former Senior Director at Moody’s Analytics, who founded Public Sector Credit Solutions in 2011 to educate the public about the risk – or lack of risk – in government securities. Marc published an open source government bond rating tool in 2012 and launched a transparent credit scoring platform for California cities in 2013. Currently, Marc blogs for Bitvore, a company which sifts the internet to provide market intelligence to municipal bond investors.

Obama administration officials frequently talk about the need to improve the nation’s infrastructure. Yet new regulations published by the Federal Reserve, FDIC and OCC run counter to this policy by limiting the market for municipal bonds.

On Wednesday, bank regulators published a new rule requiring large banks to hold a minimum level of high quality liquid assets (HQLAs). This requirement is intended to protect banks during a financial crisis, and thus reduce the risk of a bank failure or government bailout. Just about everyone would agree that that’s a good thing.

The problem is that regulators allow banks to use foreign government securities, corporate bonds and even stocks as HQLAs, but not US municipal bonds. Unless this changes, banks will have to unload their municipal holdings and won’t be able to purchase new state and local government bonds when they’re issued. The new regulation will thereby reduce the demand for bonds needed to finance roads, bridges, airports, schools and other infrastructure projects. Less demand for these bonds will mean higher interest rates.

Municipal bond issuance is already depressed. According to data from SIFMA, total municipal bonds outstanding are lower now than in 2009 – and this is in nominal dollar terms. Scary headlines about Detroit and Puerto Rico, rating agency downgrades and negative pronouncements from market analysts have scared off many investors. Now with banks exiting the market, the premium that local governments have to pay relative to Treasury bonds will likely increase.

If the new rule had limited HQLA’s to just Treasuries, I could have understood it. But since the regulators are letting banks hold assets that are as risky as or even riskier than municipal bonds, I am missing the logic. Consider the following:

- No state has defaulted on a general obligation bond since 1933. Defaults on bonds issued by cities are also extremely rare – affecting about one in one thousand bonds per year. Other classes of municipal bonds have higher default rates, but not radically different from those of corporate bonds.

- Bonds issued by foreign governments can and do default. For example, private investors took a 70% haircut when Greek debt was restructured in 2012.

- Regulators explained their decision to exclude municipal bonds because of thin trading volumes, but this is also the case with corporate bonds. On Tuesday, FINRA reported a total of only 6446 daily corporate bond trades across a universe of perhaps 300,000 issues. So, in other words, the average corporate bond trades less than once per day. Not very liquid.

- Stocks are more liquid, but can lose value very rapidly during a crisis as we saw in 1929, 1987 and again in 2008-2009. Trading in individual stocks can also be halted.
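The corporate bond figures in the list above are worth working through, since “less than once per day” understates the point. A quick back-of-the-envelope calculation, using only the two numbers quoted (and remembering that 300,000 is itself a rough estimate):

```python
trades_per_day = 6446   # FINRA daily corporate bond trades, as quoted above
issues = 300_000        # approximate number of corporate bond issues, as quoted above

trades_per_issue_per_day = trades_per_day / issues
days_between_trades = issues / trades_per_day
```

That works out to about 0.02 trades per issue per day, or one trade every 46 to 47 days for the average issue, assuming trades were spread evenly across issues (in practice they are not, so many issues trade even less often).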

Perhaps the most ironic result of the regulation involves municipal bond insurance. Under the new rules, a bank can purchase bonds or stock issued by Assured Guaranty or MBIA – two major municipal bond insurers – but they can’t buy state and local government bonds insured by those companies. Since these insurance companies would have to pay interest and principal on defaulted municipal securities before they pay interest and dividends to their own investors, their securities are clearly more risky than the insured municipal bonds.

Regulators have expressed a willingness to tweak the new HQLA regulations now that they are in place. I hope this is one area they will reconsider. Mandating that banks hold safe securities is a good thing; now we need a more data-driven definition of just what safe means. By including municipal securities in HQLA, bank regulators can also get on the same page as the rest of the Obama administration.

Guest Post: Bring Back The Slide Rule!

This is a guest post by Gary Cornell, a mathematician, writer, publisher, and recent founder of StemForums.

I was having a wonderful ramen lunch with the mathbabe and, as is all too common when two broad minded Ph.D.’s in math get together, we started talking about the horrible state math education is in for both advanced high school students and undergraduates.

One amusing thing we discovered pretty quickly is that we had independently come up with the same (radical) solution to at least part of the problem: throw out the traditional sequence which goes through first and second year calculus and replace it with a unified probability, statistics, calculus course where the calculus component was only for the smoothest of functions and moreover the applications of calculus are only to statistics and probability. Not only is everything much more practical and easier to motivate in such a course, students would hopefully learn a skill that is essential nowadays: how to separate out statistically good information from the large amount of statistical crap that is out there.

Of course, the downside is that the (interesting) subtleties that come from the proofs, the study of non-smooth functions and, for that matter, all the other stuff interesting to prospective physicists like DiffEQ’s would have to be reserved for different courses. (We also were in agreement that Gonick’s beyond wonderful “Cartoon Guide To Statistics” should be required reading for all the students in these courses, but I digress…)

The real point of this blog post is based on what happened next, but first you have to know I’m more or less one generation older than the mathbabe. This meant I was both able and willing to preface my next point with the words: “You know when I was young, in one way students were much better off because…” Now it is well known that using this phrase to preface a discussion often poisons the discussion, but occasionally, as I hope in this case, some practices from days gone by can, if brought back, help solve some of today’s educational problems.

By the way, and apropos of nothing, there is a cure for people prone to too frequent use of this phrase: go quickly to YouTube and repeatedly make them watch Monty Python’s Four Yorkshiremen until cured:

Anyway, the point I made was that I am a member of the last generation of students who had to use slide rules. Another good reference is: here. Both these references are great and I recommend them. (The latter being more technical.) For those who have never heard of them, in a nutshell, a slide rule is an analog device that uses logarithms under the hood to do (sufficiently accurate in most cases) approximate multiplication, division, roots etc.

The key point is that using a slide rule requires the user to keep track of the “order of magnitude” of the answers, because slide rules only give you four or so significant digits. This meant students of my generation, when taking science and math courses, were continuously exposed to order of magnitude calculations; you just couldn’t escape from having to make them all the time. Students nowadays, not so much. Calculators have made skill at doing order of magnitude calculations (or Fermi calculations as they are often lovingly called) an add-on rather than a baseline skill, and that is a really bad thing. (Actually my belief that bringing back slide rules would be a good thing goes back a ways: when I was a Program Director at the NSF in the 90’s, I actually tried to get someone to submit a proposal which would have been called “On the use of a hand held analog device to improve science and math education!” Didn’t have much luck.)
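For those who like to see the idea in code, here is a toy model of what a slide rule user does when multiplying. It is a sketch under simple assumptions: the function name `slide_rule_multiply` is made up, the “rule” is modeled as logarithms of mantissas between 1 and 10 read to about four significant digits, and the power of ten is the part the human has to carry in their head.

```python
import math

def slide_rule_multiply(a, b, digits=4):
    """Multiply two positive numbers the way a slide rule user would.

    Returns (mantissa, exponent), meaning mantissa * 10**exponent.
    The mantissa is what the rule shows; the exponent is the user's job.
    """
    if a <= 0 or b <= 0:
        raise ValueError("slide rule scales only cover positive numbers")
    exp_a = math.floor(math.log10(a))
    exp_b = math.floor(math.log10(b))
    mant_a, mant_b = a / 10**exp_a, b / 10**exp_b
    # Sliding one log scale along another adds lengths, i.e. adds logarithms.
    log_sum = math.log10(mant_a) + math.log10(mant_b)
    exponent = exp_a + exp_b
    if log_sum >= 1:  # ran off the end of the scale: carry a power of ten
        log_sum -= 1
        exponent += 1
    mantissa = round(10**log_sum, digits - 1)  # limited reading precision
    return mantissa, exponent

mantissa, exponent = slide_rule_multiply(3200, 0.0021)
```

The rule itself only ever sees 3.2 and 2.1; knowing that the answer is about 6.72 and not 672 or 0.0672 is exactly the order of magnitude skill described above.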

Anyway, if you want to try a slide rule out, alas, good vintage slide rules have become collectible and so expensive, because baby boomers like me are buying the ones we couldn’t afford when we were in high school. The nice thing is that there are lots of sites like this one which show you how to make your own.

Finally, while I don’t think they will ever be as much fun as using a slide rule, you could still allow calculators in classrooms.

Why? Because it would be trivial to have a mode in the TI calculator or the Casio calculator that all high school students seem to use, called “significant digits only.” With the right kind of problems this mode would require students to do order of magnitude calculations because they would never be able to enter trailing or leading zeroes and we could easily stick them with problems having a lot of them!
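To make the idea concrete, here is a small Python sketch of what such a mode might do. To be clear, this is a hypothetical mode, not a feature of any TI or Casio calculator I know of, and the function names are made up for illustration. Entries and results are reduced to their significant digits, so the position of the decimal point is left entirely to the student.

```python
from decimal import Decimal

def significant_digits(entry: str) -> str:
    """Digits of a positive decimal entry with the point and the
    leading/trailing zeros removed (interior zeros are kept)."""
    digits = entry.replace(".", "").strip("0")
    return digits or "0"

def sig_mode_multiply(a: str, b: str) -> str:
    """Multiply two positive decimal entries and show only the
    significant digits of the product."""
    product = Decimal(a) * Decimal(b)
    return significant_digits(format(product, "f"))

# Both lines show the same display; the magnitude is the student's job.
print(sig_mode_multiply("0.00032", "45000"))
print(sig_mode_multiply("32", "45"))
```

Both calls display 144, so a student who cannot place the decimal point by an order of magnitude estimate has no way of telling 14.4 from 1440.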

But calculators really bug me in classrooms, and so I can’t resist pointing out one last flaw in their omnipresence: it makes students believe in the possibility of ridiculously high precision results in the real world. After all, nothing they are likely to encounter in their work (and certainly not in their lives) will ever need (or even have) 14 digits of accuracy and, more to the point, when you see a high precision result in the real world, it is likely to be totally bogus when examined under the hood.

A simple mathematical model of congressional geriatric penis pumps

This is a guest post written by Stephanie Yang and reposted from her blog. Stephanie and I went to graduate school at Harvard together. She is now a quantitative analyst living in New York City, and will be joining the data science team at Foursquare next month.

Last week’s hysterical report by the Daily Show’s Samantha Bee on federally funded penis pumps contained a quote which piqued our quantitative interest. Listen carefully at the 4:00 mark, when Ilyse Hogue proclaims authoritatively:

“Statistics show that probably some of our members of Congress have a vested interest in having penis pumps covered by Medicare!”

Ilyse’s wording is vague, and intentionally so. Statistically, a lot of things are “probably” true, and many details are contained in the word “probably”. In this post we present a simple statistical model to clarify what Ilyse means.

First we state our assumptions. We assume that penis pumps are uniformly distributed among male Medicare recipients and that no man has received two pumps. These are relatively mild assumptions. We also assume that what Ilyse refers to as “members of Congress [with] a vested interest in having penis pumps covered by Medicare” specifically means male members of Congress who received a penis pump covered by federal funds. Of course, one could argue that female members of Congress could have a vested interest in penis pumps as well, but we do not want to go there.

Now the number crunching. According to the report, Medicare has spent a total of $172 million supplying penis pumps to recipients, at “360 bucks a pop.” This means a total of 478,000 penis pumps bought from 2006 to 2011.

45% of the current 49,435,610 Medicare recipients are male. In other words, Medicare bought one penis pump for every 46.5 eligible men. Inverting this, we can say that 2.15% of male Medicare recipients received a penis pump.
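For anyone who wants to check the arithmetic, here is a quick Python sketch using only the figures quoted above. The variable names are mine, and the small differences from the numbers in the text come from the post rounding the pump count to 478,000.

```python
total_spent = 172_000_000      # dollars Medicare spent on pumps, per the report
cost_per_pump = 360            # "360 bucks a pop"
recipients = 49_435_610        # current Medicare recipients
male_share = 0.45              # fraction of recipients who are male

pumps = total_spent / cost_per_pump          # about 478,000 pumps
male_recipients = recipients * male_share    # about 22.2 million men
men_per_pump = male_recipients / pumps       # about 46.5 men per pump
pump_rate = pumps / male_recipients          # about 2.15% of eligible men

print(f"pumps bought:  {pumps:,.0f}")
print(f"men per pump:  {men_per_pump:.1f}")
print(f"pump rate:     {pump_rate:.2%}")
```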

There are currently 128 members of congress (32 senators plus 96 representatives) who are males over the age of 65 and therefore Medicare-eligible. The probability that none of them received a federally funded penis pump is:

$$(1 - 0.0215)^{128} \approx 0.062$$

In other words, the chance of at least one member of congress having one of said penis pumps is 93.8%, which is just shy of the 95% confidence that most statisticians agree on as significant. In order to get to 95% confidence, we need a total of 138 male members of congress who are over the age of 65, and this has not happened yet as of 2014. Nevertheless, the estimate is close enough for us to agree with Ilyse that there is probably some member of congress who has one.
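Here is a minimal Python sketch of this calculation, assuming (as the post does) that each eligible man independently has a 2.15% chance of having received a pump. The function and constant names are only for illustration.

```python
import math

P_PUMP = 0.0215   # rounded share of male Medicare recipients with a pump
ELIGIBLE = 128    # Medicare-eligible male members of congress

def prob_at_least_one(n, p=P_PUMP):
    """Probability that at least one of n independent men has a pump."""
    return 1 - (1 - p) ** n

def min_members_for(confidence, p=P_PUMP):
    """Smallest n for which prob_at_least_one(n, p) reaches the confidence level."""
    return math.ceil(math.log(1 - confidence) / math.log(1 - p))

print(f"P(at least one of {ELIGIBLE}): {prob_at_least_one(ELIGIBLE):.1%}")
print(f"members needed for 95%: {min_members_for(0.95)}")
```

The threshold comes from solving (1 - p)^n <= 0.05 for n by taking logarithms.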

Is it possible that there are two or more penis pump recipients in congress? We did notice that Ilyse’s quote refers to plural members of congress. Under the assumptions laid out above, the probability of having at least two federally funded penis pumps in congress is:

$$1 - (1 - 0.0215)^{128} - 128 \cdot 0.0215 \cdot (1 - 0.0215)^{127} \approx 0.764$$

Again, we would say this is probably true, though not nearly with the same amount of confidence as before. In order to reach 95% confidence that there are two or more federally funded congressional penis pumps, we would need roughly 220 Medicare-eligible males in congress, which is unlikely to happen anytime soon.
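The two-or-more case can be sketched the same way in Python, again assuming independence and the rounded 2.15% rate (the names are illustrative). We subtract the chance of no pumps and the chance of exactly one pump from 1, then search for the smallest group that clears 95%.

```python
def prob_at_least_two(n, p):
    """Probability that at least two of n independent men have a pump."""
    none = (1 - p) ** n
    exactly_one = n * p * (1 - p) ** (n - 1)
    return 1 - none - exactly_one

def min_members_for_two(confidence, p):
    """Smallest n for which prob_at_least_two(n, p) reaches the confidence level."""
    n = 2
    while prob_at_least_two(n, p) < confidence:
        n += 1
    return n

p = 0.0215
print(f"P(at least two of 128): {prob_at_least_two(128, p):.1%}")
print(f"members needed for 95%: {min_members_for_two(0.95, p)}")
```

With the rate rounded to 2.15%, this gives about 76% for the current 128 members, and the search stops at 219. The threshold is sensitive to rounding in the rate, so it is best read as "a bit over 200".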

Note: As a corollary to these calculations, I became the first developer in the history of mankind to type the following command: git merge --squash penispump.

Guest rant about rude kids

Today’s guest post was written by Amie, who describes herself as a mom of a 9 and a 14-year-old, mathematician, and bigmouth.

Nota bene: this was originally posted on Facebook as a spontaneous rant. Please don’t misconstrue it as an academic argument.

Time for a rant. I’ll preface this by saying that while my kids are creative, beautiful souls, so are many (perhaps all) children I’ve met, and it would be the height of arrogance to take credit for that as a parent. But one thing my husband and I can take credit for is their good manners, because that took work to develop.

The first phrase I taught my daughter was “thank you,” and it’s been put to good use over the years. I’m also loath to tell other parents what to do, but this is an exception: teach your fucking kids to say “please” and “thank you”. If you are fortunate enough to visit another country, teach them to say “please” and “thank you” in the native language.

After a week in paradise at a Club Med in Mexico, I’m at some kind of breaking point with rude rich people and their spoiled kids. And that includes the Europeans. Maybe especially the Europeans. What is it that when you’re in France everyone’s all “thank you and have a nice day” but when these petit bourgeois assholes come to Cancun they treat Mexicans like nonhumans? My son held the door for a face-lifted Russian lady today who didn’t even say thank you.

Anyway, back to kids: I’m not saying that you should suppress your kids’ natural joie de vivre and boisterous, rambunctious energy (though if that’s what they’re like, please keep them away from adults who are not in the mood for it). Just teach them to treat other people with basic respect and courtesy. That means prompting them to say “please,” “thank you,” and “nice to meet you” when they interact with other people.

Jordan Ellenberg just posted how a huge number of people accepted to the math Ph.D. program at the University of Wisconsin never wrote to tell him that they had accepted other offers. When other people are on a wait list!

Whose fault is this? THE PARENTS’ FAULT. Damn parents. Come on!!

P.S. Those of you who have put in the effort to raise polite kids: believe me, I’ve noticed. So has everyone else.

Ya’ make your own luck, n’est-ce pas?

This is a guest post by Leopold Dilg.

There’s little chance we can underestimate our American virtues, since our overlords so seldom miss an opportunity to point them out. A case in point – in fact, le plus grand du genre, though my fingers tremble as I type that French expression, for reasons I’ll explain soon enough – is the Cadillac commercial that interrupted the broadcast of the Olympics every few minutes.

A masterpiece of casting and directing and location scouting, the ad follows a middle-aged man, muscular enough but not too proud to show a little paunch – manifestly a Master of the Universe – strutting around his chillingly modernist $10 million vacation house (or is it his first or fifth home? no matter), every pore oozing the manly, smirky bearing that sent Republican country-club women swooning over W.

It starts with Our Hero, viewed from the back, staring down his infinity pool. He pivots and stares down the viewer. He shows himself to be one of the more philosophical species of the MotU genus. “Why do we work so hard?” he puzzles. “For this? For stuff?….” We’re thrown off balance: Will this son of Goldman Sachs go all Walden Pond on us? Fat chance.

Now, still barefooted in his shorts and polo shirt, he’s prowling his sleek living room (his two daughters and stay-at-home wife passively reading their magazines and ignoring the camera, props in his world no less than his unused pool and The Car yet to be seen) spitting bile at those foreign pansies who “stop by the café” after work and “take August off!….OFF!” Those French will stop at nothing.

“Why aren’t YOU like that,” he says, again staring us down, and we yield to the intimidation. (Well gee, sir, of course I’m not. Who wants a month off? Not me, absolutely, no way.) “Why aren’t WE like that,” he continues – an irresistible demand for totalizing merger. He’s got us now, we’re goose-stepping around the TV, chanting “USA! USA! No Augusts off! No Augusts off!”

No, he sneers, we’re “crazy, hardworking believers.” But those Frogs – the weaklings who called for a double-check about the WMDs before we Americans blasted Iraqi children to smithereens (woops, someone forgot to tell McDonalds, the official restaurant of the U.S. Olympic team, about the Freedom Fries thing; the offensive French Fries are THERE, right in our faces in the very next commercial, when the athletes bite gold medals and the awe-struck audience bites chicken nuggets, the Lunch of Champions) – might well think we’re “nuts.”

“Whatever,” he shrugs, end of discussion, who cares what they think. “Were the Wright Brothers insane? Bill Gates? Les Paul?… ALI?” He’s got us off-balance again – gee, after all, we DO kinda like Les Paul’s guitar, and we REALLY like Ali.

Of course! Never in a million years would the hip jazz guitarist insist on taking an August holiday. And the imprisoned-for-draft-dodging boxer couldn’t possibly side with the café-loafers on the WMD thing. Gee, or maybe…. But our MotU leaves us no time for stray dissenting thoughts. Throwing lunar dust in our eyes, he discloses that WE were the ones who landed on the moon. “And you know what we got?” Oh my god, that X-ray stare again, I can’t look away. “BORED. So we left.” YEAH, we’re chanting and goose-stepping again, “USA! USA! We got bored! We got bored!”

Gosh, I think maybe I DID see Buzz Aldrin drumming his fingers on the lunar module and looking at his watch. “But…” – he’s now heading into his bedroom, but first another stare, and pointing to the ceiling – “…we got a car up there, and left the keys in it. You know why? Because WE’re the only ones goin’ back up there, THAT’s why.” YES! YES! Of COURSE! HE’S going back to the moon, I’M going back to the moon, YOU’RE going back to the moon, WE’RE ALL going back to the moon. EVERYONE WITH A U.S. PASSPORT is going back to the moon!!

Damn, if only the NASA budget wasn’t cut after all that looting by the Wall Street boys to pay for their $10 million vacation homes, WE’D all be going to get the keys and turn the ignition on the rover that’s been sitting 45 years in the lunar garage waiting for us. But again – he must be reading our mind – he’s leaving us no time for dissent, he pops immediately out of his bedroom in his $12,000 suit, gives us the evil eye again, yanks us from the edge of complaint with a sharp, “But I digress!” and besides he’s got us distracted with the best tailoring we’ve ever seen.

Finally, he’s out in the driveway, making his way to the shiny car that’ll carry him to lower Manhattan. (But where’s the chauffeur? And don’t those MotUs drive Maseratis and Bentleys? Is this guy trying to pull one over on the suburban rubes who buy Cadillacs stupidly thinking they’ve made it to the big time?)

Now the climax: “You work hard, you create your own luck, and you gotta believe anything is possible,” he declaims.

Yes, we believe that! The 17 million unemployed and underemployed, the 47 million who need food stamps to keep from starving, the 8 million families thrown out of their homes – WE ALL BELIEVE. From all the windows in the neighborhood, from all the apartments across Harlem, from Sandy-shattered homes in Brooklyn and Staten Island, from the barren blast furnaces of Bethlehem and Youngstown, from the foreclosed neighborhoods in Detroit and Phoenix, from the 70-year-olds doing Wal-mart inventory because their retirement went bust, from all the kitchens of all the families carrying $1 trillion in college debt, I hear the national chant, “YOU MAKE YOUR OWN LUCK! YOU MAKE YOUR OWN LUCK!”

And finally – the denouement – from the front seat of his car, our Master of the Universe answers the question we’d all but forgotten. “As for all the stuff? That’s the upside of taking only two weeks off in August.” Then the final cold-blooded stare and – too true to be true – a manly wink, the kind of wink that makes us all collaborators and comrades-in-arms, and he inserts the final dagger: “N’est-ce pas?”

N’est-ce pas?

Guest Post: Beauty, even in the teaching of mathematics

This is a guest post by Manya Raman-Sundström.

Mathematical Beauty

If you talk to a mathematician about what she or he does, pretty soon it will surface that one reason for working those long hours on those difficult problems has to do with beauty.

Whatever we mean by that term, whether it is the way things hang together, or the sheer simplicity of a result found in a jungle of complexity, beauty (or aesthetics more generally) is often cited as one of the main rewards for the work, and in some cases the main motivating factor for doing this work. Indeed, the fact that a proof of a known theorem can be published just because it is more elegant is one piece of evidence for this.