
Interview with Bill McCallum, lead writer of Math Common Core

This is an interview I had on 2/4/2014 with Bill McCallum, a University Distinguished Professor of Mathematics at the University of Arizona. Bill also led the Work Team that recently wrote the Mathematics Common Core State Standards, and he graciously agreed to let me interview him about it.

Q: Tell me about how the Common Core State Standards Mathematics Work Team got formed and how you got onto it.

A: There were actually two separate documents and two separate processes, and people often get confused between them.

The first part happened in the summer of 2009 and produced a document called “College and Career Readiness Standards”. It didn’t go grade by grade; rather, it described what a student leaving high school ready for college and career looks like. The team that wrote that precursor document consisted of people from the College Board, ACT, and Achieve, and was organized and managed by CCSSO (which represents state education commissioners and the like) and NGA (which represents governors). Gene Wilhoit, then executive director of CCSSO, led the charge.

I was on that first panel representing Achieve. Achieve does not write assessments, but College Board and ACT do, and I think that’s where the charge that the standards were developed by testing companies comes from. It’s worth noting in the context of that charge that both ACT and College Board are non-profits.

The second part of the process, carried out by the Work Team, took that document and worked backwards to create the actual Common Core State Standards for mathematics. I was the chair of the Work Team and one of the three lead writers, the other two being Jason Zimba and Phil Daro. The other members of the Work Team included many educators, mathematicians, and math education folks, as well as DOE folks, and, importantly, no testing or textbook companies were represented. The full list is here.

Q: Explain what Achieve is and is not.

A: Achieve is not a testing company, so let’s put that to rest.

Without going into too much historical detail (some of which is available here), Achieve was launched by a group of business and education leaders with the goal of improving education. It’s a non-profit think tank, which published benchmarks for mathematics education and tried to get states to align their standards to them.

I started working with Achieve around 2005 and pretty soon I found myself chairing a committee to revise the benchmarks, which is how I got involved in the drafting of the first document I talked about above.

One more word about getting states on board with the Common Core State Standards (CCSS). There were 48 states that had committed to being involved in the writing, but not necessarily to adopting the standards. The states were involved in the review process as the CCSS were being written in 2009-2010. And here by “states” we usually mean teams from the various state Departments of Education, but different states had different team makeups.

For example, Arizona heavily involved teachers, and some other states had their mathematics specialists at the DOE look things over and make comments. The American Federation of Teachers took the review quite seriously, and Jason and I met twice over the weekend to talk to teams of teachers assembled by AFT, listening to comments and making revisions. The National Council of Teachers of Mathematics was also quite involved in reviews and meetings.

Q: What are the goals of CCSS?

A: The goal is educational: to describe the mathematical achievements, skills, and understandings that we want students to have by the time they leave high school, broken down by grades.

It’s important to note at this point that this is not a new idea. Indeed, states have had standards since the early 1990s. But in many cases those standards were pretty unfocused and incoherent. What’s new is that we have common standards, and that they are focused and coherent.

Q: So what’s the difference between standards and tests?

A: Standards are descriptions of what you want students to learn. What you do with them is up to you. Testing is something you do if you want to know if they’ve learned what you wanted them to learn. It’s assessment.

Q: What’s your view on tests?

A: I would say that it doesn’t make sense to have no tests, no assessments. It doesn’t make sense to spend the money we spend on education ($12,000 per student each year) and then not bother to see if it has had an effect. But nobody tests as much as the United States, and it seems quite overdone. This is a legacy of the No Child Left Behind Act, which wrote punitive measures for schools, based on assessments, into law.

From my perspective, education in this country swings between extremes, and right now we are undeniably at the extreme with respect to testing. But I’d like to be clear that standards don’t cause testing.

Q: OK, but it’s undeniable that CC makes testing easier, do you agree?

A: Yes, and isn’t that a good thing? Having common standards also makes good testing easier. I’d also argue that they make it possible to spend less money on testing, and to make testing more centered on what you actually want. It puts more, not less, power into the hands of the consumers of the test. And that’s a good thing.

A word about testing companies. There’s no question that testing companies are trying to grab their share of money for tests. But before, they could get paid for 50 different tests based on 50 different standards. Which is better?

There are two new assessment consortia, groups of states which are developing common assessments based on the standards. The consortia will have more power in the marketplace than individual states had.

I believe that people are conflating two separate issues, which I’d like to pull apart. First, do we do a good job of choosing tests? Second, do the CCSS make that worse?

I believe that the CCSS have the power to make things better, although it’s possible that nobody will take advantage of the “commonness” in CCSS. And I’m not saying I’m not worried: the assessment consortia might do a good job, but they might fail or get caught up in politics. The campaign for teacher accountability is causing fear and anger. I think you are right to be suspicious of VAM (value-added models), for example. But that’s not caused by CCSS. Having common standards gives us power if we use it.

I’d also like to make the point that having common standards helps give power to small players in curriculum publishing. When 50 different states had 50 different standards, the big publishers with huge sales forces were able to send people to every state and adapt books to different standards. But now smallish companies will be able to make something work, prove its worth in Tennessee, and then sell it in California or wherever.

Q: What would you say to the people who might say that we don’t need more tests, we need to address poverty?

A: I’d say that having good standards can help.

Look, we need a good education system, and we need to eliminate poverty. And having good common standards helps the second goal as well as the first. Why do I say that? A lot of what is good about the CCSS is that they are pretty focused, whereas many of the complaints about the old state standards were that they tended to be “mile wide, inch deep,” meaning they were laundry lists of skills that were unfocused and incoherent overall.

We wanted to make something focused, which translated into having fewer things per grade level and doing them right, and making the overall standards a progression which tells a story that makes sense. Good standards, as I believe the CCSS represent, help everybody by providing clear guidance, which particularly helps struggling students and poor schools with less than ideal conditions.

Q: Do CC standards make teachers passive? Are they sufficiently flexible?

A: I don’t even get that.

Here’s the thing: standards are not curriculum.

Curriculum is what teachers actually follow in the classroom. We’ve always had standards, so what changed? Why are we suddenly worried about this new concept which isn’t new at all?

Here’s a legitimate fear: regimented, overly prescriptive curricula that tell you what to do every day, like in France. Fair enough. But standards don’t say you need to have that. They just say what we want students to learn. It’s true that an overzealous implementation of standards could make teachers passive.

Maybe what’s new is that previously most people ignored their state standards and now people are actually paying attention. But that still doesn’t imply boring or rigid curricula.

Q: Are the CCSS “alive”?

A: How living do we want CC standards to be? Countries like Singapore revise standards on a 10-year cycle. After all, we don’t want it to move too quickly, since we need to have time to implement stuff. In fact, I’d argue that instability has been a big problem: there’s always a new fad, a new thing, and people never get a chance to figure out what we’re doing with what we’ve got. We should study what works and what doesn’t. And of course there should be revisions when that makes sense.

Q: Is there anything else you’d like to say?

A: Two things. First, I’d like to stress that people are conflating CCSS and testing, and they’re also conflating CCSS and curriculum. It’d be nice for people to separate their issues.

And one last thing. We as a country don’t understand common anything. We don’t see the advantages of standards. Think about how useful it is to have standards, though. My recent project is a website called Illustrative Mathematics, which could not exist without standards. It’s a national community of teachers figuring out what they need to know, across state lines. That’s neat, and it’s only one of many benefits of having shared standards.

Researching the Common Core

I’m in the middle of researching the Common Core standards for math. So far I’ve watched a Diane Ravitch talk, which I blogged about here, which was interesting but raised more questions than it answered, at least for me.

I’ve also interviewed Bill McCallum this week, who was a lead writer and chair of the Work Team that wrote the Common Core standards for mathematics. I’m still writing up that interview but I should have it done soon.

Next up I plan to interview a long-time teacher and current principal of a Brooklyn-based girls’ school for math and science, Kiri Soares, on her perspective on the Common Core standards and standardized tests in general.

One thing I can already say for sure: people who are not insiders here conflate a bunch of different issues. I’m hoping to at least separate them and understand where people stand on each issue, and if I get to the point of agreeing to disagree on well-defined points, then I will have done my job.

Tell me if you think I need to go further to fully understand the issues at hand. Of course one thing I’m not doing is delving directly into the content of the standards, and that may very well be essential to understanding them. I’d love your thoughts.

Guest Post: Beauty, even in the teaching of mathematics

This is a guest post by Manya Raman-Sundström.

Mathematical Beauty

If you talk to a mathematician about what she or he does, pretty soon it will surface that one reason for working those long hours on those difficult problems has to do with beauty.

Whatever we mean by that term, whether it is the way things hang together or the sheer simplicity of a result found in a jungle of complexity, beauty (or aesthetics more generally) is often cited as one of the main rewards for the work, and in some cases as the main motivating factor for doing it. Indeed, the fact that a proof of a known theorem can be published just because it is more elegant is evidence of this.

Mathematics is beautiful. Any mathematician will tell you that. Then why is it that when we teach mathematics we tend not to bring out the beauty? We would consider it odd to teach music via scales and theory without ever giving children a chance to listen to a symphony. So why do we teach mathematics in bits and pieces without exposing students to the real thing, the full aesthetic experience?

Of course there are marvelous teachers out there who do manage to bring out the beauty and excitement and maybe even the depth of mathematics, but aesthetics is not something we tend to value at a curricular level. The new Common Core Standards that most US states have adopted as their curricular blueprint do not mention beauty as a goal. Neither do the curriculum guidelines of most countries, western or eastern (one exception is Korea).

Mathematics teaching is about achievement, not about aesthetic appreciation, a fact that test-makers are probably grateful for – can you imagine the makeover needed for the SAT if we started to try to measure aesthetic appreciation?

Why Does Beauty Matter?

First, it should be a bit troubling that our mathematics classrooms do not mirror practice. How can young people make wise decisions about whether they should continue to study mathematics if they have never really seen mathematics?

Second, overlooking the aesthetic components of mathematical thought might prevent our children from developing their intellectual capacities.

In the 1970s Seymour Papert, a well-known mathematician and educator, claimed that scientific thought consisted of three components: cognitive, affective, and aesthetic (for some discussion on aesthetics, see here).

At the time, research in education was almost entirely cognitive. In the last couple of decades, the role of affect in thinking has become better understood, and now appears visibly in national curriculum documents. Enjoying mathematics, it turns out, is important for learning it. However, aesthetics is still largely overlooked.

Recently Nathalie Sinclair, of Simon Fraser University, has shown that children can develop aesthetic appreciation, even at a young age, somewhat analogously to mathematicians. But this kind of research is currently very far from making an impact on teaching on a broad scale.

Once one starts to take seriously the aesthetic nature of mathematics, one quickly meets some very tough (but quite interesting!) questions. What do we mean by beauty? How do we characterize it? Is beauty subjective or objective (or neither, or both)? Is beauty something that can be taught, or does it just come to be experienced over time?

These questions, despite their allure, have not been fully explored. Several mathematicians (Hardy, Poincaré, Rota) have speculated, but there is no definite answer even to the question of what characterizes beauty.

Example

To see why these questions might be of interest to anyone but hard-core philosophers, let’s look at an example. Consider the famous question, supposedly answered by Gauss, of the sum of the first n positive integers. Think about your favorite proof of this. Probably the proof that did NOT come to your mind first was a proof by induction:

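Prove that $S(n) = 1 + 2 + 3 + \cdots + n = \frac{n(n+1)}{2}$.

The base case is $S(1) = 1 = \frac{1 \cdot 2}{2}$. Assuming $S(k) = \frac{k(k+1)}{2}$,

$$
\begin{aligned}
S(k+1) &= S(k) + (k+1) \\
&= \frac{k(k+1)}{2} + \frac{2(k+1)}{2} \\
&= \frac{(k+1)(k+2)}{2}.
\end{aligned}
$$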

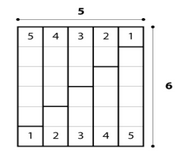

Now compare this proof to another well known one. I will give the picture and leave the details to you:

Does one of these strike you as nicer, or more explanatory, or perhaps even more beautiful than the other? My guess is that you will find the second one more appealing once you see that it is two copies of the staircase $1 + 2 + \cdots + n$ put together, forming a rectangle of area $n(n+1)$, so $S(n) = \frac{n(n+1)}{2}$.

Note: another nice proof of this theorem, of course, is the one where S(n) is written both forwards and backwards and added. That proof also involves a visual component, as well as an algebraic one. See here for this and a few other proofs.
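For readers who like to check such things by computer, here is a minimal Python sketch of the forwards-and-backwards argument (the function name `gauss_pairing_sum` is hypothetical, chosen just for illustration). Writing S(n) forwards and backwards and adding column by column gives n copies of n + 1, so 2S(n) = n(n+1); the sketch builds those columns explicitly and compares the result with the closed form and with a direct sum.

```python
def gauss_pairing_sum(n: int) -> int:
    """Compute 1 + 2 + ... + n by pairing the forward and backward listings."""
    forwards = list(range(1, n + 1))   # 1, 2, ..., n
    backwards = list(range(n, 0, -1))  # n, n-1, ..., 1
    # Each column of the two rows adds up to n + 1.
    columns = [a + b for a, b in zip(forwards, backwards)]
    assert all(c == n + 1 for c in columns)
    # The two rows together contain every term twice, so halve the total.
    return sum(columns) // 2


# Compare with the closed form n(n+1)/2 and with a direct sum.
for n in range(1, 101):
    assert gauss_pairing_sum(n) == n * (n + 1) // 2 == sum(range(1, n + 1))

print(gauss_pairing_sum(100))  # 5050
```

Of course, a computer check of finitely many cases is not a proof; it only confirms that the pairing picture and the formula agree where we looked.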

Beauty vs. Explanation

How often do we, as teachers, stop and think about the aesthetic merits of a proof? What is it, exactly, that makes the explanatory proof more attractive? In what way does the presentation of a proof make the key ideas accessible? Does this accessibility affect our sense of understanding? And what underpins the feeling that one has found exactly the right proof, or exactly the right picture, or exactly the right argument?

Beauty and explanation, while not obviously related (see here), might at least be bedfellows. It may be that what lies at the bottom of explanation, a feeling of understanding or a sense that one can “see” what is going on, is also related to the aesthetic rewards we get when we find a particularly good solution.

Perhaps our minds are drawn to what is easiest to grasp, which brings us back to central questions of teaching and learning: how do we best present mathematics in a way that makes it understandable, clear, and perhaps even beautiful? These questions might all be related.

Workshop on Math Beauty

On March 10–12, 2014, a group will gather in Umeå, Sweden, to discuss this topic. Specifically, we will look at whether mathematical beauty has anything to do with mathematical explanation and, if so, whether the two might have anything to do with visualization.

If this discussion piques your interest at all, you are welcome to check out my blog on math beauty. There you will find a link to the workshop, with a fantastic lineup of philosophers, mathematicians, and mathematics educators who will come together to try to make some progress on these hard questions.

Thanks to Cathy, the always fabulous mathbabe, for letting me take up her space to share the news of this workshop (and perhaps get someone out there excited about this research area). Perhaps she, or you if you have read this far, would be willing to share your own favorite examples of beautiful mathematics. Some examples have already been collected here, please add yours.

Diane Ravitch speaks in Westchester

One thing I learned on the “Public Facing Math” panel at the JMM was that I needed to know more about the Common Core, since so much of the audience was interested in discussing it and since it is a huge factor in the public’s perception of math, both in terms of the high school math curriculum and in terms of the mathematical models associated with assessments. In fact, at that panel I promised to learn more about the Common Core, and I urged other mathematicians in the room to do the same.

As part of my research I listened to a recent lecture that Diane Ravitch gave in Westchester which centered on the Common Core. The video of the lecture is available here.

Diane Ravitch

If you don’t know anything about Diane Ravitch, you should. She’s got a super interesting history in education – she’s an education historian – and in particular has worked high up, as the U.S. Assistant Secretary of Education and on the National Assessment Governing Board, which supervises the National Assessment of Educational Progress.

What’s most interesting about her is that, as a high-ranking person in education, she originally supported the Bush “No Child Left Behind” policy but is now an outspoken opponent of it, as well as of Obama’s “Race to the Top”, which she claims is an extension of the same bad idea.

Ravitch writes a fascinating blog on education issues and, most interesting to me, on assessment issues.

Ravitch in Westchester

Let me summarize her remarks in a free-form and incomplete way. If you want to know exactly what she said and how she said it, watch the video, and feel free to skip the first 16 minutes of introductions.

She doesn’t like the Common Core initiative and mentions that Gates Foundation people, mostly not experienced educators and many of them associated with the testing industry, developed the Common Core standards. So there’s a suspicion right off the bat that the material is overly academic and unrealistic for actual teachers in actual classrooms.

She also objects to the idea of any fixed and untested set of standards. No standard is perfect, and this one is rigid. At the very least, if we need a “one solution for all” kind of standard, it needs to be under constant review and testing and open to revisions – a living document to change with the times and with the needs and limits of classrooms.

So now we have an unrealistic and rigid set of standards, written by outsiders with vested interests, and it’s all for the sake of being able to test everyone to death. She also made some remarks about the crappiness of the Value-Added Model similar to stuff I’ve mentioned in the past.

The Common Core initiative, she explains, exposes an underlying and incorrect mindset: that testing makes kids learn, that more testing makes kids learn faster, and that setting a high bar suddenly makes kids able to jump higher. The Common Core, she says, is that higher bar. But just because you raise standards doesn’t mean people suddenly know more.

In fact, she got a leaked copy of last year’s Common Core test and saw that its 5th grade version is similar to a current 8th grade standardized test. So it’s very much a “raise the bar” setup. And it points to the fact that standardized testing is used as a punishment rather than as a diagnostic.

In other words, if we were interested in finding out who needs help and giving them help, we wouldn’t need harder and harder tests, we’d just look at who is struggling with the current tests and go help them. But because it’s all about punishment, we need to add causality and blame to the environment.

She claims that poverty causes kids to underperform in school, and that blaming teachers for the effects of poverty is a huge distraction and meaningless for those kids. In fact, she asks, what is going to happen to all of those kids who fail to meet the Common Core standards? What is going to become of them if we don’t allow them to graduate? And how do we think we are helping them? Why do we spend so much time developing these fancy tests and assessments instead of figuring out how to help them graduate?

She also points out that the blame game going on in this country is fueled by bad facts.

For example, there is no actual educational emergency in this country. In fact, test scores and graduation rates have never been higher for each racial group. And although we are always made to be afraid of our “international competition” (great recent example of this here), historically we never scored at the top of international rankings. But we didn’t think that meant we weren’t competitive 50 years ago, so why do we suddenly care now?

She provides the answer: if people are convinced there is an emergency in education, then private companies (test prep and testing companies, as well as companies that run charter schools) stand to make big money from our response and from outright privatization.

The statistical argument that poverty causes educational delays is ready to be made. If we want to “fix our educational system” then we need to address poverty, not scapegoat teachers.

How do you define success for a calculus MOOC?

I’m going to strike now, while the conversational iron is hot, and ask people to define success for a calculus MOOC.

Why?

I’ve already mostly explained why in this recent post, but just in case you missed it, I think mathematics is being threatened by calculus MOOCs, and although in some possible futures this wouldn’t be a bad thing, in others it definitely would.

One way it could be a really truly bad thing is if the metric of success were as perverted as we’ve seen happen in high school teaching, where Value-Added Models have no defined metric of success and are tearing up a generation of students and teachers, creating the kind of opaque, confusing, and threatening environment where code errors lead to people getting fired.

And yes, it’s kind of weird to define success in a systematic way given that calculus has been taught in a lot of places for a long time without such a rigid concept. And it’s quite possible that flexibility should be built in to the definition, so as to acknowledge that different contexts need different outcomes.

Let’s keep things as complicated as they need to be to get things right!

The problem with large-scale models is that they are easier to build if you have some fixed definition of success against which to optimize. And if we mathematicians don’t get busy thinking this through, my fear is that administrations will do it for us, and will come up with things based strictly on money and not so much on pedagogy.

So what should we try?

Here’s what I consider to be a critical idea to get started:

- Calculus teachers should start experimenting with teaching calculus in different ways. Do randomized experiments with different calculus sections that meet at comparable times (I say comparable because I’ve noticed that people who show up for 8am sections are typically more motivated students, so don’t pit them against 4pm sections).

- Try out a bunch of different possible definitions of success, including the experience and attitude of the students and the teacher.

- So for example, it could be how students perform on the final, which should be the same for both sections (although to do that fairly you need to make sure the MOOC you’re using covers the material needed for the final).

- Or it could be partly an oral exam or long-form written exam testing whether students have learned to discuss the concepts (keeping in mind that we have to compare the “MOOC” students to the traditionally taught students).

- Design the questions you will ask your students and yourself before the semester begins so as to practice good model design – we don’t want to decide on our metric after the fact. A great way to do this is to keep a blog with your plan carefully described – that will timestamp the plan and allow others to comment.

- Of course there’s more than one way to incorporate MOOCs in the curriculum, so I’d suggest more than one experiment.

- And of course the success of the experiment will also depend on the teaching style of the calc prof.

- Finally, share your results with the world so we can all start thinking in terms of what works and for whom.
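To make the randomized-sections idea a bit more concrete, here is a minimal Python sketch of one possible way to compare final-exam scores from a MOOC-based section and a traditionally taught section: a two-sided permutation test on the difference in mean scores. The choice of test, the function name `permutation_test`, and the scores below are all hypothetical placeholders, not real data; in the spirit of the advice above, the metric (here, mean final-exam score) would be fixed before the semester starts.

```python
import random
from statistics import mean


def permutation_test(a: list[float], b: list[float], trials: int = 10_000, seed: int = 0) -> float:
    """Two-sided permutation test for a difference in means between two sections.

    Returns the fraction of random relabelings of the pooled scores whose absolute
    difference in means is at least as large as the observed one (an estimated p-value).
    """
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    observed = abs(mean(a) - mean(b))
    pooled = a + b  # a new list, so shuffling does not touch the inputs
    hits = 0
    for _ in range(trials):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            hits += 1
    return hits / trials


# Hypothetical placeholder scores, for illustration only.
mooc_section = [72, 85, 64, 90, 78, 69, 81]
lecture_section = [75, 88, 70, 92, 80, 74, 83]
print(permutation_test(mooc_section, lecture_section))
```

A permutation test is used here only because it makes few assumptions about how scores are distributed; with real sections you would also want to think about class size, which students chose which time slot, and the other success measures listed above.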

One last comment. One might complain that, if we do this, we’re actually hastening our own deaths by accelerating the adoption of MOOCs in the classroom. But I think it’s important that we take control before someone else does.

If it’s hocus pocus then it’s not math

A few days ago there was a kerfuffle over this “numberphile” video, which Phil Plait blogged about in Slate here in his “Bad Astronomy” column, with a follow-up post here containing an apology and a great quote from my friend Jordan Ellenberg.

The original video is hideous and should never have gotten attention in the first place. I say that not because the subject couldn’t have been done well (it could have, for sure) but because it was done so poorly that it ends up being destructive to the public’s most basic understanding of math, and in particular of positive versus negative numbers. My least favorite line from the crappy video:

I was trying to come up with an intuitive reason for this and I just couldn’t. You have to do the mathematical hocus pocus to see it.

What??

Anything that is hocus pocus isn’t actually math. And people who don’t understand that shouldn’t be making math videos for public consumption, especially ones that have MSRI’s logo on them and get written up in Slate. Yuck!

I’m not going to just vent about the cultural context, though; I’m going to mention what the actual mathematical object of study was in this video. Namely, it’s an argument that purports to “prove” the following identity:

$$1 + 2 + 3 + 4 + \cdots = -\frac{1}{12}$$

Wait, how can that be? Isn’t the left hand side positive and the right hand side negative?!

This mathematical argument is familiar to me; in fact it is very much along the lines of stuff we sometimes cover at HCSSiM, the math summer program where I teach (see my notes from 2012 here). But at HCSSiM we do it quite differently. Specifically, we use it as a demonstration of flawed mathematical thinking. Then we take note and make sure we’re more careful in the future.

If you watch the video, you will see the flaw almost immediately. Namely, it starts with the question of what the value is of the infinite sum

$$1 - 1 + 1 - 1 + 1 - \cdots$$

But here’s the thing: that doesn’t actually have a value. That is, it doesn’t have a value until you assign it one, which you can do, but then you absolutely, positively must explain how you’ve done so. Instead of giving that explanation, the guy in the video just acts like it’s obvious and uses that “fact,” along with a bunch of super careless moving around of terms in infinite sums, to infer the above outrageous identity.

To be clear, sometimes infinite sums do have pretty intuitive and reasonable values (even though you should be careful to acknowledge that they too are assigned rather than “true”). For example, any geometric series where each successive term gets smaller has an actual “converging sum”. The canonical example is the following:

$$\frac{1}{2} + \frac{1}{4} + \frac{1}{8} + \frac{1}{16} + \cdots = 1$$

What’s nice about this sum is that it is naively plausible. Our intuition from elementary school is corroborated when we think about eating half a cake, then another quarter, and then half of what’s left, and so on, and it makes sense to us that, if we did that forever (or if we did that increasingly quickly) we’d end up eating the whole cake.

This concept has a name, convergence, and it jibes with our sense of what would happen “if we kept doing stuff forever (again at possibly increasing speed).” The amounts we’ve measured on the way to forever are called partial sums, and we make sure they converge to the answer. In the example above the partial sums are $\frac{1}{2}, \frac{3}{4}, \frac{7}{8}, \frac{15}{16}$, and so on, and they definitely converge to 1.

There’s a mathematical way of defining convergence of series like this that the geometric series satisfies but that the series $1 - 1 + 1 - 1 + \cdots$ does not. Namely, you guess the answer, and to check that you’ve got the right one, you make sure that all of the partial sums are very, very close to that answer if you go far enough, for any definition of “very, very close.”

So if you want it to get within 0.00001, there’s a number N so that, after the Nth partial sum, all partial sums are within 0.00001 of the answer. And so on.
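Here is a minimal Python sketch of that test for the cake series 1/2 + 1/4 + 1/8 + ..., using exact fractions so rounding doesn’t muddy things (the function names are hypothetical). Since the Nth partial sum is 1 - 1/2^N, the gap to 1 only shrinks, so it is enough to find the first partial sum that lands within the tolerance; every later one is then within it too.

```python
from fractions import Fraction


def geometric_partial_sums(count: int) -> list[Fraction]:
    """Exact partial sums of 1/2 + 1/4 + 1/8 + ... (the first `count` of them)."""
    sums, total, term = [], Fraction(0), Fraction(1, 2)
    for _ in range(count):
        total += term
        sums.append(total)
        term /= 2  # each term is half the previous one
    return sums


def first_index_within(eps: Fraction) -> int:
    """Smallest N whose Nth partial sum of 1/2 + 1/4 + ... is within eps of 1.

    The Nth partial sum is 1 - 1/2**N, so the gap to 1 only shrinks as N grows:
    once one partial sum is within eps, every later one is too.
    """
    n, total, term = 0, Fraction(0), Fraction(1, 2)
    while 1 - total >= eps:
        n += 1
        total += term
        term /= 2
    return n


print(geometric_partial_sums(4))  # [Fraction(1, 2), Fraction(3, 4), Fraction(7, 8), Fraction(15, 16)]
print(first_index_within(Fraction(1, 100000)))  # 17
```

So for the tolerance 0.00001, the gap 1/2^N first drops below it at N = 17 (since 2^17 = 131072 exceeds 100000), and it stays below it from then on.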

Notice that if you take the partial sums of the series $1 - 1 + 1 - 1 + \cdots$ you get the sequence

$$1, 0, 1, 0, 1, 0, \ldots$$

which doesn’t get closer and closer to anything. That’s another way of saying that there is no naively plausible value for this infinite sum.

As for the first infinite sum we came across, $1 + 2 + 3 + 4 + \cdots$, that does have a naively plausible value, which we call “infinity.” Totally cool, and satisfying to the intuition you worked so hard to develop in high school.
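A quick way to see both behaviors is to list a few partial sums. This minimal Python sketch uses only the standard `itertools` module: the partial sums of 1 - 1 + 1 - 1 + ... keep bouncing between 1 and 0, so no single number has all later partial sums within, say, 0.1 of it, while the partial sums of 1 + 2 + 3 + 4 + ... grow without bound.

```python
from itertools import accumulate, count, cycle, islice

# Partial sums of 1 - 1 + 1 - 1 + ...: the terms alternate forever.
grandi_partials = list(islice(accumulate(cycle([1, -1])), 8))

# Partial sums of 1 + 2 + 3 + 4 + ...: count(1) yields 1, 2, 3, ...
natural_partials = list(islice(accumulate(count(1)), 8))

print(grandi_partials)   # [1, 0, 1, 0, 1, 0, 1, 0]
print(natural_partials)  # [1, 3, 6, 10, 15, 21, 28, 36]
```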

But here’s the thing. Mathematicians are pretty clever, so they haven’t stopped there, and they’ve assigned a value to the infinite sum $1 - 1 + 1 - 1 + \cdots$ in spite of these pesky intuition issues, namely

$$1 - 1 + 1 - 1 + \cdots = \frac{1}{2},$$

and in a weird mathematical universe of their construction, which is wildly useful in some contexts, that value is internally consistent with other crazy-ass things. One of those other crazy-ass things is the original identity

$$1 + 2 + 3 + 4 + \cdots = -\frac{1}{12}.$$

[Note: what would be really cool is if a mathematician made a video explaining the crazy-ass universe and why it’s useful and in what contexts. This might be hard and it’s not my expertise but I for one would love to watch that video.]

That doesn’t mean the identity is “true” in any intuitively plausible sense of the word. It means that mathematicians are scrappy.
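To give one concrete flavor of what “assigning” a value means, here is a minimal Python sketch of Cesàro summation, one standard method that assigns 1/2 to 1 - 1 + 1 - 1 + ...: instead of looking at the partial sums themselves, you average the first N partial sums and see whether those averages converge. This is an illustrative choice, not necessarily the construction used in the video; the value -1/12 for 1 + 2 + 3 + 4 + ... comes from a different, stronger method (such as zeta-function regularization), and Cesàro averaging does not produce it (for that series the averages still grow without bound). The function name `cesaro_means` is hypothetical.

```python
from fractions import Fraction
from itertools import accumulate, cycle, islice


def cesaro_means(terms, n: int) -> list[Fraction]:
    """Averages of the first 1, 2, ..., n partial sums of a series."""
    partials = islice(accumulate(terms), n)  # partial sums s_1, ..., s_n
    # Running totals of the partial sums, divided by how many there are.
    return [Fraction(total, k) for k, total in enumerate(accumulate(partials), start=1)]


means = cesaro_means(cycle([1, -1]), 1000)
print(means[:4])         # [Fraction(1, 1), Fraction(1, 2), Fraction(2, 3), Fraction(1, 2)]
print(float(means[-1]))  # 0.5
```

The point is the one made above: the 1/2 here is the output of a rule someone chose, and it only deserves to be called “the value” once you say which rule you used.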

Now here’s my last point, and it’s the only place I disagree somewhat (I think) with Jordan in his tweets. Namely, I really do think that the intuitive definition is qualitatively different from what I’ve termed the “crazy-ass” definition. Maybe not in a context where you’re talking to other mathematicians, and everyone is sufficiently sophisticated to know what’s going on, but definitely in the context of explaining math to the public where you can rely on number sense and (hopefully!) a strong intuition that positive numbers can’t suddenly become negative numbers.

Specifically, if you can’t make any sense of it, intuitive or otherwise, and if you have to ascribe it to “mathematical hocus pocus,” then you’re definitely doing something wrong. Please stop.

The coming Calculus MOOC Revolution and the end of math research

I don’t usually like to sound like a doomsayer but today I’m going to make an exception. I’m going to describe an effect that I believe will be present, even if it’s not as strong as I am suggesting it might be. There are three points to my post today.

1) Math research is a byproduct of calculus teaching

I’ve said it before: calculus (and pre-calculus, and linear algebra) might be a thorn in many math teachers’ sides, and boring to teach over and over again, but it’s the bread and butter of math departments. I’ve heard it claimed that 85% of students who take any math class at a given college take only calculus.

Math research is essentially funded through these teaching jobs. This is less true for the super elite institutions which might have their own army of calculus adjuncts and have separate sources of funding both from NSF-like entities and private entities, but if you take the group of people I just saw at JMM you have a bunch of people who essentially depend on their take-home salary to do research, and their take-home salary depends on lots of students at their school taking service courses.

I wish I had a graph comparing the number of students enrolled in calculus each year with the number of papers published in math journals each year. That would be a great graphic to have, and I think it would make my point.

2) Calculus MOOCs and other web tools are going to start replacing calculus teaching very soon and at a large scale

It’s already happening at Penn through Coursera. Word on the street is it is about to happen at MIT through EdX.

If this isn’t feasible right now, it will be soon. Right now the average calculus class might be better than the best MOOC, especially if you consider asking questions and getting a human response. But as the calculus version of MathOverflow springs into existence, with a record of every question and every answer provided, it will become less and less important to have a Ph.D. mathematician present.

Which isn’t to say we won’t need a person at all – we might well need someone. But chances are they won’t be tenured, and chances are they could be overseas in a call center.

This is not really a bad thing in theory, at least for the students, as long as they actually learn the stuff (as compared to now). Once the appropriate tools have been written and deployed and populated, the students may be better off and happier. They will very likely be more adept at finding correct answers for their calculus questions online, which may be a way of evaluating success (although not mine).

It’s called progress, and machines have been doing it for more than a hundred years, replacing skilled craftspeople. It hurts at first but then the world adjusts. And after all, lots of people complain now about teaching boring classes, and they will get relief. But then again many of them will have to find other jobs.

Colleges might take a hit from parents about how expensive they are and how they’re just getting the kids to learn via computer. And maybe they will actually lower tuition, but my guess is they’ll come up with something else they are offering that makes up for it which will have nothing to do with the math department.

3) Math researchers will be severely reduced if nothing is done

Let’s put those two things together, and what we see is that math research, which we’ve basically been getting for free all this time, as a byproduct of calculus, will be severely curtailed. Not at the small elite institutions that don’t mind paying for it, but at the rest of the country. That’s a lot of research. In terms of scale, my guess is that the average faculty will be reduced by more than 50%, and some faculties will be closed altogether.

Why isn’t anything being done? Why do mathematicians seem so asleep at this wheel? Why aren’t they making the case that math research is vital to a long-term functioning society?

My theory is that mathematicians haven’t been promoting their work for the simple reason that they haven’t had to, because they had this cash cow called calculus which many of them aren’t even aware of as a great thing (because close up it’s often a pain).

It’s possible that mathematicians don’t even know how to promote math to the general public, at least right now. But I’m thinking that’s going to change. We’re going to think about it pretty hard and learn how to promote math research very soon, or else we’re going back to 1850 levels of math research, where everyone knew each other and stuff was done by letter.

How worried am I about this?

For my friends with tenure, not so worried, except if their entire department is at risk. But for my younger friends who are interested in going to grad school now, I’m not writing them letters of recommendation before having this talk, because they’ll be looking around for tenured positions in about 10 years, and that’s the time scale at which I think math departments will be shrinking instead of expanding.

In terms of math PR, I’m also pretty worried, but not hopeless. I think one can really make the case that basic math research should be supported and expanded, but it’s going to take a lot of things going right and a lot of people willing to put time and organizing skills into the effort for it to work. And hopefully it will be a community effort and not controlled by a few billionaires.

JMM

It occurs to me, as I prepare to join my panel this afternoon on Public Facing Math, that I’ve been to more Joint Math Meetings in the 7 years since I left academic math (3) than I did in the 17 years I was actually in math (2). I include my undergraduate years in that count because when I was a junior in college I went to Vancouver for the JMM and I met Cora Sadosky, which was probably my favorite conference ever.

Anyhoo I’m on my way to one of the highlights of any JMM, the HCSSiM breakfast, where we hang out with students and teachers from summers long ago and where I do my best to convince the director Kelly and myself that I should come back next summer to teach again. Then after that I spend 4 months at home convincing my family that it’s a great plan. Woohoo!

Besides the above plan, I plan to meet people in the hallways and gossip. That’s all I have ever accomplished here. I hope it is the official mission of the conference, but I’m not sure.

How do I know if I’m good enough to go into math?

Hi Cathy,

I met you this past summer, you may not remember me. I have a question.

I know a lot of people who know much more math than I do and who figure out solutions to problems more quickly than me. Whenever I come up with a solution to a problem that I’m really proud of and that I worked really hard on, they talk about how they’ve seen that problem before and all the stuff they know about it. How do I know if I’m good enough to go into math?

Thanks,

High School Kid

Dear High School Kid,

Great question, and I’m glad I can answer it, because I had almost the same experience when I was in high school and I didn’t have anyone to ask. And if you don’t mind, I’m going to answer it for anyone who reads my blog, just in case there are other young people wondering this, and especially girls, but of course not only girls.

Here’s the thing. There’s always someone faster than you. And it feels bad, especially when you feel slow, and especially when that person cares about being fast, because all of a sudden, in your confusion about all sorts of things, speed seems important. But it’s not a race. Mathematics is patient and doesn’t mind. Think of your slowness, or lack of quickness, as a matter of style, not as a shortcoming.

Why style? Over the years I’ve found that slow mathematicians have a different thing to offer than fast mathematicians, although there are exceptions (Bjorn Poonen comes to mind, who is fast but thinks things through like a slow mathematician. Love that guy). I totally didn’t define this but I think it’s true, and other mathematicians, weigh in please.

One thing that’s incredibly annoying about this concept of “fastness” when it comes to solving math problems is that, as a high school kid, you’re surrounded by math competitions, which all kind of suck. They make it seem like, to be “good” at math, you have to be fast. That’s really just not true once you grow up and start doing grownup math.

In reality, being good at math is mostly about how much you want to spend your time doing math. And I guess it’s true that if you’re slower you have to want to spend more time doing math, but if you love doing math then that’s totally fine. Plus, thinking about things overnight always helps me. So sleeping on math counts as time spent doing math.

[As an aside, I have figured things out so often in my sleep that it’s become my preferred way of working on problems. I often wonder if there’s a “math part” of my brain which I don’t have normal access to but which furiously works on questions during the night. That is, if I’ve spent the requisite time during the day trying to figure it out. In any case, when it works, I wake up the next morning just simply knowing the proof and it actually seems obvious. It’s just like magic.]

So here’s my advice to you, high school kid. Ignore your surroundings, ignore the math competitions, and especially ignore the annoying kids who care about doing fast math. They will slowly recede as you go to college and as high school algebra gives way to college algebra and then Galois Theory. As the math gets awesomer, the speed gets slower.

And in terms of your identity, let yourself fancy yourself a mathematician, or an astronaut, or an engineer, or whatever, because you don’t have to know exactly what it’ll be yet. But promise me you’ll take some math major courses, some real ones like Galois Theory (take Galois Theory!), and for goodness’ sake don’t close off any options because of some false definition of “good at math” or because some dude (or possibly dudette) needs to care about knowing everything quickly. Believe me, as you know more you will realize more and more how little you know.

One last thing. Math is not a competitive sport. It’s one of the only existing truly crowd-sourced projects of society, and that makes it highly collaborative and community-oriented, even if the awards and prizes and media narratives about “precocious geniuses” would have you believe the opposite. And once again, it’s been around a long time, and it will patiently wait for you to add to it when you have the love and time and will to do so.

Love,

Cathy

The case against algebra II

There’s an interesting debate described in this essay, Wrong Answer: the case against Algebra II, by Nicholson Baker (hat tip Nicholas Evangelos), around making algebra II a requirement for going to college. I’ll do my best to summarize the positions briefly. I’m making some of the pro-side up since it wasn’t well-articulated in the article.

On the pro-algebra side, we have the argument that learning algebra II promotes abstract thinking. It’s the first time you go from thinking about ratios of integers to ratios of polynomial functions, and where you consider the geometric properties of these generalized fractions. It is a convenient litmus test for even more abstraction: sure, it’s kind of abstract, but on the other hand you can also for the most part draw pictures of what’s going on, to keep things concrete. In that sense you might see it as a launching pad for the world of truly abstract geometric concepts.

Plus, doing well in algebra II is a signal for doing well in college and in later life. Plus, if we remove it as a requirement we might as well admit we’re dumbing down college: we’re giving the message that you can be a college graduate even if you can’t do math beyond adding fractions. And if that’s what college means, why have college? What happened to standards? And how is this preparing our young people to be competitive on a national or international scale?

On the anti-algebra side, we see a lot of empathy for struggling and suffering students. We see that raising so-called standards only gives them more suffering but no more understanding or clarity. And although we’re not sure if that’s because the subject is taught badly or because the subject is inherently unappealing or unattainable, it’s clear that wishful thinking won’t close this gap.

Plus, of course doing well in algebra II is a signal for doing well in college; it’s a freaking prerequisite for going to college. We might as well have embroidery as a prerequisite and then be impressed by all the beautiful piano stool covers that result. Finally, the standards aren’t going up just because we’re training a new generation in how to game a standardized test in an abstract rote-memorization skill of formulas and rules. It’s more like measuring students’ capacity for drudgery.

OK, so now I’m going to make comments.

While it’s certainly true that, in the best of situations, the content of algebra II promotes abstract and logical thinking, it’s easy for me to believe, based on my very limited experience in the matter, that it’s much more often taught poorly, and the students are expected to memorize formulas and rules. This makes it easier to test but doesn’t add to anyone’s love for math, including people who actually love math.

Speaking of my experience, it’s an important issue. Keep in mind that asking the population of mathematicians what they think of removing a high school class is asking for trouble. This is a group of people who pretty much across the board didn’t have any problems whatsoever with the class in question and sailed through it, possibly with a teacher dedicated to teaching honors students. They likely can’t remember much about their experience, and if they can it probably wasn’t bad.

Plus, removing a math requirement, any math requirement, will seem to a mathematician like an indictment of their field as not as important as it used to be to the world, which is always a bad thing. In other words, even if someone’s job isn’t directly on the line with this issue of algebra II, which it undoubtedly is for thousands of math teachers and college teachers, then even so it’s got a slippery slope feel, and pretty soon we’re going to have math departments shrinking over this.

In other words, it shouldn’t surprise anyone that we have defensive and unsympathetic mathematicians on one side who cannot understand the arguments of the empathizers on the other side.

Of course, it’s always a difficult decision to remove a requirement. It’s much easier to make the case for a new one than to take one away, except of course for the students who have to work for the ensuing credentials.

And another thing: not so long ago we’d hear people say that women don’t need education at all, or that peasants don’t need to know how to read. Saying that a basic math course should become an elective kind of smells like that too, if you want to get histrionic about things.

For myself, I’m willing to get rid of all of it, all the math classes ever taught, at least as a thought experiment, and then put shit back that we think actually adds value. So I still think we all need to know our multiplication tables and basic arithmetic, and even basic algebra so we can deal with an unknown or two. But from then on it’s all up in the air. Abstract reasoning is great, but it can be done in context just as well as in geometry class.

And, coming as I now do from data science, I don’t see why statistics is never taught in high school (at least in mine it wasn’t; please correct me if I’m wrong). It seems pretty clear we can chuck trigonometry out the window, and focus on getting the average high school student up to a level of scientific literacy where she can read a paper in a medical journal and understand what the experiment was and what the results mean. Or at the very least be able to read media reports of the studies and have some sense of statistical significance. That’d be a pretty cool goal, to get people to be able to read the newspaper.

So sure, get rid of algebra II, but don’t stop there. Think about what is actually useful and interesting and mathematical and see if we can’t improve things beyond just removing one crappy class.

MAA Distinguished Lecture Series: Start Your Own Netflix

I’m on my way to D.C. today to give an alleged “distinguished lecture” to a group of mathematics enthusiasts. I misspoke in a previous post where I characterized the audience to consist of math teachers. In fact, I’ve been told it will consist primarily of people with some mathematical background, with typically a handful of high school teachers, a few interested members of the public, and a number of high school and college students included in the group.

So I’m going to try my best to explain three different ways of approaching recommendation engine building for services such as Netflix. I’ll be giving high-level descriptions of a latent factor model (this movie is violent and we’ve noticed you like violent movies), of the co-visitation model (lots of people who’ve seen stuff you’ve seen also saw this movie) and the latent topic model (we’ve noticed you like movies about the Hungarian 1956 Revolution). Then I’m going to give some indication of the issues in doing these massive-scale calculations and how they can be worked out.
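For the curious, here’s a toy sketch of two of those ideas. The co-visitation part counts how often pairs of movies show up in the same user’s history and recommends unseen movies that co-occur most with what you’ve already watched; the latent factor part scores a movie as the dot product of a user’s taste vector and the movie’s trait vector. The viewing histories, factor names, and function names are all made up for illustration, and nothing here is Netflix’s actual method. A real system at that scale would compute these counts in a distributed way rather than in one in-memory dictionary.

```python
from collections import Counter
from itertools import combinations


def covisitation_counts(histories):
    """For each ordered pair of movies, count the users who watched both."""
    counts = Counter()
    for watched in histories.values():
        for a, b in combinations(sorted(set(watched)), 2):
            counts[(a, b)] += 1
            counts[(b, a)] += 1
    return counts


def recommend_covisited(user, histories, k=3):
    """Rank unseen movies by how often they co-occur with the user's movies."""
    seen = set(histories[user])
    scores = Counter()
    for (a, b), count in covisitation_counts(histories).items():
        if a in seen and b not in seen:
            scores[b] += count
    return [movie for movie, _ in scores.most_common(k)]


def latent_factor_score(user_taste, movie_traits):
    """Predicted affinity: dot product of user and movie factor vectors."""
    return sum(u * m for u, m in zip(user_taste, movie_traits))


# Hypothetical toy viewing histories.
histories = {
    "ann": ["A", "B"],
    "bob": ["A", "B", "C"],
    "cat": ["A", "C"],
    "dan": ["B", "D"],
}
print(recommend_covisited("ann", histories))  # ['C', 'D']

# Hypothetical factors: (violence, romance).
print(latent_factor_score((0.9, 0.1), (0.8, 0.2)))  # roughly 0.74
```

Movie C wins for ann because it co-occurs with A twice and with B once, while D co-occurs only with B once.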

And yes, I double-checked with those guys over at Netflix, I am allowed to use their name as long as I make sure people know there’s no affiliation.

In addition to the actual lecture, the MAA is having me give a 10-minute TED-like talk for their website as well as an interview. I am psyched by how easy it is to prepare my slides for that short version using prezi, since I just removed a bunch of nodes on the path of the material without removing the material itself. I will make that short version available when it comes online, and I also plan to share the longer prezi publicly.

[As an aside, and not to sound like an advertiser for prezi (no affiliation with them either!), but they have a free version and the resulting slides are pretty cool. If you want to be able to keep your prezis private you have to pay, but not as much as you’d need to pay for powerpoint. Of course there’s always Open Office.]

Train reading: Wrong Answer: the case against Algebra II, by Nicholson Baker, which was handed to me emphatically by my friend Nick. Apparently I need to read this and have an opinion.

“Here and Now” is shilling for the College Board

Did you think public radio doesn’t have advertising? Think again.

Last week Here and Now’s host Jeremy Hobson set up College Board’s James Montoya for a perfect advertisement regarding a story on SAT scores going down. The transcript and recording are here (hat tip Becky Jaffe).

To set it up, they talk about how GPAs are going up on average across the country but how, at the same time, the average SAT score went down last year.

Somehow the interpretation of this is that there’s grade inflation and that kids must be in need of more test prep because they’re dumber.

What is the College Board?

You might think, especially if you listen to this interview, that the College Board is a thoughtful non-profit dedicated to getting kids prepared for college.

Make no mistake about it: the College Board is a big business, and much of their money comes from selling test prep stuff on top of administering tests. Here are a couple of things you might want to know about the College Board from its Wikipedia page:

Consumer rights organization Americans for Educational Testing Reform (AETR) has criticized College Board for violating its non-profit status through excessive profits and exorbitant executive compensation; nineteen of its executives make more than $300,000 per year, with CEO Gaston Caperton earning $1.3 million in 2009 (including deferred compensation).[10][11] AETR also claims that College Board is acting unethically by selling test preparation materials, directly lobbying legislators and government officials, and refusing to acknowledge test-taker rights.[12]

Anyhoo, let’s just say it this way: College Board has the ability to create an “emergency” about SAT scores, by say changing the test or making it harder, and then the only “reasonable response” is to pay for yet more test prep. And somehow Here and Now’s host Jeremy Hobson didn’t see this coming at all.

The interview

Here’s an excerpt:

HOBSON: It also suggests, when you look at the year-over-year scores, the averages, that things are getting worse, not better, because if I look at, for example, in critical reading in 2006, the average being 503, and now it’s 496. Same deal in math and writing. They’ve gone down.

MONTOYA: Well, at the same time that we have seen the scores go down, what’s very interesting is that we have seen the average GPAs reported going up. So, for example, when we look at SAT test takers this year, 48 percent reported having a GPA in the A range compared to 45 percent last year, compared to 44 percent in 2011, I think, suggesting that there simply have to be more rigor in core courses.

HOBSON: Well, and maybe that there’s grade inflation going on.

MONTOYA: Well, clearly, that there is grade inflation. There is no question about that. And it’s one of the reasons why standardized test scores are so important in the admission office. I know that, as a former dean of admission, test scores help gauge the meaning of a GPA, particularly given the fact that nearly half of all SAT takers are reporting a GPA in the A range.

Just to be super clear about the shilling, here’s Hobson a bit later in the interview:

HOBSON: Well – and we should say that your report noted – since you mentioned practice – that as is the case with the ACT, the students who take the rigorous prep courses do better on the SAT.

What does it really mean when SAT scores go down?

Here’s the thing. SAT scores are fucked with ALL THE TIME. Traditionally, they had to make SATs harder since people were getting better at them. As test-makers, they want a good bell curve, so they need to adjust the test as the population changes and as their habits of test prep change.

The result is that SAT tests are different every year, so just saying that the scores went down from year to year is meaningless. Even if the same group of kids took those two different tests in the same year, they’d have different scores.

Also, according to my friend Becky who works with kids preparing for the SAT, they really did make substantial changes recently in the math section, changing the function notation, which makes it much harder for kids to parse the questions. In other words, they switched something around to give kids reason to pay for more test prep.

Important: this has nothing to do with their knowledge, it has to do with their training for this specific test.

If you want to understand the issues outside of math, take for example the essay. According to this critique, the number one criterion for essay grade is length. Length trumps clarity of expression, relevance of the supporting arguments to the thesis, mechanics, and all other elements of quality writing. As my friend Becky says:

I have coached high school students on the SAT for years and have found time and again, much to my chagrin, that students receive top scores for long essays even if they are desultory, tangent-filled and riddled with sentence fragments, run-ons, and spelling errors.

Similarly, I have consistently seen students receive low scores for shorter essays that are thoughtful and sophisticated, logical and coherent, stylish and articulate.

As long as the number one criterion for receiving a high score on the SAT essay is length, students will be confused as to what constitutes successful college writing and scoring well on the written portion of the exam will remain essentially meaningless. High-scoring students will have to unlearn the strategies that led to success on the SAT essay and relearn the fundamentals of written expression in a college writing class.

If the College Board (the makers of the SAT) is so concerned about the dumbing down of American children, they should examine their own role in lowering and distorting the standards for written expression.

Conclusion

Two things. First, shame on College Board and James Montoya for acting like SAT scores are somehow beacons of truth without acknowledging the fiddling that goes on time and time again by his company. And second, shame on Here and Now and Jeremy Hobson for being utterly naive and buying entirely into this scare tactic.

The art of definition

Definitions are basic objects in mathematics. Even so, I’ve never seen the art of definition explicitly taught, and I have rarely seen the need for a definition explicitly discussed.

Have you ever noticed how damn hard it is to make a good definition and yet how utterly useful a good definition can be?

The basic definitions inform the research of any field, and a good definition will lead to better theorems than a bad one. If you get them right, if you really nail down the definition, then everything works out much more cleanly than otherwise.

So for example, it doesn’t make sense to work in algebraic geometry without the concepts of affine and projective space, and varieties, and schemes. They are to algebraic geometry like circles and triangles are to elementary geometry. You define your objects, then you see how they act and how they interact.

I saw first hand how a good definition improves clarity of thought back in grad school. I was lucky enough to talk to John Tate (my mathematical hero) about my thesis, and after listening to me go on for some time with a simple object but complicated proofs, he suggested that I add an extra sentence to my basic object, an assumption with a fixed structure.

This gave me a bit more explaining to do up front (though even that added intuition) and greatly simplified the statement and proofs of my theorems. It also improved my talks about my thesis. I could now go in and spend some time motivating the definition, and then state the resulting theorem very cleanly once people were convinced.

Another example from my husband’s grad seminar this semester: he’s starting out with the concept of triangulated categories coming from Verdier’s thesis. One mysterious part of the definition involves the so-called “octahedral axiom,” which mathematicians have been grappling with ever since it was invented. As far as Johan tells it, people struggle with why it’s necessary but not that it’s necessary, or at least something very much like it. What’s amazing is that Verdier managed to get it right when he was so young.

Why? Because definition building is naturally iterative, and it can take years to get it right. It’s not an obvious process. I have no doubt that many arguments were once fought over the most basic definitions, although I’m no historian. There’s a whole evolutionary struggle that I can imagine could take place as well: people could make the wrong definition, and the community would not be able to prove good stuff about it, so it would eventually give way to stronger, more robust definitions. Better to start out carefully.

Going back to that, I think it’s strange that the building up of definitions is not explicitly taught. I think it’s a result of the way math is taught as if it’s already known, so the mystery of how people came up with the theorems is almost hidden, never mind the original objects and questions about them. For that matter, it’s not often discussed whether a given theorem is important, only whether it’s true. Somehow the “importance” conversations happen in quiet voices over wine at the seminar dinners.

Personally, I got just as much out of Tate’s help with my thesis as anything else about my thesis. The crystalline focus that he helped me achieve with the correct choice of the “basic object of study” has made me want to do that every single time I embark on a project, in data science or elsewhere.

Experimentation in education – still a long way to go

Yesterday’s New York Times ran a piece by Gina Kolata on randomized experiments in education. Namely, they’ve started to use randomized experiments like they do in medical trials. Here’s what’s going on:

… a little-known office in the Education Department is starting to get some real data, using a method that has transformed medicine: the randomized clinical trial, in which groups of subjects are randomly assigned to get either an experimental therapy, the standard therapy, a placebo or nothing.
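The key move in that kind of trial is the random assignment itself. Here’s a minimal sketch, with hypothetical names throughout (it is not the Institute of Education Sciences’ procedure): shuffle the subjects, then deal them out to the arms so that the groups differ only by chance. A seeded generator makes the assignment reproducible, which helps if someone wants to audit it later.

```python
import random


def randomly_assign(subjects, arms, seed=None):
    """Shuffle subjects, then deal them round-robin into the given arms.

    Arm sizes differ by at most one, and which subject lands in which arm
    is determined only by the shuffle.
    """
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    return {arm: shuffled[i::len(arms)] for i, arm in enumerate(arms)}


students = [f"student_{i}" for i in range(10)]
arms = ["new_textbook", "standard_textbook", "no_change"]  # hypothetical arms
groups = randomly_assign(students, arms, seed=1)
for arm, members in groups.items():
    print(arm, len(members))
```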

They have preliminary results:

The findings could be transformative, researchers say. For example, one conclusion from the new research is that the choice of instructional materials — textbooks, curriculum guides, homework, quizzes — can affect achievement as profoundly as teachers themselves; a poor choice of materials is at least as bad as a terrible teacher, and a good choice can help offset a bad teacher’s deficiencies.

So far, the office — the Institute of Education Sciences — has supported 175 randomized studies. Some have already concluded; among the findings are that one popular math textbook was demonstrably superior to three competitors, and that a highly touted computer-aided math-instruction program had no effect on how much students learned.

Other studies are under way. Cognitive psychology researchers, for instance, are assessing an experimental math curriculum in Tampa, Fla.

If you go to any of the above links, you’ll see that the metric of success is consistently defined as a standardized test score. That’s the only gauge of improvement. So any “progress” that’s made is by definition measured by such a test.

In other words, if we optimize to this system, we will optimize for textbooks which raise standardized test scores. If it doesn’t improve kids’ test scores, it might as well not be in the book. In fact it will probably “waste time” with respect to raising scores, so there will effectively be a penalty for, say, fun puzzles, or understanding why things are true, or learning to write.

Now, if scores are all we cared about, this could and should be considered progress. Certainly Gina Kolata, the NYTimes journalist, didn’t mention that we might not care only about this – she recorded it as unfettered good, as she was expected to by the Education Department, no doubt. But, as a data scientist who gets paid to think about the feedback loops and side effects of choices like “metrics of success,” I have a problem with it.

I don’t have a thing against randomized tests – using them is a good idea, and will maybe even quiet some noise around all the different curriculums, online and in person. I do think, though, that we need to have more ways of evaluating an educational experience than a test score.

After all, if I take a pill once a day to prevent a disease, then what I care about is whether I get the disease, not which pill I took or what color it was. Medicine is a very outcome-focused discipline in a way that education is not. Of course, there are exceptions, say when the treatment has strong and negative side-effects, and the overall effect is net negative. Kind of like when the teacher raises his or her kids’ scores but also causes them to lose interest in learning.

If we go the way of the randomized trial, why not give the students some self-assessments and review capabilities of their text and their teacher (which is not to say teacher evaluations give clean data, because we know from experience they don’t)? Why not ask the students how they liked the book and how much they care about learning? Why not track the students’ attitudes, self-assessment, and goals for a subject for a few years, since we know longer-term effects are sometimes more important than immediate test score changes?
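On the analysis side, the change is small: record several outcomes per student and compare the arms on each one, instead of collapsing everything into a single test score. A minimal sketch follows; the field names (`test_score`, `interest`, `self_assessment`) and all the numbers are invented for illustration, not taken from any study.

```python
from collections import defaultdict
from statistics import mean


def summarize_by_arm(records, metrics):
    """Average each metric separately within each arm of the trial."""
    by_arm = defaultdict(list)
    for record in records:
        by_arm[record["arm"]].append(record)
    return {
        arm: {metric: mean(r[metric] for r in rows) for metric in metrics}
        for arm, rows in by_arm.items()
    }


# Invented records: arm B scores higher on the test but lower on interest.
records = [
    {"arm": "A", "test_score": 70, "interest": 4, "self_assessment": 3},
    {"arm": "A", "test_score": 74, "interest": 5, "self_assessment": 4},
    {"arm": "B", "test_score": 78, "interest": 2, "self_assessment": 3},
    {"arm": "B", "test_score": 80, "interest": 3, "self_assessment": 2},
]
summary = summarize_by_arm(records, ["test_score", "interest", "self_assessment"])
for arm, averages in summary.items():
    print(arm, averages)
```

Judged on test scores alone, arm B looks like the clear winner; the extra columns are what would reveal the trade-off.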

In other words, I’m calling for collecting more and better data beyond one-dimensional test scores. If you think about it, teenagers get treated better by their cell phone companies or Netflix than by their schools.

I know what you're thinking: that students are all lazy and would complain about anyone or anything that gave them extra work. My experience is that kids actually aren't like this; they know the difference between rote work and real learning, and they love the learning part.

Another complaint I hear coming – long-term studies take too long and are too expensive. But ultimately these things do matter in the long term, and as we’ve seen in medicine, skimping on experiments often leads to bigger and more expensive problems. Plus, we’re not going to improve education overnight.

And by the way, if and/or when we do this, we need to implement strict privacy policies for the students' answers; you don't want a 7-year-old's attitude about math held against him or her when applying to college.

Educational accountability scores get politically manipulated again

My buddy Jordan Ellenberg just came out with a fantastic piece in Slate entitled “The Case of the Missing Zeroes: An astonishing act of statistical chutzpah in the Indiana schools’ grade-changing scandal.”

Here are the leading sentences of the piece:

Florida Education Commissioner Tony Bennett resigned Thursday amid claims that, in his former position as superintendent of public instruction in Indiana, he manipulated the state’s system for evaluating school performance. Bennett, a Republican who created an A-to-F grading protocol for Indiana schools as a way to promote educational accountability, is accused of raising the mark for a school operated by a major GOP donor.

Jordan goes on to explain exactly what happened and how the manipulation took place. It turns out it was a pretty outrageous and easy-to-understand lie about missing zeroes which didn't make any sense. You should read the whole thing; Jordan is a great writer, and his fantasy about how he would deal with a student trying the same scam in his calculus class is perfect.

A few comments to make about this story overall.

- First of all, it’s another case of a mathematical model being manipulated for political reasons. It just happens to be a really simple mathematical model in this case, namely a weighted average of scores.

- In other words, the lesson for corrupt politicians in the future may well be to make sure the formulae are more complicated and thus easier to game.

- Or in other words, let’s think about other examples of this kind of manipulation, where people in power manipulate scores after the fact for their buddies. Where might it be happening now? Look no further than the Value-Added Model for teachers and schools, which literally nobody understands or could prove is being manipulated in any given instance.

- Taking a step further back, let’s remind ourselves that educational accountability models in general are extremely ripe for gaming and manipulation due to their high stakes nature. And the question of who gets the best opportunity to manipulate their scores is, as shown in this example of the GOP-donor-connected school, often a question of who has the best connections.

- In other words, I wonder how much the system can be trusted to give us a good signal on how well schools actually teach (at least how well they teach to the test).

- And if we want that signal to be clear, maybe we should take away the high stakes and simply measure it, with no consequences. Then, instead of punishing schools with bad scores, we could see where they need help.

- The conversation doesn't profit from our continued crazy-high expectations and fundamental belief in the existence of a silver bullet, the latest one being the KIPP charter schools; read this reality check if you're wondering what I'm talking about (hat tip Jordan Ellenberg).

- As any statistician could tell you, any time you have an “educational experiment” involving highly motivated students, parents, and teachers, it will seem like a success. That’s called selection bias. The proof of the pudding lies in the scaling up of the method.

- We need to think longer term and consider how we're treating good teachers and school administrators who have to live under arbitrary and unfair systems. They might just leave.
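To make the selection-bias point concrete, here is a toy simulation. It is a minimal sketch under made-up assumptions, not data from KIPP or any real program: each student's score depends only on a hypothetical "motivation" level, the "program" has zero effect by construction, and only the most motivated families opt in. The function names, the score formula, and the opt-in threshold are all hypothetical.

```python
from statistics import mean

def outcome(motivation: float) -> float:
    # Hypothetical score model: depends only on motivation; the program adds nothing.
    return 50 + 40 * motivation

def compare(n: int = 1000, opt_in_threshold: float = 0.7) -> dict:
    # Hypothetical population whose motivation is spread evenly over [0, 1).
    motivations = [i / n for i in range(n)]
    # Self-selection: only the most motivated families sign up for the "experiment".
    volunteers = [m for m in motivations if m >= opt_in_threshold]
    everyone_else = [m for m in motivations if m < opt_in_threshold]
    return {
        "volunteers": mean(outcome(m) for m in volunteers),
        "everyone_else": mean(outcome(m) for m in everyone_else),
        # Scaling up: give the same (useless) program to every student.
        "scaled_up": mean(outcome(m) for m in motivations),
    }

for group, avg in compare().items():
    print(f"{group}: {avg:.1f}")
# volunteers: 84.0
# everyone_else: 64.0
# scaled_up: 70.0
```

The volunteers look about 14 points better than the scaled-up average even though the program does nothing by construction; the apparent gain disappears only once the program is extended to every student.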

MOOCs, their failure, and what is college for anyway?

Have you read this recent article in Slate about how San Jose State University canceled its online courses after more than half the students failed? The failure rate ranged from 56 to 76 percent for five basic undergrad classes with an enrollment limit of 100 students.

Personally, I'm impressed that so many people passed them, considering how lightweight the connection is in such courses. Maybe it's because they weren't free; they cost $150.

It all depends on what you were expecting, I guess. It raises the question of what college is for anyway.

I was talking to a business guy about the MOOC potential for disruption, and he mentioned that, as a Yale undergrad himself, he never learned a thing in classes, that in fact he skipped most of his classes to hang out with his buddies. He somehow thought MOOCs would be a fine replacement for that experience. However, when I asked him whether he still knew any of his buddies from college, he acknowledged that he does business with them all the time.

To me, this confirms my theory that college is more about making connections than education per se. Although I learned a lot of math in college, I also made a friend who helped me get into grad school and even introduced me to my thesis advisor.

Measuring Up by Daniel Koretz

This is a guest post by Eugene Stern.

Now that I have kids in school, I've become a lot more familiar with high-stakes testing, which is the practice of administering standardized tests with major consequences for the students who take them (you have to pass to graduate), their teachers (who are often evaluated based on standardized test results), and their school districts (state funding depends on test results). To my great chagrin, New Jersey, where I live, is in the process of putting such a teacher evaluation system in place (for a lot more detail and criticism, see here).

The excellent John Ewing pointed me to a pretty comprehensive survey of standardized testing called “Measuring Up,” by Harvard Ed School prof Daniel Koretz, who teaches a course there about this stuff. If you have any interest in the subject, the book is very much worth your time. But in case you don’t get to it, or just to whet your appetite, here are my top 10 takeaways:

- Believe it or not, most of the people who write standardized tests aren't idiots. Building effective tests is a difficult measurement problem! Koretz makes an analogy to political polling, which is a good reminder that a test result is really a sample from a distribution (if you take multiple versions of a test designed to measure the same thing, you won't do exactly the same each time), and not an absolute measure of what someone knows. It's also a good reminder that the way questions are phrased can matter a great deal.

- The reliability of a test is inversely related to the standard deviation of this distribution: a test is reliable if your score on it wouldn't vary very much from one instance to the next. That's a function of both the test itself and the circumstances under which people take it. More reliability is better, but the big trade-off is that increasing the sophistication of the test tends to decrease reliability. For example, tests with free-form answers can test for a broader range of skills than multiple choice, but they introduce variability across graders, and even the same person may grade the same test differently before and after lunch. More sophisticated tasks also take longer to do (imagine a lab experiment as part of a test), which means fewer questions on the test and a smaller cross-section of topics being sampled, again meaning more noise and less reliability.

- A complementary issue is bias, which is roughly about people doing better or worse on a test for systematic reasons outside the domain being tested. Again, there are trade-offs: the more sophisticated the test, the more extraneous skills beyond those being tested it may be bringing in. One common way to weed out such questions is to look at how people who score the same on the overall test do on each particular question: if you get variability you didn't expect, that may be a sign of bias. It's harder to do this for more sophisticated tests, where each question is a bigger chunk of the overall test. It's also harder if the bias is systematic across the test.

- Beyond the (theoretical) distribution from which a single student's score is a sample, there's also the (likely more familiar) distribution of scores across students. This depends both on the test and on the population taking it. For example, for many years, students on the eastern side of the US were more likely to take the SAT than those in the west, where only students applying to very selective eastern colleges took the test. Consequently, the score distributions were very different in the east and the west (and average scores tended to be higher in the west), but this didn't mean that there was bias or that schools in the west were better.

- The shape of the score distribution across students carries important information about the test. If a test is relatively easy for the students taking it, scores will be clustered to the right of the distribution, while if it's hard, scores will be clustered to the left. This matters when you're interpreting results: the first test is worse at discriminating among stronger students and better at discriminating among weaker ones, while the second is the reverse.

- The score distribution across students is an important tool in communicating results (you may not know right away what a score of 600 on a particular test means, but if you hear it's one standard deviation above a mean of 500, that's a decent start). It's also important for calibrating tests so that the results are comparable from year to year. In general, you want a test to have similar means and variances from one year to the next, but this raises the question of how to handle year-to-year improvement. This is particularly significant when educational goals are expressed in terms of raising standardized test scores.

- If you think in terms of the statistics of test score distributions, you realize that many of those goals of raising scores quickly are delusional. Koretz has a good phrase for this: the myth of the vanishing variance. The key observation is that test score distributions are very wide, on all tests, everywhere, including countries that we think have much better education systems than we do. The goals we set for student score improvement (typically, a high fraction of all students taking a test several years from now are supposed to score above some threshold) imply a great deal of compression at the lower end of this distribution, compression that has never been seen in any country, anywhere. It sounds good to say that every kid who takes a certain test in four years will score as proficient, but that corresponds to a score distribution with much less variance than you'll ever see. Maybe we should stop lying to ourselves?

- Koretz is highly critical of the recent trend to report test results in terms of standards (e.g., how many students score as "proficient") instead of comparisons (e.g., your score is in the top 20% of all students who took the test). Standards and standards-based reporting are popular because it's believed that American students' performance as a group is inadequate. The idea is that being near the top doesn't mean much if the comparison group is weak, so instead we should focus on making sure every student meets an absolute standard needed for success in life. There are (at least) three problems with this. First, how do you set a standard, i.e., what does proficient mean, anyway? Koretz gives enough detail here to make it clear how arbitrary the standards are. Second, you lose information: in the US, standards are typically expressed in terms of just four bins (advanced, proficient, partially proficient, basic), and variation inside the bins is ignored. Third, even standards-based reporting tends to slide back into comparisons: since we don't know exactly what proficient means, we're happiest when our school, or district, or state places ahead of others in the fraction of students classified as proficient.

- Koretz's other big theme is score inflation for high-stakes tests: if everyone is evaluated based on test scores, everyone has an incentive to get those scores up, whether or not that actually has much correlation with learning. If you remember anything from the book or from this post, remember this phrase: sawtooth pattern. The idea is that when a new high-stakes standardized test appears, average scores start at some base level, go up quickly as people figure out how to game the test, then plateau. If the test is replaced with another, the same thing happens: base, rapid growth, plateau. Repeat ad infinitum. Koretz and his collaborators did a nice experiment in which they went back to a school district where one high-stakes test had been replaced with another and administered the first test several years later. Now that teachers weren't teaching to the first test, scores on it reverted to the original base level. Moral: score inflation is real, pervasive, and unavoidable, unless we bite the bullet and do away with high-stakes tests.

-