Planning for the robot revolution

Yesterday I read this Wired magazine article about the robot revolution by Kevin Kelly called “Better than Human”. The idea of the article is to make peace with the inevitable robot revolution, and to realize that it’s already happened and that it’s good.

I like this line:

We have preconceptions about how an intelligent robot should look and act, and these can blind us to what is already happening around us. To demand that artificial intelligence be humanlike is the same flawed logic as demanding that artificial flying be birdlike, with flapping wings. Robots will think different. To see how far artificial intelligence has penetrated our lives, we need to shed the idea that they will be humanlike.

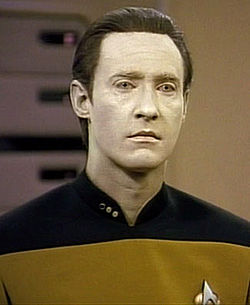

True! Let’s stop looking for a Star Trek Data-esque android (although he is very cool according to my 10-year-old during our most recent Star Trek marathon).

Instead, let’s realize that the typical artificial intelligence we can expect to experience in our lives is the web itself, inasmuch as it is a problem-solving, decision-making system, and our interactions with it through browsing and searching are both how we benefit from artificial intelligence and how it takes us over.

What I can’t accept about the Wired article, though, is the last part, where we should consider it good. But maybe it is only supposed to be good for the Wired audience and I’m asking for too much. My concerns are touched on briefly here:

When robots and automation do our most basic work, making it relatively easy for us to be fed, clothed, and sheltered, then we are free to ask, “What are humans for?”

Here’s the thing: it’s already relatively easy for us to be fed, clothed, and sheltered, but we aren’t doing it. That doesn’t seem to be our goal. So why would it suddenly become our goal because there is increasing automation? Robots won’t change our moral values, as far as I know.

Also, the article obscures economic and political reality. First it imagines the audience as a land- and robot-owning master:

Imagine you run a small organic farm. Your fleet of worker bots do all the weeding, pest control, and harvesting of produce, as directed by an overseer bot, embodied by a mesh of probes in the soil. One day your task might be to research which variety of heirloom tomato to plant; the next day it might be to update your custom labels. The bots perform everything else that can be measured.

Great, so the landowners will not need any workers at all. But then what about the people who don’t have a job? Oh wait, something magical happens:

Everyone will have access to a personal robot, but simply owning one will not guarantee success. Rather, success will go to those who innovate in the organization, optimization, and customization of the process of getting work done with bots and machines.

Really? Everyone will own a robot? How is that going to work? It doesn’t seem to be a natural progression from our current system. Or maybe they mean like the way people own phones now. But owning a phone doesn’t help you get work done if there’s no work for you to do.

But maybe I’m being too cynical. I’m sure there’s deep thought being put to this question. Oh here, in this part:

I ask Brooks to walk with me through a local McDonald’s and point out the jobs that his kind of robots can replace. He demurs and suggests it might be 30 years before robots will cook for us.

I guess this means we don’t have to worry at all, since 30 years is such a long, long time.

Note that I haven’t read the Wired article, but it seems to me that in most of these projections of future robot bliss they leave out two important parts. 1. There are already too many humans here. 2. That is one of the first things an aware artificial intelligence would notice.

I find it difficult to believe that a rational, logical intelligence would tolerate sharing the planet with us for any longer than it would take for said intelligence to conquer self-repair and replication obstacles. But I may be biased: as a human, I find it hard to live amongst us while we treat each other the way we do.

My prediction: in the face of an aware artificial intelligence, humanity will retreat into the oldest massively multiplayer fantasy game we have, organized religion. There we’ll pretend like it isn’t happening or that it’s not our fault.

That depends on what we program the AI for, doesn’t it? Just because it is rational and logical doesn’t mean we can’t or won’t program it. Someone has to. As nature has taught us, genetic algorithms are great, but only if you have lots of time on your hands.

@griznog I think you are ascribing urges to AI that there’s no reason to assume they’ll have, given the way they are currently developing. That *may* change, but for now there’s no reason to think AI would have any goal-seeking behavior which would lead them to view us as competition.

@mathbabe you point out my main fear, that the Luddite fallacy is only a fallacy in the short term. The cost of automation to do a given task puts an upper limit on what labor can command for doing that task instead. When the cost of automation drops … well, I think we’re seeing that to some extent now.

We have a very long history of “knowing” how some new development or discovery will turn out. We also have a very long history of being wrong. I don’t think we get to define what AI will ultimately mean, and further, once bootstrapped (sounds like a conservative view of AI) we will probably not control its ongoing development, if the current state of things could even be described as controlled. Without claiming to “know” our fate, I can’t help but be guided by our past. Which leads to the conclusion that we will all probably be wrong, but it’s more likely that the outcome will be far less within our control than we think.

I quote “know” because I am using it in the same sense that my mom uses it to “know” that the world is only 6000 years old. Which is to say that I don’t think reality is what we think it is; no one has a perspective that large. But a sufficiently powerful AI may have a perspective that large, which is my point. If it decides that the earth really is 6000 years old, then my mom wins.

The new crop of those in love with robots taking our jobs are too young to have read Limits to Growth or even heard about it.

@John Doe I’m old enough to have read it but had never heard of Limits to Growth, so I Amazon’d it. The 2004 30-year Update “just” has four stars. Turns out the two books I have read along these lines (The Long Emergency, and Collapse) also each have just four stars.

There are four books in the “Customers Who Bought This Item Also Bought” section of the Limits to Growth page that each have 4 1/2 stars. One is the 2008 “Thinking in Systems: A Primer” by the lead author (posthumously) of Limits to Growth. Two others came out in 2011.

Just ordered the Systems one, and The End of Growth: Adapting to Our New Economic Reality by Richard Heinberg. Came to $25.03…free shipping!

Nothing like a little true-life, 21st-century horror to pass the day.

As Pogo might have said were he still among us, “The Robots are us,” as we obsessively feed and worship our 4-wheeled machines and build them a growing network of pathways over the world, as we obsessively feed and worship our hand-helds and build them a growing network of pathways over the world. When the A.I.-bot network controlling all the networks becomes self-aware (or hacked and controlled by misanthropes), shall we be primed, willing and able to perpetuate such perfidy?

Here are a couple of links that grapple with that very subject:

http://jacobinmag.com/2011/12/four-futures/

http://noahpinionblog.blogspot.com/2012/08/desire-modification-ultimate-technology.html

I’m not very convinced that humanity will live any better like that either. We’ve all read that some lost tribes have very egalitarian societies where people are happier, but since you can’t unknow the known, I guess the only way is to keep running forward.

Anyway, there is a technology I’m dying to get my hands on: 3D printing. It’s like a modern cornucopia.

The thing is that AI programs have already taken lots of jobs and are likely to take more. You don’t earn a middle class living looking at wiring charts and assigning phone numbers anymore the way you might have back in 1939. Why? Because computers changed the whole way phone numbers are assigned and they do the assigning. You don’t earn a middle class living as a general purpose travel agent anymore the way you might have back in 1999. Why? Because computers with clever algorithms in them do that now. You don’t earn a middle class living spray painting automobile body parts on an assembly line anymore. Why? Because there are robots that can do it faster and better, and they don’t get poisoned by paint fumes, and they can tap into the plant’s computer system better than you can.

You can go down a long list of jobs that have been replaced by artificial intelligence programming and robots. The rule of thumb now is that a $75,000 robot replaces a low end production line worker, and that number is just going down. It’s too easy to get swayed by the glamorous term “artificial intelligence” and start thinking about HAL 9000 setting its own mission priorities or one of the thermo-stellar bombs in Dark Star discovering phenomenology. I took enough AI courses in college to know that while AI tackles hard problems, it spins off less glamorous sounding subfields as it spins off solutions. So, we have things like heuristic optimization, search algorithms, machine vision, game playing programs, non-player characters, voice recognition systems and so on, but not Robby the Robot cranking out bootleg hooch mixed with fusel oil for us.

As for the real world economics of this, well, we’re already facing a crisis of rising productivity failing to produce high enough wages to maintain demand. If everyone increases the productivity of his or her work by 10%, either all wages have to go up 10% or that extra 10% sits on the shelf or in a warehouse somewhere. (There is an alternative approach in which prices remain constant as product quality rises, but this makes it harder to track productivity without some kind of arbitrary metric.)
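The arithmetic in that last paragraph can be sketched in a few lines. This is only a toy illustration under assumptions that are not in the comment itself: wages are the only source of demand, prices stay constant, and before the change wages exactly bought all output. The function name `unsold_share` is made up for this sketch.

```python
def unsold_share(productivity_gain: float, wage_gain: float) -> float:
    """Fraction of the new, larger output that wages cannot buy back.

    Toy assumptions (not from the original comment): wages are the only
    source of demand, prices are constant, and before the change wages
    exactly bought all output.
    """
    output = 1.0 + productivity_gain  # units produced, relative to before
    demand = 1.0 + wage_gain          # units wages can buy at the old price
    return max(0.0, (output - demand) / output)


# Productivity and wages both up 10%: everything produced gets bought.
matched = unsold_share(0.10, 0.10)

# Productivity up 10%, wages flat: the extra output sits on the shelf.
flat_wages = unsold_share(0.10, 0.0)
```

With flat wages, the unsold part is 0.1 out of 1.1 units, or roughly 9% of the new output, which is the "extra 10%" measured against the old output.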

“As for the real world economics of this, well, we’re already facing a crisis of rising productivity failing to produce high enough wages to maintain demand.”

Leaving the impact of any future robotic and/or AI developments aside, I see this as a sign of the futility of basing your societies and global economy on growth when you live on a rock of finite size. One of my favorite quotes by Richard Dawkins is

“It is a simple logical truth that, short of mass emigration into space, with rockets taking off at the rate of several million per second, uncontrolled birth-rates are bound to lead to horribly increased death-rates. It is hard to believe that this simple truth is not understood by those leaders who forbid their followers to use effective contraceptive methods. They express a preference for ‘natural’ methods of population limitation, and a natural method is exactly what they are going to get. It is called starvation.”

I think you can apply that logic to the global economy as well: there simply are real, finite and unavoidable limits to growth on this planet, and we (assuming “we” are around long enough) *will* learn to live within them, regardless of whether we learn by thinking ahead or the hard way by looking back.

Circling back to the robots/AI, if/when it does all go wrong for our species, I think a robot/AI apocalypse is far more interesting than starvation. For many reasons, not the least of which is I am holding out hope that I’ll be picked as a specimen for the future AI world zoo and get to fling poop at the robots who come to see me.

Love this: “I am holding out hope that I’ll be picked as a specimen for the future AI world zoo and get to fling poop at the robots who come to see me.”

Don’t mean to be a party-pooper here, but by the time they have human zoos, those robots will almost certainly regard that particular substance differently than we humans do.

Today we are cursed by the fact that we each live in our own reality and stumble around handicapped by our limited perception, trying to bend the world around us to what we see or want to see in it. That curse becomes a blessing when I fling my poo at the robots: in my limited human reality it’ll be irreverent, funny and satisfying, and I won’t care what the robots think. Go with your strengths.

Point well taken. Amusement is valuable. Tying this in with today’s mathbabe post, in a world of busy adults much of my teenaged perspective seems as valuable as ever to remember and revel in. Spiced up with just a wisp of experiential wisdom, it has been worth retaining.

Sounds good to me…not so far from what we’re doing now! LOL

This author is a Luddite. Robots will take no more jobs than the machines did at the turn of the century a hundred years ago. It will MOVE the jobs around, but there WILL be plenty of jobs. STOP SCARING PEOPLE!

I am curious what sectors you predict those jobs will be in and what they will entail. Say robots learn to pick strawberries, then we have unemployed strawberry pickers. They learn to build roads, we have unemployed road construction workers. Robots advance to the stage of diagnostic medicine and performing invasive procedures, we have unemployed doctors. Basically as robotics and AI advance and each of these formerly human dominated fields falls, exactly where do you see all the people a) getting retrained (and at what expense…) and b) getting jobs? People will be put out of work, need to be retrained, see the new training replaced by robots, be out of work, … Where does the cycle end? Realistically the problem isn’t not enough jobs, the problem is too many people and too many of those people convinced that they need to consume more to be happy and that robots will help them consume more.

But, I have a plan. When it begins to look like robots will take over the world, we start a high priority project to build a robot banker. Shortly after the first one proves its worth, you’ll see a quick end to robotic technology advancement. Too big to fail, too big to jail and, I do suspect, too big to be replaced by robotics and AI. The oligarchs will change culture (or allow it to change) when the current path threatens their position, and not before. Of course a robot banker would violate the Three Laws by its very existence, which is fitting as it needs to be above the law to be a robot banker.

(FWIW, I am a below average system administrator and arrive at work each day expecting to have been replaced by a small shell script. When that happens, my goal is to be retrained as a robot therapist then later, as noted above, a poo flinger.)
