Pseudoscience at Gate B6

This is a guest post written by Matt Freeman, an epidemiologist and nurse practitioner. His fields are adolescent and men’s health. He holds a doctorate in nursing from Duke University, a master’s in nursing from The Ohio State University, a master’s in epidemiology and public health from The Yale School of Medicine, and a Bachelor of Arts from Brandeis University. His blog is located at www.medfly.org.

It was mid-morning on a Saturday. I had only hand luggage, and had checked in online the day before. I arrived at the small airport exactly one hour before departure. I was a bit annoyed that the flight was delayed, but otherwise not expecting too much trouble. It was a 90-minute flight on a 70-seat regional jet.

By my best estimate, there were 80 passengers waiting to enter the security checkpoint. Most seemed to be leisure travelers: families with little kids, older adults. There was an abundance of sunburn and golf shirts.

The queue inched along. As I looked around, anxiety was escalating. There was a lot of chatter about missing flights; several people were in tears knowing that they would certainly have their travel plans fall into disarray.

One TSA employee with two stripes on his lapels walked his way through the increasingly antsy crowd.

“What is the province of your destination?” he asked the woman next to me.

“Province?”

“Yes, which province? British Columbia? Ontario?”

Confused, the woman replied, “I’m going to Houston. I don’t know what province that’s in.”

The TSA agent scoffed. He moved on to the next passenger. “The same question for you, ma’am. What is the province of your destination?”

The woman didn’t speak, handing over her driver’s license and boarding card, assuming that was what he wanted. He stared back with disdain.

There are no flights from this airport to Canada.

When it was my turn, I volunteered, “I’m going to Texas, not Canada.”

“What are the whereabouts of your luggage?” he asked.

“Their whereabouts? My bag is right here next to me.”

“Yes, what are its whereabouts?”

“It’s right here.”

“And that’s its whereabouts?”

This was seeming like a grammatical question.

“And about its contents? Are you aware of them?”

“Yes,” I replied, quizzically.

He moved on.

I missed my flight. The woman next to me met the same fate. She cried. I cringed. We pleaded with the airline agent for clemency. The plane pushed back from the gate with many passengers waiting to be asked about the whereabouts of their belongings or their province of destination.

The agent asking the strange questions and delaying the flights was a part of the SPOT program.

The SPOT Program

In 2006, the US Transportation Security Administration (TSA) introduced “SPOT: Screening Passengers by Observational Techniques.” The concept was to identify nonverbal indicators that a passenger was engaged in foul play. Some years after the program started, the US Government Accountability Office (GAO) declared that “no scientific evidence exists to support the detection of or inference of future behavior.”

SPOT is expensive too. The GAO reported that the program has cost more than $900 million since its inauguration. That is just the cost of training staff and operating the program, not the costs incurred by delayed or detained passengers.

The “Science” Behind Behavioral Techniques

Several sources contributed to the SPOT program, but one psychologist is most prominent in the field: Paul Ekman PhD.

Ekman published Emotion in the Human Face, which demonstrated that six basic human emotions (anger, sadness, fear, happiness, surprise, and disgust) are universally expressed on the human face. Ekman had travelled to New Guinea to show that facial expressions did not vary across geography or culture.

Ekman’s theory was undisputed for 20 years until Lisa Feldman Barrett PhD showed that Ekman’s research required observers to select from the list of six emotions. When observers were asked to analyze emotions without a list, there was some reliability in the recognition of happiness and fear. The other emotions could not be distinguished.

When confronted with skepticism from scientists, Ekman declined to release the details of his research for peer review.

Charles Honts, Ph.D., attempted to replicate Ekman’s findings at the University of Utah. No dice. Ekman’s “secret” findings could not be replicated. Maria Hartwig PhD, a psychologist at City University of New York’s John Jay College of Criminal Justice, described Ekman’s work as, “a leap of gargantuan dimensions not supported by scientific evidence.”

When asked directly, a TSA analyst pointed to the work of David Givens, Ph.D., an anthropologist and author. Givens has published popular works on body language, but Givens explained that the TSA did not specify which elements of his own theories were adopted by the TSA, and the TSA never asked him.

The TSA’s Response

When asked for statistics, TSA analyst Carl Maccario cited one anecdote of a passenger who was “rocking back and forth strangely,” and was later found to have been carrying fuel bottles that contained flammable materials. The TSA described these items as, “the makings of a pipe bomb,” but there was no evidence that the passenger was doing anything other than carrying a dangerous substance in his hand luggage. There was nothing to suggest that he planned to hurt anyone.

A single anecdote is not research, and this was a weak story at best.

When the GAO investigated further, they analyzed the data of 232,000 passengers who were identified by “behavioral detection” as cause for concern. Of the 232,000, there were 1,710 arrests. These arrests were mostly due to outstanding arrest warrants, and there is no evidence that any were ever linked to terrorist activity.
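
To put those two figures in proportion, here is a small back-of-the-envelope calculation. It uses only the numbers quoted above; the variable names are illustrative and nothing here comes from the GAO report beyond those two counts.

```python
# Figures as quoted in the paragraph above
referred = 232_000   # passengers flagged by "behavioral detection"
arrests = 1_710      # arrests among those flagged

# Share of flagged passengers who were arrested for any reason
arrest_rate = arrests / referred

# How many passengers were flagged for each arrest
referrals_per_arrest = referred / arrests

print(f"{arrest_rate:.2%} of flagged passengers were arrested")
print(f"roughly 1 arrest per {referrals_per_arrest:.0f} flagged passengers")
```

Even this small fraction overstates the program's relevance to terrorism, since the arrests were mostly for outstanding warrants.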

What Criteria Are Used in the SPOT Program?

In 2015, The Intercept published the TSA’s worksheet for behavioral detection officers. Here it is:

As much as the TSA’s behavioral detection mathematical model is hilarious, it is also frightening. The model seeks to identify whistling and shaving.

If I score myself before a typical flight, I earn eight points, which assigns me to the highest risk category. If one followed the paperwork, I would be referred for extensive screening and law enforcement would be notified.

Considering that the criteria include yawning, whistling, a subjectively fast “eye blink rate,” “strong body odor” and head turning, just about everyone reaches the SPOT threshold.

The Risk of Scoring

Looking past the absence of evidence, there are further problems with the SPOT worksheet. “Scored” decisions can detract from common sense. It does not matter if a hijacker or terrorist fails to whistle or blinks at a normal rate if he or she blows up the airplane.

The Israeli Method

As an Israeli national, I became accustomed to the envied security techniques employed at Israel’s four commercial airports.

The agents employed by the Israeli Airports Authority (IAA) do indeed “profile” passengers, but their efforts are often quicker, easier, and arguably more sensitive.

The questions are usually reasonable and fast. “Where have your bags been since you packed them?” “Did anyone give you anything to take with you?” “Are you carrying anything that could be used as a weapon?”

The IAA is cautious about race and religion. The worst attack on Israeli air transportation took place in 1972 at Ben Gurion Airport. Twenty-six people were killed. The assailants were Japanese, posing as tourists. Since that attack, the IAA has attempted to include ethnicity and religion only as components of its screening process.

Although many have published horror stories, the overwhelming majority of passengers do not encounter anything extraordinary at Israeli airports. The agents are usually young, bubbly, right out of their army service, and eager to show off any language skills they may have acquired.

Is There a Better Answer?

Israel does not publish statistics, and I could not tell you if their system is any better. The difference is one of attitude: most of the IAA staff are kind, calm, and not interested in hassling anyone. They do not care how fast you are blinking or if you shave.

Given the amount of air travel to, from, and within the United States, I doubt that questioning passengers would ever work. The TSA lacks the organization, multilingual skills, and service mentality of the Israel Airports Authority.

The TSA already has one answer, but they chose not to use it in my case. I am a member of the Department of Homeland Security’s “Global Entry” program. This means that I was subject to a background check, interview, and fingerprinting. The Department of Homeland Security vetted my credentials and deemed that I did not present any extraordinary risks, and could therefore use its “PreCheck” lane. But this airport had decided to close its PreCheck lane that day. And their SPOT agent had no knowledge that I had already been vetted through databases and fingerprints… arguably a more reliable system than having him determine if I blinked too rapidly.

Until 2015, the PreCheck program also meant that one need not pass through a full-body scanning machine, in part because the machines are famously slow and inaccurate. They are particularly problematic for those with disabilities and other medical conditions. But the TSA decided that it would switch to random use of full body scanners even for those passengers who had already been vetted. Lines grew longer; no weapons have been discovered.

Looking Forward

- The SPOT program has been proven to be ineffective. There is no rational reason to keep it in place.

- There must not be quotas or incentives for detailed searches and questioning in the absence of probable cause.

- Passengers consenting to a search should have the right to know what the search entails, particularly if it involves odd interrogation techniques that can lead to missing one’s flight.

- The TSA should respect previous court rulings that the search process begins when a passenger consents to being searched. Asking questions outside of the TSA’s custodial area of the airport is questionable for legal reasons.

- Reduce lines. The attacks in Rome and Vienna were more than four decades ago, but that has not dissuaded the TSA. Get the queue moving quickly, thereby reducing the opportunity for an attack. The more recent attack in Brussels still did not change TSA policy.

- Stratified screening, such as the PreCheck program, makes sense. But if TSA staff elect to ignore the program, then it is no longer useful.

References

Benton H, Carter M, Heath D, and Neff J. The Warning. The Seattle Times. 23 July 2002.

Borland J. Maybe surveillance is bad, after all. Wired. 8 August 2007.

Dicker K. Yes, the TSA is probably profiling you and it’s scientifically bogus. Business Insider. 6 May 2015.

Herring A. The new face of emotion. Northeastern Magazine. Spring 2014.

Kerr O. Do travelers have a right to leave airport security areas without the TSA’s permission? The Washington Post. 6 April 2014.

Martin H. Conversations are more effective for screening passengers, study finds. The Los Angeles Times. 16 November 2014.

The men who stare at airline passengers. The Economist. 6 June 2010.

Segura L. Feeling nervous? 3,000 Behavioral Detection Officers will be watching you at the airport this Thanksgiving. Alternet. 23 November 2009.

Smith T. Next in line for the TSA? A thorough ‘chat down.’ National Public Radio. 16 August 2011.

Wallis R. Lockerbie: The Story and the Lessons. London: Praeger. 2000.

Weinberger S. Intent to deceive: Can the science of deception detection help catch terrorists? Nature. 465:27. May 2010.

US House of Representatives. Behavioral Science and Security: Evaluating the TSA’s SPOT Program. Hearing Before the Subcommittee on Investigation and Oversight. Committee on Science, Space, and Technology. Serial 112-11. 6 April 2011.

What you tweet could cost you

Yesterday I came across this Reuters article by

In insurance Big Data could lower rates for optimistic tweeters.

The title employs a common marketing rule. Frame bad news as good news. Instead of saying, Big data shifts costs to pessimistic tweeters, mention only those who will benefit.

So, what’s going on? In the usual big data fashion, it’s not entirely clear. But the idea is your future health will be measured by your tweets and your premium will go up if it’s bad news. From the article:

In a study cited by the Swiss group last month, researchers found Twitter data alone a more reliable predictor of heart disease than all standard health and socioeconomic measures combined.

Geographic regions represented by particularly high use of negative-emotion and expletive words corresponded to higher occurrences of fatal heart disease in those communities.

To be clear, no insurance company is currently using Twitter data against anyone (or for anyone), at least not openly. The idea outlined in the article is that people could set up accounts to share their personal data with companies like insurance companies, as a way of showing off their healthiness. They’d be using a company like digi.me to do this. Monetize your data and so on. Of course, that would be the case at the beginning, to train the algorithm. Later on who knows.

While we’re on the topic of Twitter, I don’t know if I’ve had time to blog about University of Maryland Computer Science Professor Jennifer Golbeck. I met Professor Golbeck in D.C. last month when she interviewed me at Busboys and Poets. During that discussion she mentioned her paper, Predicting Personality from Social Media Text, in which she inferred personality traits from Twitter data. Here’s the abstract:

This paper replicates text-based Big Five personality score predictions generated by the Receptiviti API—a tool built on and tied to the popular psycholinguistic analysis tool Linguistic Inquiry and Word Count (LIWC). We use four social media datasets with posts and personality scores for nearly 9,000 users to determine the accuracy of the Receptiviti predictions. We found Mean Absolute Error rates in the 15–30% range, which is a higher error rate than other personality prediction algorithms in the literature. Preliminary analysis suggests relative scores between groups of subjects may be maintained, which may be sufficient for many applications.

Here’s how the topic came up. I was mentioning Kyle Behm, a young man I wrote about in my book who was denied a job based on a “big data” personality test. The case is problematic. It could represent a violation of the Americans with Disabilities Act, and a lawsuit filed in court is pending.

What Professor Golbeck demonstrates with her research is that, in the future, employers won’t even need to notify applicants that their personalities are being scored at all. It could happen without their knowledge, through their social media posts and other culled information.

I’ll end with this quote from Christian Mumenthaler, CEO of Swiss Re, one of the insurance companies dabbling in Twitter data:

I personally would be cautious what I publish on the internet.

At the Wisconsin Book Festival!

I arrived in Madison last night and had a ridiculously fantastic meal at Forequarter thanks to my friends Shamus and Jonny of the Underground Food Collective.

Delicious!

I’m here to give a talk at the Wisconsin Book Festival, which will take place today at noon, and I’m excited to have my buddy Jordan Ellenberg introduce me at my talk.

I’ll also stop by beforehand at WORT for a conversation with Patty Peltekos on her show called A Public Affair, as well as afterwards at the local NPR station, WPR, for a show called To The Best of Our Knowledge. These might be recorded; I don’t know when they’re airing.

What a city! Very welcoming and fun. I should visit more often.

Facebook’s Child Workforce

I’ve become comfortable with my gadfly role in technology. I know that Facebook would characterize their new “personalized learning” initiative, Summit Basecamp, as innovative if not downright charitable (hat tip Leonie Haimson). But again, gadfly.

What gets to me is how the students involved – about 20,000 students in more than 100 charter and traditional public schools – are really no more than an experimental and unpaid workforce, spending classroom hours training the Summit algorithm and getting no guarantee in return of real learning.

Their parents, moreover, are being pressured to sign away all sorts of privacy rights for those kids. And, get this, Basecamp would “require disputes to be resolved through arbitration, essentially barring a student’s family from suing if they think data has been misused.” Here’s the quote from the article that got me seriously annoyed, from the Summit CEO Diane Tavenner herself:

“We’re offering this for free to people,” she said. “If we don’t protect the organization, anyone could sue us for anything — which seems crazy to me.”

To recap. Facebook gets these kids to train their algorithm for free, whilst removing them from their classroom time, offering no evidence that they will learn anything, making sure that they’ll be able to use the children’s data for everything short of targeted ads, and also ensuring the parents can’t even hire a lawyer to complain. That sounds like a truly terrible deal.

Here’s the thing. The kids involved are often poor, often minority. They are the most surveilled generation and the most surveilled subpopulation out there, ever. We have to start doing better for them than unpaid work for Facebook.

Guest post: An IT insider’s mistake

This is a guest post by an IT Director for a Fortune 500 company who has worked with many businesses and government agencies.

It was my mistake. My daughter’s old cell phone had died. My wife offered to get a new phone from Verizon and give that to me and then give my daughter my old phone. Since I work with Microsoft it made sense for me to get the latest Nokia Lumia model. It’s a great looking phone, with a fantastic camera, and a much bigger screen than my old model. I told my wife not to wipe all the data off my old phone but to just get the phone numbers switched, and we could then delete all my contacts from my old phone. While you can remove an email account on the phone, you can’t change the account that is associated with Windows Phone’s cloud. So my daughter manually deleted all my phone contacts and added her own to my old phone – but before that I had synced up my new phone to the cloud and got all my contacts downloaded to it. Within 24 hours, the Microsoft Azure cloud had re-synced both phones, so now all the deletes my daughter did propagated to my new phone.

I lost all my contacts.

I panicked, went back to the Verizon store, and they told me that we had to flash my old phone to factory settings. But they didn’t have a way for me to get my contacts back. And they had no way for me to contact Microsoft directly to get them back either. The Windows Phone website lists no contact phone number for customer support – Microsoft relies on the phone carriers to provide this, apparently believing that being a phone manufacturer doesn’t require you to have a call center that can resolve consumer issues. I see this as a policy flaw.

I had the painstaking process of figuring out how to get my phone contacts back, maybe one at a time.

But the whole cloud-syncing episode made me think about how we’ve come to trust that we can keep everything on our phones without backing it up adequately. In 2012, the Wired reporter Mat Honan described how a hacker systematically deleted all the personal information, including baby photos, that he had saved to the cloud from his Apple devices. The big three phone manufacturers (Apple, Google and Microsoft) now have a lot of personal information in their clouds about all of us cell phone users. Each company, on its own, can create a Kevin Bacon style “six degrees of separation” contacts map that would make the NSA proud. I lost more than 100 phone contacts, and each of those people likely has a similar number, or more, plugged into their own phone, and so on. If the big three (AGM, not to be confused with Annual General Meetings) colluded, they could even create a real-time locator map showing where all our contacts are right now, all around the world. Think of the possibilities for tracking: cheating spouses, late lunches at work, what time you quit drinking at the local, what sporting events you go to, which clients your competitors are meeting with, etc. Microsoft’s acquisition of LinkedIn makes this sharing of information even more powerful. Now they’ll have our phone numbers and email contacts and some professional correspondence too.

I don’t trust Google. Their motto of “don’t be evil” raises the question: why do they have to remind themselves of that? Some years ago they were reported to be scanning emails written to and from Gmail accounts. Spying on what your customers think of as private correspondence strikes me as evil. And just last week Yahoo admitted to doing the same thing on behalf of the government, scanning for a very specific search phrase. I hope the NSA got their suspect with that request, and it wasn’t just a trial balloon to see how far they could go with pressuring the big data providers and aggregators. Yes, I can see the guys in suits and dark glasses approaching Marissa Mayer, “Trust us, this will save lives. We believe there’s the risk of an imminent terrorist attack”. I hope they arrest someone and bring charges, even if only to justify Marissa’s position.

So why do I bring all that up? I believe we need consumer personal data protection rights. Almost like credit reporting. The big three (AGM) personal data aggregators and Facebook and LinkedIn collect a lot of personal data about each of us. We should have the right to know what they keep about us, and to possibly correct that record, like we do with the credit bureaus. We should be able to get a free digital copy of our personal data at least annually. The personal data aggregators should also have to report who they share that information with, and in what form. Do they pass along our phone contact information or email accounts to third-party providers, or license them to other companies to help them do their business? The Europeans are ahead of America in protecting privacy rights on the internet, with the right to be forgotten, and the right to correct data. We should not be left behind in making our lives safer from invasion of our privacy and loss of personal security.

We need to know. The personal data aggregators need to be held to higher standards.

New America event next Monday

Hey D.C. folks!

I’ll be back in your area next Monday for an event at the New America Foundation from noon to 1:30pm. It will also be livestreamed.

It’s going to be a panel discussion with some super interesting folks:

David Robinson is co-founder and principal at Upturn, a team of technologists working to give people a meaningful voice in how technology shapes their lives. David leads the firm’s work on automated decisions in the criminal justice system.

Rachel Levinson-Waldman is senior counsel to the Liberty and National Security Program at the Brennan Center for Justice. She is an expert on surveillance technology and national security issues, and a frequent commentator on the intersection of policing, technology and civil rights.

Daniel Castro is vice president at the Information Technology and Innovation Foundation (ITIF) and director of ITIF’s Center for Data Innovation. He was appointed by U.S. Secretary of Commerce Penny Pritzker to the Commerce Data Advisory Council.

K. Sabeel Rahman is an assistant professor of law at Brooklyn Law School, an Eric and Wendy Schmidt fellow at New America, and a Four Freedoms fellow at the Roosevelt Institute. He is the author of Democracy Against Domination (Oxford University Press 2017), and studies the history, values, and policy strategies that animate efforts to make our society more inclusive and democratic, and our economy more equitable.

Also, I wrote an essay for New America in preparation for the event, entitled Alien Algorithms.

I hope I see you next Monday!

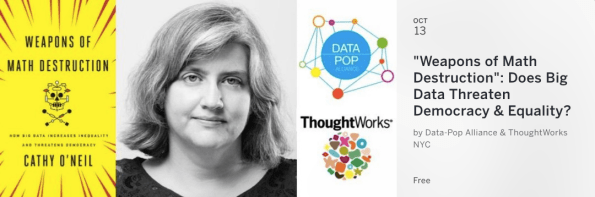

Three upcoming NY events, starting tonight at Thoughtworks

I’ve got three upcoming New York events I wanted people to know about.

Thoughtworks/ Data-Pop Alliance Tonight

First, I’ll be speaking tonight starting at 6:30pm at Thoughtworks, at 99 Madison Ave. It’s co-hosted by Data-Pop Alliance, and after giving a brief talk about my book I’ll be joined for a panel discussion by Augustin Chaintreau (Columbia University), moderated by Emmanuel Letouzé (Data-Pop Alliance and MIT Media Lab). There will be Q&A as well. More here.

Betaworks next week

Next I’ll be talking with the folks at Betaworks about my book next Thursday evening, starting at 6:30pm, at 29 Little West 12th Street. You can get more information and register for the event here.

Data & Society in two weeks

Finally, if the world of New York City data hasn’t gotten sick of hearing from me, I’ll be giving a “Databite” (with whiskey!) at Data & Society the afternoon of Wednesday, October 26th, starting at 4pm. Data & Society is located at 36 West 20th Street, 11th Floor. I will update this post with a registration link when I have it.

Facial Recognition is getting really accurate, and we have not prepared

There’s reason to believe facial recognition software is getting very accurate. According to a WSJ article by Laura Mills, Facial Recognition Software Advances Trigger Worries, a Russian company called NTechLab has built software that “correctly matches 73% of people to large photo database.” The stat comes from celebrities recognized in a database of a million pictures.

Now comes the creepy part. The company, headed by two 20-something Russian tech dudes, is not worried about the ethics of its algorithms. Here are the founders’ reasons:

- Because it’s already too late to worry. In the words of one of the founders, “There is no private life.”

- They don’t need to draw a line in the sand for who they give this technology to, because “we don’t receive requests from strange people.”

- Also, the technology should be welcomed, rather than condemned, because, according to one of the founders, “There is always a conflict between progress and some scared people. But in any way, progress wins.”

Thanks for the assurance!

Let’s compare those reasons not to worry with the reasons we do have to worry, which include:

- The founders are in negotiations to sell their products to state-affiliated security firms from China and Turkey.

- Moscow’s city government is planning to install NTechLab’s technology on security cameras around the city.

- They were already involved in a scandal in which people used their software to identify and harass women who had allegedly acted in pornographic films online in Russia.

A Plethora Of Podcasts

I’ve been busy recording podcasts recently, and I wanted to collect some of them together for your convenience and listening pleasure.

New York Times Book Review and more!

Please forgive me for posting constantly about the amazing press my book is getting, I’m milking this once-in-a-lifetime opportunity.

Because, HOLY SHIT!

Clay Shirky wrote a review of my book in the NY Times Book Review in the context of a Reader’s Guide To This Fall’s Big Book Awards. It has this attractive accompanying graphic:

Also, I talked with Russ Roberts a while back about my book and it’s now on EconTalk as a podcast.

Also, when I visited London last week I made a trip to the Guardian offices and recorded a Guardian Science podcast which also features Douglas Rushkoff.

Harvard Bookstore Tonight & In New Yorker

I’m on my way to Boston by train today for a book talk and Q&A at the Harvard Bookstore in Harvard Square at 7pm. More information here.

Please join if you’re around, I’d love to see you.

Also!

My book and my bluegrass band The TomTown Ramblers were featured in this week’s Talk of The Town in the New Yorker. I’m hoping this means we’ll end the day with more than 34 followers on Twitter.

For the record, we sent them pics of the band but they opted for this illustration instead. Very New Yorkerish.

Other recent mentions of my book:

- Boston Globe‘s Kate Tuttle interviewed me about my book.

- I was quoted in the WSJ by Christopher Mims in an article about Facebook and political microtargeting.

- My book was referred to in this excellent New Yorker article about online discrimination by Tammy Kim.

The “One of Many” Fallacy

I’ve been on book tour for nearly a month now, and I’ve come across a bunch of arguments pushing against my book’s theses. I welcome them, because I want to be informed. So far, though, I haven’t been convinced I made any egregious errors.

Here’s an example of an argument I’ve seen consistently when it comes to the defense of the teacher value-added model (VAM) scores, and sometimes the recidivism risk scores as well. Namely, that the teacher’s VAM scores were “one of many considerations” taken to establish an overall teacher’s score. The use of something that is unfair is less unfair, in other words, if you also use other things which balance it out and are fair.

If you don’t know what a VAM is, or what my critique about it is, take a look at this post, or read my book. The very short version is that it’s little better than a random number generator.

The obvious irony of the “one of many” argument is, besides the mathematical one I will make below, that the VAM was supposed to actually have a real effect on teachers assessments, and that effect was meant to be valuable and objective. So any argument about it which basically implies that it’s okay to use it because it has very little power seems odd and self-defeating.

Sometimes it’s true that a single inconsistent or badly conceived ingredient in an overall score is diluted by the other stronger and fairer assessment constituents. But I’d argue that this is not the case for how teachers’ VAM scores work in their overall teacher evaluations.

Here’s what I learned by researching and talking to people who build teacher scores: most of the other things they use – primarily scores derived from categorical evaluations by principals, teachers, and outside observers – have very little variance. Almost all teachers are considered “acceptable” or “excellent” by those measurements, so they all turn into the same number or numbers when scored. That’s not a lot to work with, if the bottom 60% of teachers have essentially the same score, and you’re trying to locate the worst 2% of teachers.

The VAM was brought in precisely to introduce variance to the overall mix. You introduce numeric VAM scores so that there’s more “spread” between teachers, so you can rank them and you’ll be sure to get teachers at the bottom.

But if those VAM scores are actually meaningless, or at least extremely noisy, then what you have is “spread” without accuracy. And it doesn’t help to mix in the other scores.

In a statistical sense, even if you allow 50% or more of a given teacher’s score to consist of non-VAM information, the VAM score will still dominate the variance of a teacher’s score. Which is to say, the VAM score will comprise much more than 50% of the information that goes into the score.

An extreme version of this is to think about making the non-VAM 50% of a teacher’s score always exactly the same. Denote it by 50. When we take the population of teacher VAM scores and average each one with 50, the resulting scores lie between 25 and 75, instead of 0 and 100, but besides being squished into a smaller range, they haven’t changed with respect to each other. Their relative rankings, in particular, do not change. So whoever was unlucky enough to get a bad VAM score will still be on the bottom.

In symbols, a VAM score x becomes the overall score y = (x + 50)/2.

This holds true for other choices of “50” as well.
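The extreme case can be checked in a few lines of Python. This is only a sketch: the five VAM scores are hypothetical, and `blend` and `ranking` are helper names I made up for the illustration.

```python
def blend(vam_scores, constant=50.0):
    """Average each VAM score with a fixed non-VAM score: y = (x + constant) / 2."""
    return [(x + constant) / 2 for x in vam_scores]


def ranking(scores):
    """Teacher indices ordered from lowest score to highest."""
    return sorted(range(len(scores)), key=lambda i: scores[i])


# Hypothetical VAM scores for five teachers.
vam_scores = [12.0, 87.0, 45.0, 3.0, 66.0]

# The range is squished, but the ordering is untouched for any constant.
for constant in (0.0, 50.0, 90.0):
    blended = blend(vam_scores, constant)
    same_order = ranking(blended) == ranking(vam_scores)
    print(constant, blended, "same ranking:", same_order)
```

Whatever constant you pick, the teacher with the lowest VAM score stays at the bottom.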

A word about recidivism risk scores. It’s true that judges use all sorts of information in determining a defendant’s sentencing, or bail, or parole. But if one of the most trusted and most statistically variable inputs is flawed – and in this case racist – then a similar argument to the above could be made, and the conclusion would be as follows: the overall effect of using flawed recidivism risk scores is stronger, rather than weaker, than one might expect given its weighting. We have to be more worried about it, not less.

Stuff I’ve been reading this week

- The Vagina Dispatches (must watch video)

- Massive Yahoo data breach

- Gaming the Mayo markets

- Big data meets insurance, there’s an explosion

- Wells Fargo employees were fired for whistleblowing

- Low-value customers are NOT always right

- Amazon does not give customers the best deal

- Why the mainstream media is ignoring the nationwide prison strike

- Gun rights are for white people

- Drowning in systemic injustice

I also discussed a bunch of these topics on this week’s Slate Money.

In London next week

I’m flying to London Sunday night to conduct my UK book tour. Here’s the schedule so far:

Cambridge University

Date: Tuesday, September 27th

Time: 12:30pm

Place: Faculty of Education, 184 Hills Road, Cambridge

More info: here

London’s How To Academy

Date: Tuesday, September 27th

Time: 6:45pm

Place: CNCFD- Condé Nast College of Fashion & Design, 16-17 Greek Street, Soho, London

More info: here

King’s College London

Date: Wednesday, September 28th

Time: 3pm

Place: S-2.08, King’s College London, Strand, London

More info: here

In addition to the above, I’ll also be on BBC’s Today Programme on Tuesday morning, and I’ll be interviewed by Significance, the Royal Statistical Society & American Statistical Association magazine, the Guardian Science podcast, and Business Daily for BBC’s The World Service.

WMD articles and interviews

I haven’t been posting too often, in part because I’ve been traveling a lot on book tour, and also because I’ve been writing for other things and interviewing quite a bit. Today I wanted to share some of that stuff.

- I wrote a Q&A for Jacobin called Welcome to the Black Box.

- I wrote a piece for Slate called How Big Data Transformed Applying to College.

- Times Higher Education chose my book as their reviewed Book of the Week and had a nice spread about it.

There may be more, and I’ll post them when I remember them.

Also, great news! My book is a best-seller in Canada! Those Canadians are just the smartest.

When statisticians ignore statistics

This article about recidivism risk algorithms in use in Philadelphia really bothers me (hat tip Meredith Broussard). Here’s the excerpt that gets my goat:

“As a Black male,” Cobb asked Penn statistician and resident expert Richard Berk, “should I be afraid of risk assessment tools?”

“No,” Berk said, without skipping a beat. “You gotta tell me a lot more about yourself. … At what age were you first arrested? What is the date of your most recent crime? What are you charged with?”

Let me translate that for you. Cobb is speaking as a black man, then Berk, who is a criminologist and statistician, responds to Cobb as an individual.

In other words, Cobb is asking whether black men are systematically discriminated against by this recidivism risk model. Berk answers that he, individually, might not be.

This is not a reasonable answer. It’s obviously true that any process, even discriminatory processes that have disparate impact on people of color, might have exceptions. They might not always discriminate. But when someone who is not a statistician asks whether black men should be worried, then the expert needs to interpret that appropriately – as a statistical question.

And maybe I’m overreacting – maybe that was an incomplete quote, and maybe Berk, who has been charged with building a risk tool for $100,000 for the city of Philadelphia, went on to say that risk tools in general are absolutely capable of systematically discriminating against black men.

Even so, it bothers me that he said “no” so quickly. The concern that Cobb brought up is absolutely warranted, and the correct answer would have been “yes, in general, that’s a valid concern.”

I’m glad that later on he admits that there’s a trade-off between fairness and accuracy, and that he shouldn’t be the one deciding how to make that trade-off. That’s true.

However, I’d hope a legal expert could have piped in at that moment to mention that we are constitutionally guaranteed fairness, so the trade-off between accuracy and fairness should not really be up for discussion at all.

Let’s hear it for Penn Station bathrooms!

I don’t know about you, but every time I go into the bathroom at Penn Station I cry a little bit.

That’s because I remember the 1980’s version of them, and believe you me, they’re so much better now. I grew up in the Boston area but I visited a bunch in high school, which means I spent way too much time in the very few available public toilet facilities. So I can appreciate me some improved amenities.

They are relatively clean! They have toilet paper, consistently! There’s soap available next to working sinks! And, probably most importantly, it’s not a threatening experience with dirty needles all over the floor.

For that matter, while I’m on the theme, have you noticed how much nicer JFK is now compared to 1988? Maybe it’s because I’ve been flying JetBlue a lot, but that terminal is nothing like the broken-down middle school experience I remember not so fondly.

That’s all I have today, just gratitude and anti-nostalgia. And I’m sure there are lots of things we miss as well from those days of New York City, but right now I can’t think of any besides cheaper rent.

National Book Awards Longlist Finalist!

It’s been an amazing two weeks – or actually, holy crap, only 10 days – since my book launched.

I found out two days ago that my book made it onto the Longlist for the National Book Award in Nonfiction, along with 9 other books. I haven’t had time to read the other books, but I did want to mention that last year’s NBA Nonfiction winner was Ta-Nehisi Coates’s excellent Between The World And Me, which I highly recommend.

What’s exciting about being on this list is that it means the ideas in the book will get exposure. So many really excellent books never get read by many people, because of bad timing, or small marketing and publicity budgets, or just bad luck. I’m so lucky to have a book that’s been given an extremely generous amount of all of that.

This week I’ve been busy on the West Coast going to book events and giving talks. My last one is today at noon in Berkeley (820 Barrows Hall). I’ve gotten almost no sleep what with jetlag, weird traveling requirements, and pure adrenaline, but it’s been absolutely incredible.

It’s been especially fantastic to meet the people who come to these events, which so far have taken place in Seattle, the San Francisco area, and a couple in New York last week. It seems like almost every person has something to tell me, a story of algorithms they encounter at work, or that their friends do, or questions about how to get a job that they can feel proud of in data science. Some of them are lawyers offering to talk to me about FOIA law or the Privacy Act. Incredible.

Some people who have read the book already will tell me it really changed their perspective, and others will tell me they’ve been waiting years for this book to be written, because it echoes their experience and long-held skepticism.

What?! Do you guys know what that means? It means the book is working!

In any case I’m overwhelmed and grateful to be able to talk to all of them and to start and continue the conversation. It’s never been more timely, and although I had hoped to get the book out sooner, I actually ended up thinking the timing couldn’t be better.

There’s only one thing. I wish I could send a message back to myself four years ago, when I decided to write the book, or even better, to Christmas 2014, when I was convinced it was an unwritable book. I’d just want to send some encouragement, a signal that it would eventually cohere. Those were some dark days, as my family can attest to.

Luckily for me, I had good friends who kept me from losing all hope. Thank you, blog readers, and thank you friends, and thank you Jordan and Laura especially, you guys are the best!

More creepy models

One of the best things about having my book out (finally!) is that once people read it, or hear interviews or read blogs about it, they sometimes send me more examples of creepy models. I have two examples today.

The first is a company called Joberate which scores current employees (with a “J-score”) on their risk of looking for a job (hat tip Marc Sobel). Kind of like an overbearing, nosy boss, but in automated and scaled digital form. They claim they’re not creepy because they use only publicly available data.

Next, we’ve got Litify (hat tip Nikolai Avteniev), an analytics firm in law that’s attempting to put automatic scoring into litigation finance. Litify advertises itself thus:

…Litify is led by an experienced executive team, including one of the world’s most influential and successful lawyers, well known VC’s and software visionaries. Litify will transform the way legal services are delivered connecting the firm and the client with new apps and will use artificial intelligence to predict the outcome of legal matters, changing the economy of law. Litify.com will become a household consumer name for getting legal assistance and make legal advice dramatically more accessible to the people…

What could possibly go wrong?

Book talk at Occupy today!

I’ll be giving a version of my book talk at the Alt Banking meeting today. Please come!

Here are the deets:

When: 2-3pm today

Where: Room 409 of the International Affairs Building, 118th and Amsterdam