The Value Added Teacher Model Sucks

Today I want you to read this post (hat tip Jordan Ellenberg) written by Gary Rubinstein, which is the post I would have written if I’d had time and had known that they released the actual value-added model scores to the public in machine-readable format here.

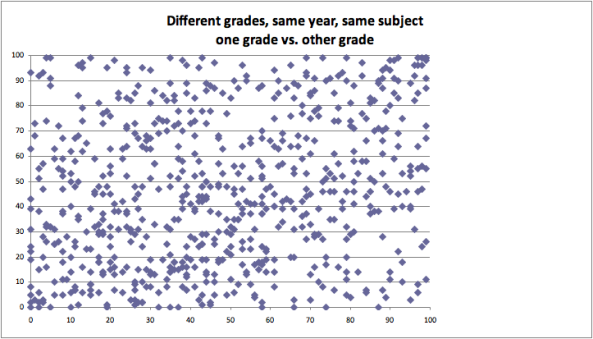

If you’re a total lazy-ass and can’t get yourself to click on that link, here’s a sound bite takeaway: a scatter plot of scores for the same teacher, in the same year, teaching the same subject to kids in different grades. So, for example, a teacher might teach math to 6th graders and to 7th graders and get two different scores; how different are those scores? Here’s how different:

Yeah, so basically random. In fact a correlation of 24%. This is an embarrassment, people, and we cannot let this be how we decide whether a teacher gets tenure or how shamed a person gets in a newspaper article.
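For anyone who wants to check a number like that against the released scores, here is a minimal sketch of the calculation in Python. The function names and the toy score pairs are made up for illustration and are not taken from the city's data; the only thing borrowed from the plot is the 24% figure.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of the same length, at least 2 long")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / math.sqrt(var_x * var_y)

def variance_explained(r):
    """Share of variance in one score that a straight line through the other predicts."""
    return r * r

# Hypothetical example: each pair is one teacher's score in two different grades.
grade_6_scores = [10, 35, 50, 62, 90]
grade_7_scores = [20, 70, 100, 124, 180]  # exactly double, so perfectly correlated
print(pearson(grade_6_scores, grade_7_scores))

# The 24% correlation reported for the real scatter plot:
print(variance_explained(0.24))
```

Squaring the correlation is the sobering part: at 0.24, one of a teacher's scores linearly predicts only about 6% of the variance in the other.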

Just imagine if you got publicly humiliated by a model with that kind of noise which was purportedly evaluating your work, which you had no view into and thus you couldn’t argue against.

I’d love to get a meeting with Bloomberg and show him this scatter plot. I might also ask him why, if his administration is indeed so excited about “transparency,” do they release the scores but not the model itself, and why they refuse to release police reports at all.

What on earth is going on? These plots don’t just look bad, but crazy, which is not what I expected.

Whoever approved the choice of this model owes the public either an explanation of why it is not as bad as this makes it seem, or an explanation of how such an apparently random system got chosen and why we can trust that future decisions will be made more responsibly.

Henry: Please please bring this up with Bill.

My reactions, in order: (1) This is insane. (2) My undergraduate students should know better than to trust a model which produces results like this. (3) Now I know what the kids will be analyzing for their next exam. (4) This is insane.

I’ve seen the decisions that get made in education. They are made by politicians, not statisticians or economists or mathematicians. The decisions are made by people who are popular, not people who are smart.

Maybe releasing this data to the public was the stupidest move the politicians have ever made. Let’s hope we can make it so.

Politicians didn’t release this on purpose. They KNOW you’re not supposed to pay any attention to these numbers until you’ve, at the very least, averaged over several years of consistent measurement (and even that may not be enough). But the data are public records, subject to Freedom of Information Act requests, and they had no choice.

If they KNOW they’re not supposed to pay any attention to these numbers etc.–and they not only pay attention to them but use them to guide decisions, both about educational practices and about hiring/firing–why the hell are they?

And when did teachers become everyone’s favorite scapegoat?

My guesses:

1) Because in politics it’s better to have bad ideas than no ideas (Gingrich has been around for how long now?)

2) Tough to say, but it probably started because the unions of most other professions have been weakened to near-irrelevancy. Now it’s just teachers unions and police unions.

Simple when you look at the cash flow in public education and what Bain Capital could do with this flow. CEO bonuses out the Wahzoooooooo!!!

Great post. Have you seen this:

http://www.washingtonpost.com/local/education/creative--motivating-and-fired/2012/02/04/gIQAwzZpvR_story.html

Err, I thought that just about everyone knew that there’s no way to tell luck (or call it “external factors not under direct control”) from skill (call it “what you can control”).

From that perspective, a 24% correlation is not that hugely bad (i.e. it’s about what you’d expect), although basing any performance review on this is clearly stupid (basing ANY performance review on two data points, or even three, is stupid).

IIRC, CEO performance and company performance have about a 30% correlation (which translates to about 20% value add compared to a randomly deciding monkey. Clearly overpaid, but still worth some money). So in theory you could use this data and benchmark against CEO performance using the 30% correlation 😉

I’d be interested whether longer data series are still low-correlation or not.
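One rough way to put a number on that question is the Spearman-Brown formula from classical test theory. The sketch below rests on strong assumptions that are not from the post or the released data: each score is a stable teacher effect plus independent noise of the same size every time, and 0.24 is the correlation between two single scores. The function name is hypothetical.

```python
def averaged_reliability(r_single, k):
    """Spearman-Brown: expected correlation between two averages of k scores each,
    given that two single scores correlate at r_single.

    Assumes a stable underlying effect plus independent, equally noisy scores.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    return k * r_single / (1 + (k - 1) * r_single)

# How the agreement would grow if 0.24 held for single scores (an assumption).
for n_scores in (1, 3, 5, 10):
    print(n_scores, round(averaged_reliability(0.24, n_scores), 2))
```

Under those assumptions, averaging five scores only gets the agreement to about 0.61, and ten scores to about 0.76. Whether the real multi-year data behave this way is exactly the open question.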

Here’s another article for your readers to look at: http://www.nytimes.com/2012/03/05/nyregion/in-brooklyn-hard-working-teachers-sabotaged-when-student-test-scores-slip.html

This article describes one of the major mathematical flaws with value added test calculations: in high performing schools small declines in student performance result in large declines in “value added”… Perhaps your readers with a background in statistics could try to explain that to Bill Gates, one of the business leaders who is underwriting this move to value added assessments….

Bill should spend a bit of his money to hire a statistician, who then could explain to him that a relatively large proportion of small schools at the top does not automatically mean they are better than large schools. Or, if he wants to do it on the cheap, someone should point him to http://en.wikipedia.org/wiki/Clustering_illusion
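The small-schools effect is easy to reproduce with a toy simulation. In this sketch every student, in every school, is drawn from the same distribution, so no school is truly better than any other; the school counts, sizes, and function name are all made-up assumptions, not real data.

```python
import random

def share_of_small_in_top(n_schools_each=200, small_size=25, large_size=400,
                          top_fraction=0.1, seed=0):
    """Fraction of top-ranked schools that are small when all schools are equally good."""
    rng = random.Random(seed)
    schools = []
    for label, size in (("small", small_size), ("large", large_size)):
        for _ in range(n_schools_each):
            # Same score distribution for every student: differences are pure chance.
            mean_score = sum(rng.gauss(0, 1) for _ in range(size)) / size
            schools.append((mean_score, label))
    schools.sort(reverse=True)  # highest average score first
    top = schools[: max(1, int(len(schools) * top_fraction))]
    return sum(1 for _, label in top if label == "small") / len(top)

print(share_of_small_in_top())
```

Half of the simulated schools are small, yet they take nearly all of the top slots (and, by the same logic, nearly all of the bottom ones), simply because an average over 25 students is much noisier than an average over 400.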

Since the purpose of the model is humiliation, for the policy goal of destroying the public school system, it seems to be achieving its goal. Pavlov taught us about the random infliction of pain, right?

Lambert is accurate…but this “infliction of pain” is not “random”-read Naomi Klein’s, “The Shock Doctrine-rise of disaster capitalism”…or ask Poly-Sci people who know 70’s-80’s South-Central American history of U.S. imperialism. Or, read William Blum’s, “Killing Hope”, or Perkins’, “Confessions Of An Economic Hit Man”, for “privatization” schemes…

As far as “datasource used in a statistically inept way to push forward preposterous models and conclusions” goes, the Bloomberg workstation probably ranks #1. So what you are telling us does not astonish me in the least!

I retired two years ago after 35 years as a public high school math/science teacher. I came from a relatively weak union. If it was eliminated there would be a mass exodus. Just before I left I was given a taste of the value-added model concerning the “trajectory” of students. I think I got out just in time.

Just as NCLB guaranteed that 90% of schools would be “failing” in less than 10 years, the “trajectory” model would label 90% of teachers “ineffective” within a very short time. Yuck! Of course, we math snobs can’t stand it when some administrator who couldn’t add without a calculator is trying to explain how this all works.

The correlation you present suffers from a problem: you don’t compare the same group of students or the same content, so why expect a high correlation? Teacher impact should count, and it does, but it’s not everything. This is saying it accounts for 25% of your variation; the other two sources I mention account for 75%.

Sorry, no. The point of these scores is that they are supposed to be measuring the value of the teacher. The point of the model is to take into account differences in students. And moreover, the teachers are evaluated on the basis of these numbers, not on the basis of the students.
